Since I've been messing with voxels anyway, I wrote an implementation of an alternative 3D rendering method that is used primarily in medical imaging: volume rendering.

Volume rendering and voxel rendering are alike in that both raytrace 3D data stored in voxel grids. In volume rendering, however, a ray is not cast into the volume to find the nearest filled voxel. Instead, it starts at the rearmost voxel and samples the volume at regular intervals along its path. At every sample, the local normal and lighting are calculated while the voxel is passed through a so-called classification pipeline, which maps the voxel to a predefined tissue type and assigns a color and transparency accordingly.
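The sampling loop described above can be sketched on the CPU in a few lines. This is a minimal illustration, not the actual shader: it assumes nearest-neighbour sampling, a volume stored as nested lists indexed `volume[z][y][x]`, and a hypothetical `classify` callback standing in for the classification pipeline. Samples are composited back to front with the standard "over" operator.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
RGBA = Tuple[float, float, float, float]
Volume = Sequence[Sequence[Sequence[float]]]  # indexed as volume[z][y][x]


def sample_nearest(volume: Volume, p: Vec3) -> Optional[float]:
    """Nearest-neighbour lookup; returns None when p lies outside the grid."""
    x, y, z = (int(round(c)) for c in p)
    if 0 <= z < len(volume) and 0 <= y < len(volume[0]) and 0 <= x < len(volume[0][0]):
        return volume[z][y][x]
    return None


def march_back_to_front(volume: Volume, near: Vec3, far: Vec3, step: float,
                        classify: Callable[[float], RGBA]) -> RGBA:
    """Sample the ray segment near->far at regular intervals and composite the
    classified samples back to front, starting with the sample farthest from the eye."""
    length = sum((f - n) ** 2 for n, f in zip(near, far)) ** 0.5
    count = int(length / step) + 1          # evenly spaced samples, about `step` apart
    denom = max(1, count - 1)
    samples: List[Vec3] = [
        tuple(n + (f - n) * i / denom for n, f in zip(near, far))
        for i in range(count)
    ]
    r = g = b = a = 0.0
    for p in reversed(samples):             # rearmost sample first
        value = sample_nearest(volume, p)
        if value is None:                   # outside the volume: contributes nothing
            continue
        cr, cg, cb, ca = classify(value)
        # "over" operator: the nearer sample partially hides what lies behind it
        r = ca * cr + (1.0 - ca) * r
        g = ca * cg + (1.0 - ca) * g
        b = ca * cb + (1.0 - ca) * b
        a = ca + (1.0 - ca) * a
    return (r, g, b, a)
```

A common alternative is front-to-back compositing, which allows a ray to stop early once it is nearly opaque; back-to-front is used here only because it matches the description of rays starting at the rearmost voxel.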

Remember that volume rendering was developed for medical purposes, mainly the visualization of CT scans. That makes it necessary to filter out noise and display only the relevant tissues, which is why the computationally expensive classification step is needed.
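To make the classification idea concrete, here is a hypothetical classifier. The tissue names and Hounsfield-unit ranges are rough, assumed values chosen for illustration (not calibrated thresholds), and the `visible` parameter is an invented way to select which tissues are shown. Everything that falls outside a visible range, such as air, noise, or hidden tissues, becomes fully transparent.

```python
from typing import Dict, Iterable, Tuple

RGBA = Tuple[float, float, float, float]

# Illustrative tissue classes as (min_hu, max_hu, rgba). The ranges are rough,
# assumed Hounsfield-unit bands for this example only.
TISSUES: Dict[str, Tuple[float, float, RGBA]] = {
    "fat":         (-150.0,  -50.0, (1.0, 0.9, 0.6, 0.05)),
    "soft_tissue": (  20.0,   80.0, (0.8, 0.3, 0.3, 0.10)),
    "bone":        ( 300.0, 3000.0, (1.0, 1.0, 0.95, 0.80)),
}

TRANSPARENT: RGBA = (0.0, 0.0, 0.0, 0.0)


def classify(ct_value: float, visible: Iterable[str]) -> RGBA:
    """Return the colour/opacity of the first visible tissue whose range contains ct_value.

    Values outside every visible range are made fully transparent, which is
    how irrelevant data is filtered out of the final image.
    """
    for name in visible:
        lo, hi, rgba = TISSUES[name]
        if lo <= ct_value <= hi:
            return rgba
    return TRANSPARENT
```

Low opacities for soft tissue and a high opacity for bone let the skull show through the surrounding tissue when both are visible.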

A rather lengthy GLSL shader does most of the rendering work. To reduce the computational load in the shader, the color and transparency values for the different CT values are precalculated in BMax and stored in a lookup texture, which replaces the classification algorithm. Even so, the program still runs very slowly: normals and lighting have to be calculated for every voxel sample, which is expensive. Precalculating the normals is not an option, because a full normal volume would not fit in VRAM.
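The two ideas in the previous paragraph can be sketched on the CPU as follows. `bake_lut` precomputes an arbitrary classifier for every integer CT value, which is the kind of table that would be uploaded as a lookup texture. `gradient_normal` estimates a normal with central differences; that is a common technique but an assumption here, since the original shader isn't shown. It needs six extra volume reads per sample, which hints at why per-sample lighting is so costly. All function names are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

RGBA = Tuple[float, float, float, float]
Vec3 = Tuple[float, float, float]


def bake_lut(classify: Callable[[float], RGBA], lo: int, hi: int) -> List[RGBA]:
    """Precompute classify() for every integer CT value in [lo, hi].

    On the GPU such a table would be stored as a 1D texture, so the shader
    replaces the classification logic with a single texture fetch.
    """
    return [classify(float(v)) for v in range(lo, hi + 1)]


def lookup(lut: Sequence[RGBA], lo: int, ct_value: float) -> RGBA:
    """Fetch the baked colour for a CT value, clamping to the table's range."""
    i = int(round(ct_value)) - lo
    return lut[min(max(i, 0), len(lut) - 1)]


def gradient_normal(volume, x: int, y: int, z: int) -> Vec3:
    """Estimate the normal at an interior voxel volume[z][y][x] with central differences.

    The gradient points towards increasing density, so it is negated to point
    out of the denser material. Returns (0, 0, 0) where the field is flat.
    """
    gx = volume[z][y][x + 1] - volume[z][y][x - 1]
    gy = volume[z][y + 1][x] - volume[z][y - 1][x]
    gz = volume[z + 1][y][x] - volume[z - 1][y][x]
    length = math.sqrt(gx * gx + gy * gy + gz * gz)
    if length == 0.0:
        return (0.0, 0.0, 0.0)
    return (-gx / length, -gy / length, -gz / length)


def diffuse(normal: Vec3, light_dir: Vec3) -> float:
    """Lambertian term max(0, N.L); light_dir is assumed normalised and pointing towards the light."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))
```

For a sense of scale, take a hypothetical 512 × 512 × 512 scan: storing one normal as three 32-bit floats per voxel would need 512³ × 12 bytes = 1.5 GiB, and even three 8-bit components would need 384 MiB on top of the CT volume itself.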

A demo would be a bit pointless, since it runs at only 10 FPS on my machine (HD 4890) and I'm not sure whether I'm allowed to distribute the CT scan. Instead, I made a YouTube video showing the renderer in action, raytracing a few different tissues of a female head scan: Link

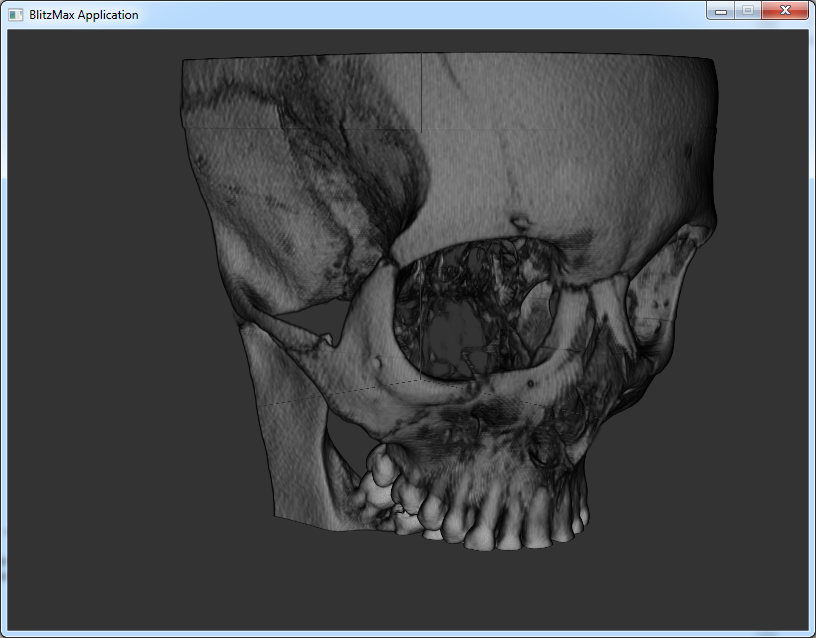

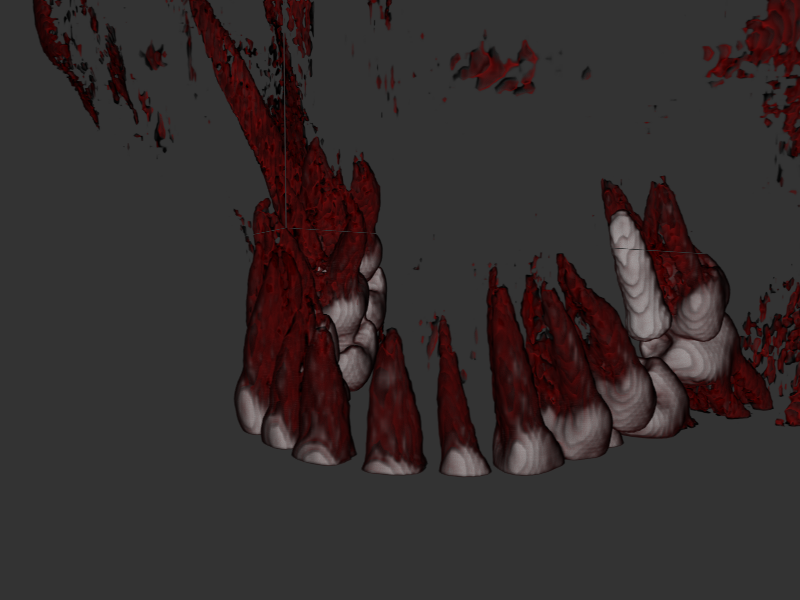

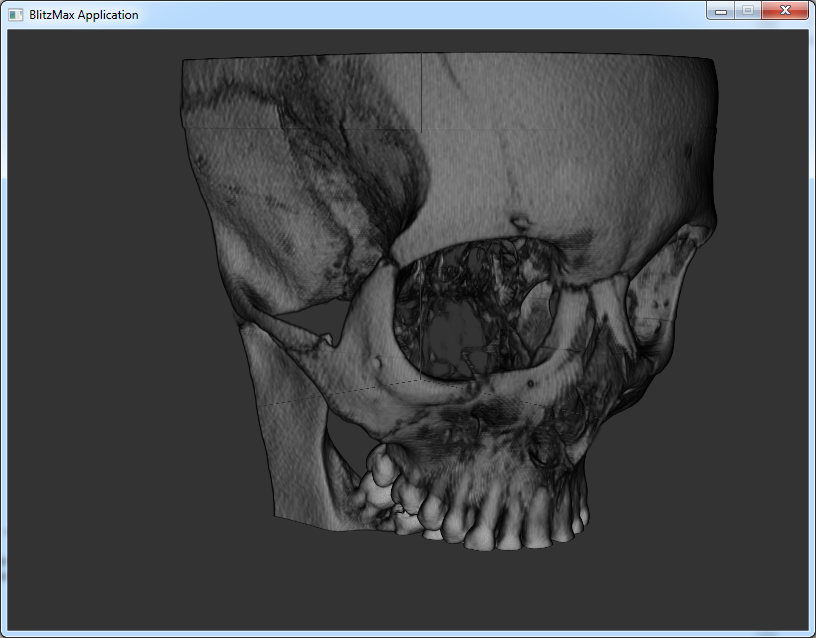

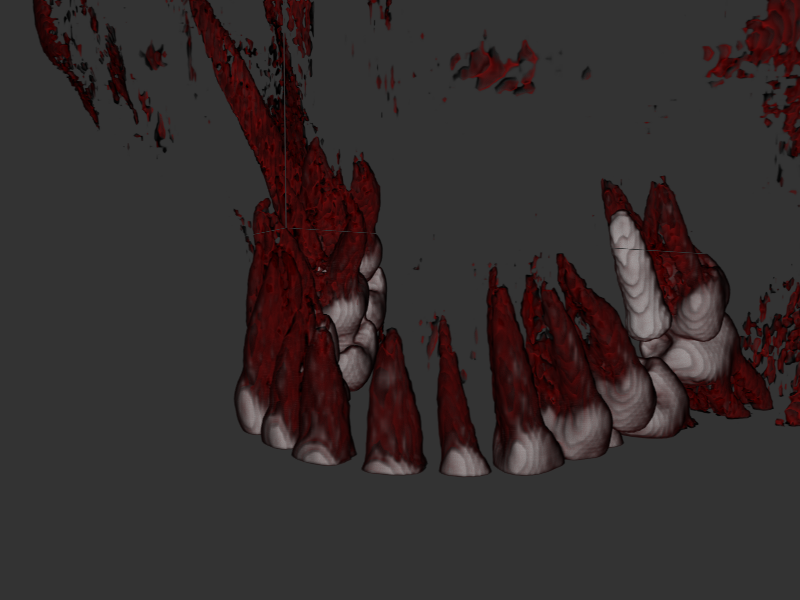

Screenshots:
