Blitzmax 3D engine + Modelisation Software



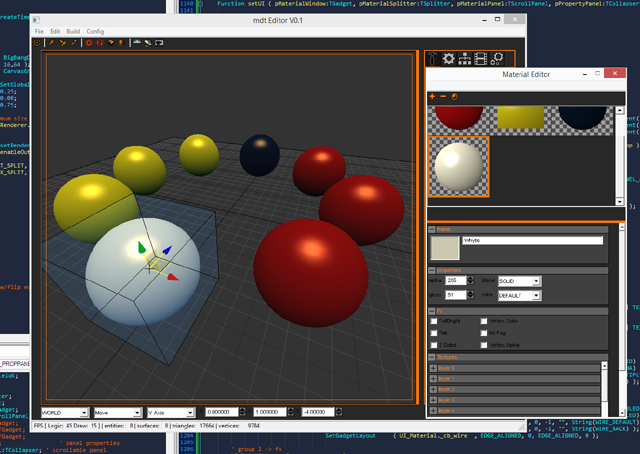

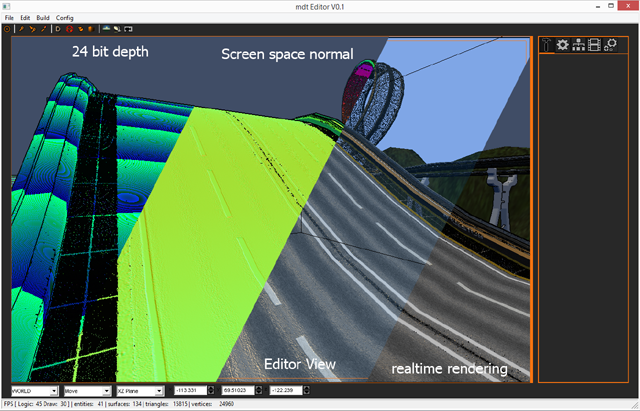

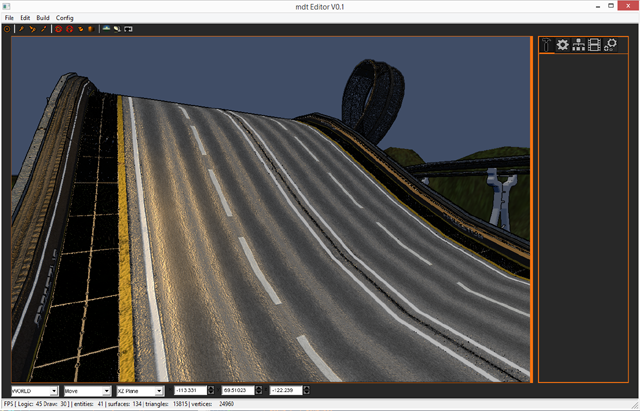

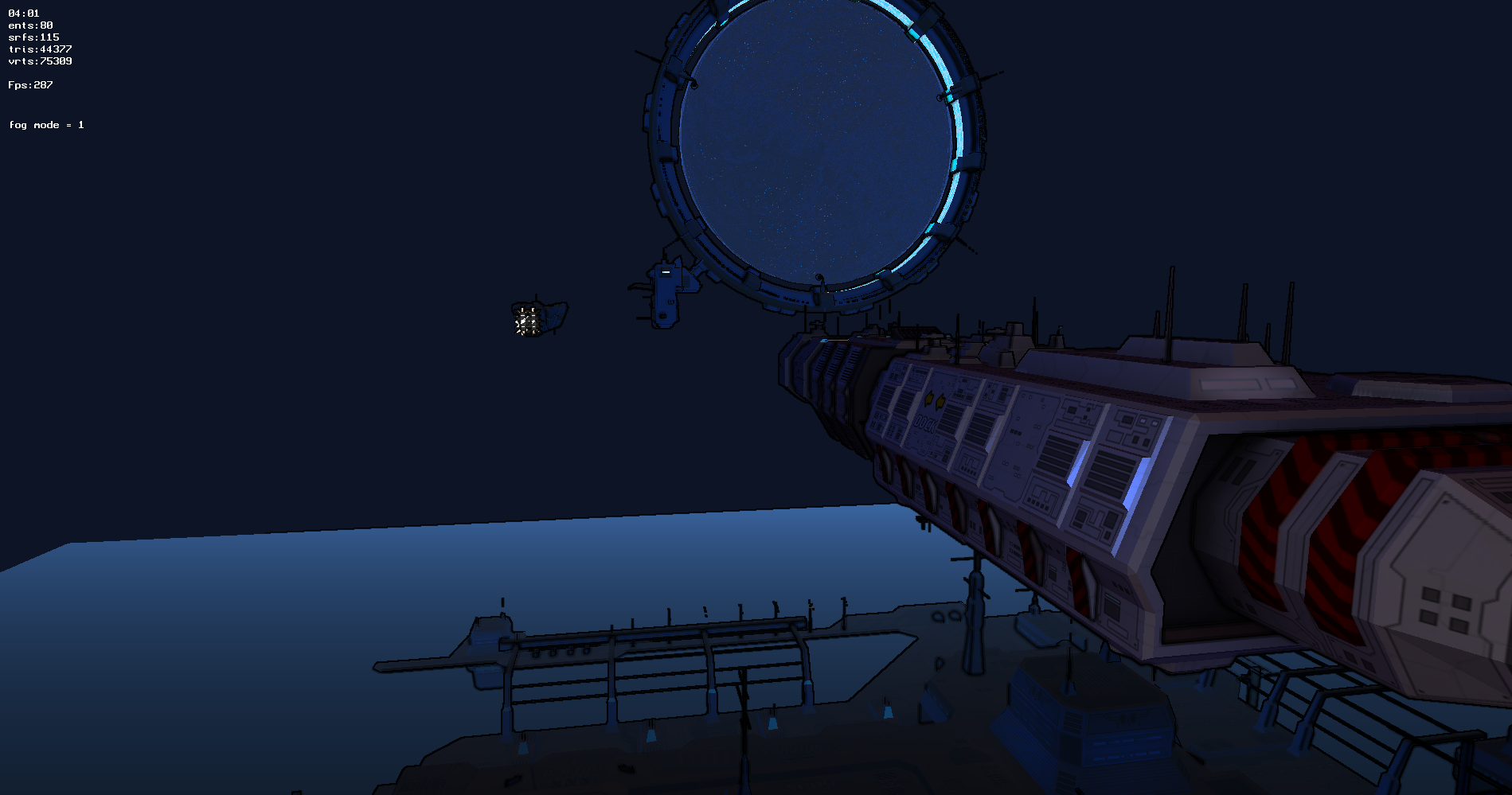

About the engine: it's a BlitzMax engine built as a standalone module.

- It uses its own versions of the glew and glgraphics modules, and is built as a real Max2D driver, so almost any 2D command works on top of the 3D (with blending, alpha, etc.).
- It won't be free, but it won't be expensive either: around $40, with lifetime updates (once you buy it, it's yours for life).
- It's still in development but nearing the end. It's currently in beta testing, while I build some demos to show off the engine and some samples to work with when it's released.

[Latest Demo in Video - Last update on 2016-10-28] Almost all of the landscape is procedurally generated.

About the modeling software (not currently in development; work will resume once the engine is released): it's a kind of 3ds Max replica with far fewer features. It's made to create b3d models/scenes without needing an expensive package (like the thousands of dollars for Discreet's software, or ...).

- supports common primitives with configurable detail (cube, sphere, torus, cone, cylinder, grid ... more to come)
- b3d import/export with support for animations and bones (it won't support any other format as long as I don't have the specs, and the most common ones, like fbx, aren't open source)
- material and texture editor
- real-time rendering mode
- normal and depth map rendering
- outline mode
- transformations: move/rotate/scale relative to the world/parent/local/screen matrix
- 3D powered by the BigBang engine (a GLSL 3.1 engine for BlitzMax), which will be distributed as a module to owners of the software

(Yep, I haven't found a name or a logo for the modeling software yet.)

The main Blitz3D features the engine doesn't support:

- per-vertex lighting (only per-pixel lights are supported)
- flat-shaded rendering (it could be done in OpenGL direct mode, but since the engine renders only through shaders, it would require splitting all the triangles to get this kind of render; it's still achievable, but unless there's a real need for it, I won't implement it)

BTW, has anybody used the flat mode? (Except for polymaniacs.)

PS: the FPS shown in the status bar isn't what you can achieve with the engine. It's only the frame-limited FPS. The editor is designed to use as little CPU as possible, and only in rendering mode does it go up to 45 FPS, just to test the scene's fluidity. In a game (without frame limiting), it's generally faster than the Blitz3D engine, although this depends on many things (like graphics card drivers). A very few features are slower in BigBang due to OpenGL restrictions, while almost all the others are faster.

Update 2016-05-01: WebGL port. An online demo is available here (ECMAScript 5; works in almost all browsers, except Safari on iPad 2). And another demo here (ECMAScript 6; not available in all browsers yet, since ECMAScript 6 is very new, but it should soon be supported everywhere).


I can't quite make out whether this is a 3D modeler or a game engine... I suppose both. Could you post more about character creation and rigging? That's what I'd want it for most. I won't have any use for the engine, since it's for BlitzMax, which is over my head. So what it would be worth to me depends on how good it is at creating and animating characters, buildings, props, etc. that I can use with Blitz3D. Off the top of my head, I might buy it in the $20 to $40 range. I'm not saying it isn't worth more than that, but my budget would preclude paying more. On a side note, it's really cool to see new programs like this once again. DOG


Congrats on the 3D modeling software, but I'm not interested in modeling software at the moment (I don't want to learn a new tool now that I'm good at using another...). However, I may be interested in using your BigBang engine in the future, if it has the same capabilities as Blitz3D and a similar syntax, and if it's stable enough and "future proof". A few questions:

- What are the advantages of using your BigBang engine compared to the Blitz3D engine? I suppose the possibility of using per-pixel lighting and shaders? (Bump mapping? Precise glow on some pixels? Other?)
- Is it compatible with most graphics cards (not too old, of course), and is it Windows-only or also compatible with Mac OS X?
- Can you briefly explain how we're supposed to use this 3D engine with BlitzMax? (How do we install it?)
- Can you provide a few code examples showing how to use it with BlitzMax? (Also to see whether the syntax is similar to Blitz3D's.)

Thanks,


> Could you post more about character creation and rigging? That's what I'd want it for most.

At the current stage, there's no dedicated tool for anything special. It's designed like 3ds Max, with the same commands to rotate/move etc. The camera has its "off-center orbit around the pivot" style, and it uses polygons instead of triangles, but it's intended to be generic. So it has everything needed to build and animate a character, but nothing specifically designed for building and animating characters... if you see what I mean. Of course, if there's demand for specific features in the future, I'll add them. << Tell me what you want, I'll teach you how to live without it >> :)

> Is it compatible with most graphics cards (not too old of course), and is it compatible with windows only or also with mac osx?

At the moment, it runs on Windows. It should be cross-platform, but since I don't own a Mac or a Linux machine, I can't test it for now. I'm currently installing Ubuntu 15 on a dual-core 2×2.5 GHz PC (a machine just old enough, with an HD 4780). The Mac version will come later, when I get a Mac :)

What's certain is that the engine uses OpenGL only, with GLSL 330. So it won't be compatible with "very old" graphics cards, but it should be compatible with more than 95% of the machines in use, according to the Steam survey for example (though I assume the Steam survey is biased and not accurate at all, since it essentially samples "gamer" machines ^^...). Most graphics cards from 2010 onward support GLSL 3.3, so only hardware that's 6 or more years old won't work. (PS: even a 2005 machine can take a GLSL 3.3-capable graphics card that can be found in any store for 20 euros... so it's not really a big deal. Those customers aren't 3D customers, or they would already have upgraded their hardware.)

The BigBang engine isn't made to run "small Blitz3D prototypes" but bigger projects.
It supports lots of entities with lots of surfaces, with bump and specular maps, and can use a space optimizer for LOD rendering (the space optimizer is released as an external module).

> What are the advantages of using your Bigbang engine compared to the Blitz3d engine?

- The first advantage is the BlitzMax language vs. the Blitz3D language.
- Almost all Blitz3D commands are wrapped in the BigBang engine, so Blitz3D code has roughly a 95% chance (a very rough estimate) of running without modification. The only addition is `Import mdt.bigbang` at the top to import the module.

I'll get back to the advantages later. Let's talk about how to install and use it:

- copy/paste the module into the mod dir
- create a new .bmx file for your project
- add `Import mdt.bigbang`
- optionally, specify the graphics driver if something needs to be done before the 3D window is created: `SetGraphicsDriver(BigBangDriver())`

That's all. What comes next is what you would have in Blitz3D. For example, this is a simple scene of a camera turning around a cube. This is the same using BigBang in BlitzMax. The main difference is the color format: I chose floats in [0.0, 1.0], while Blitz3D uses bytes in [0, 255]. The result isn't exactly the same, because BigBang uses per-pixel lighting and enables emissive, specular and ambient for each light.

What makes a big difference is the frame rate. This is a simple test scene with the same code as above, just replacing the mesh with:

| Mesh | Triangles | Blitz3D FPS | BlitzMax + BigBang FPS |
|---|---|---|---|
| simple cube | 12 | 2000 | 2000 |
| 32-segment sphere | 3968 | 1000 | 1900 |
| 64-segment sphere | 16128 | 500 | 1850 |
| 96-segment sphere | 36480 | 275 | 1850 |

So the BigBang engine doesn't suffer from large triangle counts, while Blitz3D's frame rate drops steeply as the triangle count rises.

Now, what's fun with BlitzMax is object mode. It can replace the function-style code, and it's faster to write.
This is the light setup:

    CreateLight(2).SetDiffuse(1,.8,.6).Move(20,20,-20)

And this is a TurnEntity (plus a move and a scale) in object mode:

    Local piv:BEntity = CreatePivot() ' BPivot.Create() works too
    piv.Turn(0,120,0)
    piv.Move(0,2,8)
    piv.Scale(2,1,3)

The Blitz3D code for this:

    piv = CreatePivot()
    TurnEntity(piv, 0,120,0)
    MoveEntity(piv, 0,2,8)
    ScaleEntity(piv, 2,1,3)

So there are many ways to code the same thing. It's up to the user to choose the style that fits best.

About the engine spec. Legend:

- "Blitz3D-like" = same behavior and the same (or almost the same) syntax
- `*=` same feature as Blitz3D
- `*+` addition that Blitz3D doesn't have
- `*-` not implemented (yet?)

[Material/Texture]: Blitz3D-like

- `*+` native bump map support (just use the texture blend mode TEX_BUMP or TEX_BUMPFACE; TEX_BUMPFACE multiplies the texture normal with the vertex normal)
- `*+` native specular map support (texture blend TEX_SPEC/TEX_SPECULAR)
- `*+` native displacement map support (TEX_DISPLACE, cumulative with bump as TEX_DISPLACEBUMP, which offsets the texture coords for the next layers)
- `*+` native per-pixel lighting
- `*+` frame buffer support (meshes and textures stored in VRAM, for faster access)
- `*+` render to texture using a frame buffer

[Entity System]: Blitz3D-like scene graph

- `*=` nested nodes
- `*=` quaternion rotations

[Rendering]: Blitz3D-like (but the rendered screen doesn't look exactly like Blitz3D's, because of per-pixel lighting and some specifics Blitz3D doesn't deal with)

- `*+` post-rendering effects using shaders (for outline, glow, etc.)
- the renderer can output color (normal rendering) and also render to other textures: depth map and screen-space normals (as seen in the screenshots above)
- multiple wire modes (disabled/enabled/default/backface), customizable per brush. It's not a "full-scene wireframe", but it can be one if the brush is set to default and the camera is set to wireframe(true). The backface wire mode draws front faces filled and back faces as wireframe (pretty useful for editors).

[Camera]: Blitz3D-like

- `*+` can render in different ways:
  - camera.RenderEntity(entity, true): renders the entity (and its hierarchy, if recursive=true) from a single camera (you'd use this for shadows, for example)
  - camera.RenderWorld(): renders everything for a single camera
  - RenderWorld(): renders everything with every camera
  - create and render render lists: you can manually set what you want to render (along with the space optimizer, this is the best way to render very large scenes with lots of models)

[Mesh/Surface]: Blitz3D-like

- `*+` all surface properties are independent and sharable! You can share the vertex coords with another surface and have a specific vertex normal array for each surface. So you can copy entities (which will share memory for everything) and specify a UV set for the second layer, so the whole scene can be lightmapped without duplicating meshes and surfaces.

[Collision/Picking]: Blitz3D-like

- `*+` you can create your own picking method by extending the default PickingDetector, CollisionMethod and/or CollisionResponse classes (the callbacks used to check and validate a collision, and to decide what happens when it occurs)

So everything you need to create a "real" physics engine without too much effort is there, since the main structure already exists. I haven't included a physics engine because the more generic it is, the slower it runs. With all the tools to build one, it'll fit your scene better if you create what you really need. Of course, the engine's built-in collisions already work like Blitz3D's (I haven't benchmarked them, but I managed a big scene with thousands of entities moving with collisions at a very decent FPS).

[Animations]: Blitz3D-like

- `*+` with editable/sharable keymaps
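Porting colors from Blitz3D mostly means dividing by 255, since BigBang takes floats in [0.0, 1.0] where Blitz3D takes bytes in [0, 255]. For example, a Blitz3D `LightColor light, 255, 204, 153` becomes the `SetDiffuse(1,.8,.6)` values from the light setup above, assuming `SetDiffuse` plays the role of `LightColor`. Below is a minimal Python sketch of that conversion. The helper names are hypothetical and are not part of either engine.

```python
def byte_to_unit(value: int) -> float:
    """Map a Blitz3D-style color byte (0..255) to a float in [0.0, 1.0]."""
    if not 0 <= value <= 255:
        raise ValueError(f"color byte out of range: {value}")
    return value / 255.0


def unit_to_byte(value: float) -> int:
    """Map a float color back to the nearest byte, clamping to [0.0, 1.0] first."""
    clamped = min(max(value, 0.0), 1.0)
    return round(clamped * 255)


def rgb_bytes_to_unit(r: int, g: int, b: int) -> tuple[float, float, float]:
    """Convert a whole (r, g, b) byte triple, e.g. LightColor arguments."""
    return (byte_to_unit(r), byte_to_unit(g), byte_to_unit(b))


if __name__ == "__main__":
    # Blitz3D-style LightColor 255, 204, 153 -> float diffuse color
    print(rgb_bytes_to_unit(255, 204, 153))  # (1.0, 0.8, 0.6)
```

Clamping in `unit_to_byte` keeps overbright float colors (which a float pipeline can carry) from wrapping when converted back to bytes.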
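As a sanity check on the benchmark figures above, the sphere triangle counts (3968, 16128 and 36480 for 32, 64 and 96 segments) all fit `4 * s * (s - 1)` for `s` segments. That is the count for a sphere with `2s` slices around and `s` stacks, where the two cap stacks are triangle fans and every other stack is a band of quads. This layout is inferred from the listed numbers, not taken from either engine's source:

```python
def sphere_triangles(segments: int) -> int:
    """Triangle count of a UV sphere with 2*segments slices and `segments` stacks.

    The top and bottom stacks are triangle fans (2*segments triangles each);
    the other segments-2 stacks are bands of 2*segments quads, 2 triangles per quad.
    """
    if segments < 2:
        raise ValueError("need at least 2 segments")
    slices = 2 * segments
    caps = 2 * slices
    bands = (segments - 2) * slices * 2
    return caps + bands  # simplifies to 4 * segments * (segments - 1)


if __name__ == "__main__":
    for s in (32, 64, 96):
        print(s, sphere_triangles(s))  # 3968, 16128, 36480
```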
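The "independent and sharable" surface properties can be pictured as surfaces holding references to separate attribute arrays. Two surfaces can then share positions and normals while one of them owns its own second UV set for lightmapping. The Python sketch below models only that idea. The `Surface` class and its fields are hypothetical and say nothing about BigBang's actual data layout.

```python
from dataclasses import dataclass, field


@dataclass
class Surface:
    """Hypothetical surface: each vertex attribute is its own, possibly shared, list."""
    positions: list[tuple[float, float, float]]
    normals: list[tuple[float, float, float]]
    uv1: list[tuple[float, float]]
    uv2: list[tuple[float, float]] = field(default_factory=list)

    def copy_sharing(self) -> "Surface":
        """Return a copy that references (does not duplicate) every attribute list."""
        return Surface(self.positions, self.normals, self.uv1, self.uv2)


if __name__ == "__main__":
    base = Surface(
        positions=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
        normals=[(0.0, 0.0, 1.0)] * 3,
        uv1=[(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)],
    )
    instance = base.copy_sharing()
    # Rebinding uv2 gives only the copy its own lightmap UVs; everything else stays shared.
    instance.uv2 = [(0.5, 0.5), (0.75, 0.5), (0.5, 0.75)]
    print(instance.positions is base.positions, base.uv2)  # True []
```

Note that rebinding `instance.uv2` is what separates the copy. Appending to the shared list instead would change it for every surface that references it, which is the trade-off that comes with sharing.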


This sounded great, Bobysait, until I read this part ;)

> BTW, is there anybody who used the flat mode? (except for polymaniacs)

It would be interesting to know what's missing from the B3D command set, to know how much effort converting might take.


Yep, sorry about that, no offense. I think you're the only one I know who has a use for flat-shaded polygons :)

GLSL doesn't allow the OpenGL direct mode that enables flat shading: normals are computed in the shader, not in the OpenGL states. So integrating a flat mode would require "exploding" the triangles, i.e. using 3 vertices per triangle and then setting the vertex normals to the triangle normal (the engine already has everything needed to do this). It's possible, but not optimized at all, since it drastically increases the vertex count. Then again, since the engine can handle a massive amount of vertices, that's not such a big deal either...

Another way to do it (which would require a small modification to the engine source, but would probably be the best way) would be to use dFdx/dFdy, which should be present in early GLSL versions, to override the vertex normal array in the shader when flat mode is specified. It shouldn't be too hard to add; I think I'll take a look this evening.

PS: @Doggie

> I can't quite make out if this is a 3d modeler or a game engine... I suppose both.

BigBang is the 3D engine for BlitzMax (a module to import: `Import mdt.bigbang`). The software is a modeling tool made with BlitzMax that uses the BigBang engine to render the 3D. You don't need the tool to create 3D with the module, and you don't need the module to use the tool: they're 2 separate products. But since 3D engines need nicely polished models to make beautiful games, it's better to have a fully compatible pack :) Everything the tool supports, the engine can do, i.e. WYSIWYG (What You See Is What You Get).

Consider what happens when using 3ds Max + Blitz3D: most of what you create in 3ds Max can't be used in Blitz3D, and only the basic features carry over (materials with colors and textures, skinned meshes with bones, etc.). What you see in 3ds Max is far from what you get when loading the model in Blitz3D.
The difference between the two makes the workflow really nasty, and testing colors, camera depth and so on becomes a big waste of time. Here, by contrast, the tool + the engine is the best way to be sure that what you export will be 100% compatible with your game and will look exactly like what you modeled.

About the price: it will probably depend on how many people are ready to pay for it. The more potential users, the lower the price. I'll probably start at around 70 euros ($85 or so?) for the pack, or 40 euros (around $45/50) per product, so you save a bit by buying the 2 at once. But as I said, if there are more users, I'll lower the price accordingly.

Of course, each product works standalone. The modeling tool will produce b3d for Blitz3D, Irrlicht or any other engine that supports b3d. The BlitzMax engine can use standard b3d files coming from Fragmotion, UUnwrap3D, 3ds Max (+ B3D Pipeline, if you have an old Windows XP to run the old 32-bit 3ds Max version :/), etc. It can also work without b3d, as Blitz3D does, and build everything procedurally. But that's obvious.
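The "explode the triangles" approach described above can be sketched independently of any engine. Give every triangle its own three vertices, and set all three normals to the face normal (the normalized cross product of two edges). Below is a minimal Python sketch. It assumes counter-clockwise winding for front faces, which is a convention assumption (swap the cross product's operands for clockwise meshes). The shader-side dFdx/dFdy alternative is not shown.

```python
import math

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if length == 0.0:
        return (0.0, 0.0, 0.0)  # degenerate triangle: no defined normal
    return (v[0] / length, v[1] / length, v[2] / length)


def explode_flat(vertices: list[Vec3], triangles: list[tuple[int, int, int]]):
    """Unweld an indexed mesh so each triangle owns 3 vertices carrying the face normal.

    Returns (positions, normals, indices); the vertex count becomes 3 * len(triangles).
    """
    positions: list[Vec3] = []
    normals: list[Vec3] = []
    indices: list[tuple[int, int, int]] = []
    for i0, i1, i2 in triangles:
        p0, p1, p2 = vertices[i0], vertices[i1], vertices[i2]
        n = normalize(cross(sub(p1, p0), sub(p2, p0)))
        base = len(positions)
        positions.extend((p0, p1, p2))
        normals.extend((n, n, n))
        indices.append((base, base + 1, base + 2))
    return positions, normals, indices


if __name__ == "__main__":
    # A unit quad in the XY plane: 4 shared vertices, 2 triangles.
    quad = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
    pos, nrm, idx = explode_flat(quad, [(0, 1, 2), (0, 2, 3)])
    print(len(pos), nrm[0], idx)  # 6 (0.0, 0.0, 1.0) [(0, 1, 2), (3, 4, 5)]
```

The quad goes from 4 to 6 vertices. That growth factor is the vertex-count cost mentioned above, and it matches Stevie G's note that B3D flat shading already needs unwelded triangles.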


> So, to integrate a flat mode, it would require to "explode" the triangles, ie: 3 vertices per triangle, then set the vertex normals to the triangle normal. (there is already all the stuff in the engine to make this), it's possible, but not optimized at all since it increase drastically the vertex count [...]

In order to get proper flat shading in B3D, you need to unweld all the triangles and calculate per-triangle normals anyway. All my models are set up this way. I'm pretty sure I don't even use FX 4 (flat shading) at all, so it will probably work as expected. I'll wait to see what the finished command set will be, but this looks like excellent work.


@Stevie G: I didn't mention it, but you can also texture the mesh with a bump map, which will either replace the vertex normal or multiply it. (In multiply mode, with a flat-shaded-style normal map, it could actually look pretty nice.)


Will it do shadows or post-processing effects?


Also, do you include ready-to-use shaders or code examples for effects like per-pixel lighting, per-pixel shadows, bump mapping, per-pixel glow, or reflections (cube mapping?)? You also haven't talked about terrains; can you please give us some info? And about support: do you plan to provide it long term, even if you're not paid? This is a big issue with many game engines. The creator either disappears, or lacks the skills to fix bugs/compatibility problems, or loses the willingness to do so after some time (look at what happened with Xors3D or Nuclear Basic...).


A good point from RemiD. If it were me, I would make sure my users were supported and happy, because I've been let down in the past myself.


+2. Nonetheless, I'm still quite excited by this announcement. I've been getting the programming itch again lately.


@KronosUK

Shadows are not included for a simple reason: for me, shadows are like a physics engine. They're third-party stuff that can't be generic enough to work with any scene, and they won't be efficient unless adapted to the game/application. But I will provide a library (for free) as an extension to the 3D module to generate real-time shadows (maybe cascaded shadows). There's already an SDK for creating post-processing effects (like the outline in the screenshots above, which is a full-screen post-process).

@RemiD: per-pixel lighting needs nothing extra; it's built into the engine as the replacement for standard lighting. Just create a light (as you would in Blitz3D). When rendering (using RenderWorld, RenderEntity, etc.), the lighting is computed in the fragment shader, so there's no hard work to get it working :) For bump mapping, I've made samples for some scenes. Just know that bump mapping isn't hard to do with the engine (nor is the specular map):

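    ' normal map, blended as a per-face bump map
    Local NormalTex = LoadTexture("My Normal Texture File.png")
    TextureBlend NormalTex, BLEND_BUMPFACE

    ' specular map, used for shininess
    Local SpecTex = LoadTexture("My Specular Texture File.png")
    TextureBlend SpecTex, BLEND_SPECULAR

    ' normal map on layer 0, specular map on layer 1
    EntityTexture MyEntity, NormalTex, 0
    EntityTexture MyEntity, SpecTex, 1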

Then render the scene: the lighting will be bump-mapped, and the specular map will be used for shininess.

> Also you have not talked about terrains, can you please give us some infos?

OK, that's the deal of the moment: I haven't made a terrain engine. I've never used terrains in Blitz3D except in some demos... but I will! I already have an idea of the technology I'm going to use. More info to come...

> Also about the support, do you plan to do it long term, even if you are not paid? This is a big issue with many game engines, where the creator either disappear or simply has not the skills to fix the bugs/compatibility problems or the willingness to do it after some time (take a look at what happened with xors3d or nuclear basic...)

This is the engine I use. It has already fully replaced the Blitz3D engine on my computer, so yes, it's a long-term engine. I'll push it to the limit until GLSL 3.3 becomes obsolete the way DirectX 7 engines are now, and at that point I'll update it to keep up with new technologies. It started as an Android engine I made (since Android 4 and higher only supports shader-based rendering, I had to create a shader-based engine). I ported it to BlitzMax, and I must admit I've had so much fun with it that I've left the Android version aside (I'll publish it when I figure out how to create Android projects with BMX-NG). All in all, I've been working on it for 4 years already. It's been in beta for half a year, and I'm still adding stuff to make it more and more complete...

PS: I'm curious why nobody asked something like "Why use your engine instead of MiniB3D?" Happy I don't have to answer that :)
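Per-pixel bump lighting boils down to reading a normal from the normal map instead of interpolating the vertex normal, and then using that normal in the lighting equation. BigBang's shaders aren't shown in this thread, so this is only a generic Python sketch of the usual steps:

1. Decode an 8-bit RGB texel from [0, 255] to a vector in [-1, 1].
2. Normalize it.
3. Compute the Lambert diffuse factor max(0, N·L).

The sketch assumes the normal and the light direction are already in the same space. A real shader typically gets there with a tangent-space (TBN) matrix.

```python
import math


def decode_normal(r: int, g: int, b: int) -> tuple[float, float, float]:
    """Decode an 8-bit normal-map texel: each channel maps [0, 255] to [-1, 1]."""
    x, y, z = (c / 255.0 * 2.0 - 1.0 for c in (r, g, b))
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)


def lambert(normal, light_dir) -> float:
    """Diffuse factor max(0, N.L); light_dir points from the surface toward the light."""
    lx, ly, lz = light_dir
    light_len = math.sqrt(lx * lx + ly * ly + lz * lz)
    dot = (normal[0] * lx + normal[1] * ly + normal[2] * lz) / light_len
    return max(0.0, dot)


if __name__ == "__main__":
    flat = decode_normal(128, 128, 255)  # the typical "flat" normal-map color
    print(round(lambert(flat, (0.0, 0.0, 1.0)), 3))  # 1.0
    tilted = decode_normal(255, 128, 128)  # normal tilted toward +X
    print(round(lambert(tilted, (0.0, 0.0, 1.0)), 3))  # 0.004
```

A specular map would then simply scale the specular term per pixel, in the same way that the normal map replaces the normal per pixel.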


Thank you for your answer. I can live without a dedicated terrain function as long as I can build my own meshes through the engine (CreateMesh/CreateSurface etc.). Hopefully you can/will release a list of the full command set at some point.


> This is the engine I use, it has already fully replaced the blitz3d engine on my computer, so yep, it's a long term engine

This is a good thing.

> I'm curious to know why nobody asked something like "Why using your engine instead of minib3d?"

The answer for me is simple: I'm ready to pay a reasonable fee so I don't have to think about fixing bugs/compatibility problems. Time you spend doing one thing is time you can't spend doing another. MiniB3D seems functional, and OpenB3D seems functional too, but the programmers who currently fix/improve those engines may not be around forever, and I don't really want to do it myself. So if somebody else can, good for me. Also, I know that you've used Blitz3D since at least 2005 (if I remember correctly) and that you're skilled. You still seem to enjoy making video games after many years, when others have stopped and disappeared, so I think this is a good sign. My suggestion would be to provide a free, time-limited trial so that we can try it (but limit the time an executable can run to 5 minutes or less, so that it encourages users to buy it). As you wish.


The command list will be on the site mdt.bigbang.free.fr (for now, until I migrate to a "real" server) as the online documentation. For now, it would be much quicker to list the unsupported commands :) BTW, I didn't mention it: it works perfectly with Max2D, since the BigBangDriver is an extension of TMax2DDriver with all the overridden methods working. It also works perfectly on a MaxGUI canvas (as you can see in the modeling tool screenshots), and it uses shaders to render the 2D as well. I haven't benchmarked the 2D module, but it's pretty fast too. Maybe I should compare it to the GLMax2D one; there could be some surprises.


I think you've done a good job. I won't use it, since I don't use BlitzMax yet and I'm not as involved in 3D gamedev as I used to be, but it looks good.


Nice! I could be interested too if the syntax is close to Blitz3D's. Did you implement mesh creation commands like Blitz3D's? About the modeling software: does it handle polygon extrusion, vertex moves and smoothing groups like 3ds Max? Also, will you provide a limited demo version so we can test it?


mmm... 1/ Yes 2/ Yes 3/ Yes :)


Quite interesting, especially if it works with Max2D. Can 2D be rendered "behind" the 3D?


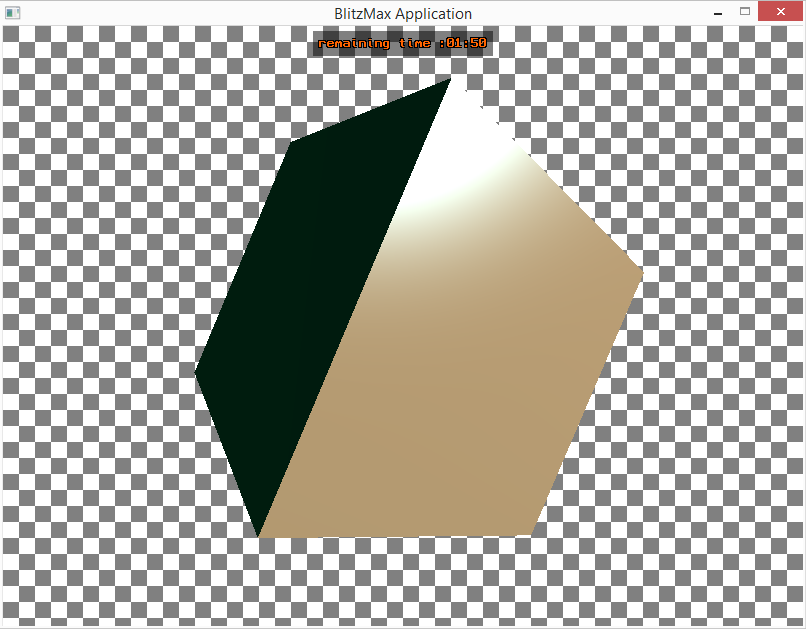

What do you mean by "behind the 3D"? This is what it produces (see the image above).


Hi, is this a video game engine? Sorry, my English is very bad and the translator doesn't help much.


Yes, a game engine based on Blitz3D, but for BlitzMax.


It's not a "game" engine but a "graphics" engine: it provides an SDK for creating and rendering 3D. Game engines are more "high level" and are built on top of 3D engines. BigBang is more like MiniB3D. You can use it to create a game engine, as you would with Blitz3D, and then a game.

Just imagine the layers, from low level to high level:

- Graphics hardware
- 3D API (OpenGL/DirectX): provides interfaces to manage the hardware
- Graphics engine: provides interfaces to create complex things, like converting data into models/textures, and handles the rendering
- Game engine: provides interfaces for the game developer to create specific things like AI behaviors, physics, etc. Most of what passes through it afterwards is data such as scripted code / XML / Lua, etc.
- Game: provides interfaces to the user (the gamer). It feeds the game engine with data like world maps / XML / user interface, etc., so the player... can play

BigBang is the 3D graphics engine. The modeling software, on the other hand, is a modeling tool, not an engine: it helps create models. It's not part of the BigBang module; it just makes the package more complete. So the whole pack (module + tool) can look like a "suite" for creating a game engine :)


..interesting movement in the BMX 3D area.. I like it.. so, when will BigBang be available for some tests?


A demo will come this week, so you can try something made with the engine and test whether it works for everyone (everyone who meets the minimum configuration). In the meantime, I'm writing the documentation for the engine. When it's done, I'll compile the sources for Windows with some restrictions. (Mac will come later, since I don't own a Mac for the moment, and the Linux version will come as soon as I've installed it.)

The restrictions will be:

1. A short cinematic showing "powered by BigBang" (probably with a "demo" mention; it should be 5 to 10 seconds max) whenever you set the graphics mode, and then nothing else (I hate watermarks). The cinematic will appear only in Release mode. Debug mode won't show it unless you ask for it, because when we're coding something, even with a demo version, we don't want to see a logo again and again and again...
2. When you run a program, a counter/timer in RenderWorld will count up to 120 seconds and/or 120000 loops, maybe less. Once either counter reaches its max, RenderWorld will be skipped. So you can test your stuff for 2 minutes, and then you'll have to do without the 3D.

I think the demo version could be released this week as a compiled binary module (without sources). The engine will go on sale once I've finished all the extra stuff and made any modifications requested while testing the demo version (a kind of "beta test"). The release date will be announced as soon as:

- the doc is complete
- samples are available for the extra stuff
- the support site is ready (for bug reports etc.)
- the payment method is validated (I'm thinking about PayPal)

---

Then, since the modeling software doesn't seem to be the main point of interest, I'll wait a bit on it.
I think I'll just make a pack for the 2 products when they're both ready for release. The license will work like this:

- buy either product (the 3D engine module and/or the software)
- buy the other later: you'll get it at a reduced price (if you still have a valid license key for the first product)

So people who buy the engine while the software isn't yet for sale won't regret it.

---

Now, enough talk about money; let's talk about the timeline:

- For now, I'm working on the doc and a demo (not very pretty, since I'm not a very good designer, but it shows all the possible features).
- Next week (somewhere between the 15th and the 20th of February), I'll release the closed-source compiled module of the restricted demo (it doesn't sound great put like that :D).
- Over the following two weeks, I'll set up the payment site and everything else required for a "real" release version of the module.

From the demo release to the actual release, it will be beta-test time. So you're invited to test it as hardcore as you can ;)
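The second demo restriction is a two-counter cutoff: rendering stops as soon as either the elapsed time or the frame count reaches its limit. Below is a hedged Python sketch of that logic only. The class and its names are hypothetical. The defaults use the 120 seconds / 120000 loops figures announced here, which the post says may end up lower. The clock is injectable so the behavior can be checked without waiting two minutes.

```python
import time


class DemoRenderLimiter:
    """Hypothetical demo guard: allow rendering until a time OR a frame limit is hit."""

    def __init__(self, max_seconds: float = 120.0, max_frames: int = 120_000,
                 clock=time.monotonic):
        self.max_seconds = max_seconds
        self.max_frames = max_frames
        self.clock = clock
        self.start = clock()
        self.frames = 0

    def should_render(self) -> bool:
        """Count this frame and report whether the 3D should still be drawn."""
        if self.frames >= self.max_frames:
            return False
        if self.clock() - self.start >= self.max_seconds:
            return False
        self.frames += 1
        return True


if __name__ == "__main__":
    # Frozen clock: only the frame limit can trigger.
    frozen = DemoRenderLimiter(max_frames=5, clock=lambda: 0.0)
    print([frozen.should_render() for _ in range(7)])
    # [True, True, True, True, True, False, False]
```

A monotonic clock is used for the time limit so that changing the system time can't reset or extend the demo window.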


@Bobysait>> I can provide fully functional, recent code for PayPal. It receives the IPN, makes sure the IPN is valid, and analyzes it. Then, depending on the transaction state and on whether the data is valid and not a duplicate, it automatically sends an email to the seller (with info about the transaction) and to the buyer (with a receipt + instructions to download and use the digital product). I'm ready to trade it for a reduction (for me) on the price of your 3D engine :D Let me know if you're interested (my email address is in my BlitzBasic profile).


I sent you an email. See you on Facebook; we'll talk about this there.


@Bobysait>> I don't use Facebook. We can use Skype to talk about this; I sent you an email with my Skype username...


@RemiD -> I don't use Skype... I use Facebook ^_^


:)


> Then, as the modelisation software doesn't seem to be the point of interest, I will wait a bit for it.

For me it's almost as interesting as the engine, so your idea of buying one product after the other at no extra cost compared to the full pack is fine. I learned 3D with 3ds Max, but I don't own a license. I tried to switch to Blender, but I find it counterintuitive and can't do anything with it. So an alternative to 3ds Max would be nice, since I don't need all the advanced functionality, just the modeling tools (OK, CSG would be nice to have, but I guess it's not on your to-do list :). Will it be possible to export a model to .3ds or .obj? And is there a UV mapper? Anyway, I can't wait to test the engine demo.


Bobysait, what did you use to write the modeling program?


@Flanker: There will be a UV mapper, but at the moment it's still at a very early stage. Coordinates can be edited by hand, one vertex at a time... boring and slow :) I'll add multi-selection and some functions like scale, rotate, etc.

As for formats, there's only b3d import/export, plus the internal format, which uses polygons instead of triangles (for surface extrusion and smoothing normals). I'll add some more formats (like obj and 3ds) later. Since they aren't fully supported by Blitz3D, 3ds, obj, etc. are just convenience formats for importing old stuff from other software that doesn't support b3d. Once your models are in Cosmos (oh yeah, that's the modeling tool's name for the moment...), you'll only deal with b3d. So, to be honest, handling other formats isn't in my plans.

As for CSG, boolean operations could be added, but I don't know of any software that doesn't make a hell of a mess of them. So, like the rest, it will come in a later upgrade/update.

@Stevie Elliott: the whole software is made with BlitzMax: MaxGUI + the 3D engine + some extra BlitzMax stuff of my own.
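Since the tool's internal format stores polygons while b3d stores triangles, exporting implies a triangulation step. How Cosmos actually does it isn't stated. A common minimal approach for convex polygons is a triangle fan from the first vertex, sketched here in Python with hypothetical helper names. A fan is not correct for concave polygons, which need a method such as ear clipping.

```python
def fan_triangulate(polygon: list[int]) -> list[tuple[int, int, int]]:
    """Split a convex polygon (vertex indices in winding order) into a triangle fan.

    An n-sided polygon yields n - 2 triangles that keep the original winding.
    """
    if len(polygon) < 3:
        raise ValueError("a polygon needs at least 3 vertices")
    first = polygon[0]
    return [(first, polygon[i], polygon[i + 1]) for i in range(1, len(polygon) - 1)]


def triangulate_mesh(polygons: list[list[int]]) -> list[tuple[int, int, int]]:
    """Triangulate every polygon of a mesh, e.g. before writing a triangle-only format."""
    triangles: list[tuple[int, int, int]] = []
    for poly in polygons:
        triangles.extend(fan_triangulate(poly))
    return triangles


if __name__ == "__main__":
    quad_and_pentagon = [[0, 1, 2, 3], [4, 5, 6, 7, 8]]
    print(triangulate_mesh(quad_and_pentagon))
    # [(0, 1, 2), (0, 2, 3), (4, 5, 6), (4, 6, 7), (4, 7, 8)]
```

Keeping polygons internally and triangulating only on export means that operations like extrusion still see whole faces, which is why an editor would keep the two representations separate.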


Boby, I meant rendering a 2D background (bitmap), then rendering a 3D scene over it, and of course another 2D overlay on top.


@Bobysait>> Can you briefly explain how we can draw 2D stuff (text, plots, lines, rects, images) using BlitzMax + your 3D engine? And can we use different buffers like in Blitz3D? For example: select a buffer (ImageBuffer, TextureBuffer, BackBuffer), draw only on that buffer, then use CopyRect to copy an area of one buffer to another (as in Blitz3D), and finally display the result on screen?


..this is very exciting.. so, the source code for the 3D renderer part won't be available? I'm asking simply in case official support is no longer available (it has happened many times before)..

| ||

| Just a bit of remixing in the code and the engine should be ready for a test release in demo mode: 2 minutes of rendering max, or 7200 loops (60 loops per second for 120 seconds). @Pingus: Mixing 2D, images or anything else works the same way. Here is a sample with an image drawn before the RenderWorld (a TImage object as background) and some 2D drawn after it (a rect and some outlined text). As mentioned, you just need to turn off color clearing in the camera's cls mode: it works the same as in Blitz3D, so you can mix 2D and 3D if you disable the color clear with CameraClsMode(camera, false, true). This is how it looks. @RemiD: Just like you would do it with Blitz3D, but with the Max2D commands: you use DrawImage/DrawRect/DrawPoly etc. before or after the RenderWorld (depending on the color cls mode).
Quote: "And can we use different buffers like in Blitz3D? For example, select a buffer (ImageBuffer, TextureBuffer, BackBuffer), draw only on this buffer, then use CopyRect to copy an area of one buffer to another (like in Blitz3D), and then display the result on screen?"
Actually, buffers in BigBang don't really work like Blitz3D's. As the technology is not the same, the behavior isn't either. First, don't expect to CopyRect to an image with BlitzMax; I don't know of anything that allows that (not with direct functions). You can copy pixels from the image's pixmap, but it's slow and not accurate. All this stuff is pixel manipulation: as the engine lets you get the pixmap of a texture and of a framebuffer, you can do what you want with the pixmap. Just to note: I don't think it will be fast. Anyway, the engine can render to a buffer directly, but a buffer is not a texture; it contains a texture (which you can access via getters). I will provide both a sample and a wrapper to simplify this, as at the moment it's not fully "user-friendly".
For example, this is a how-to for rendering to an offscreen buffer and texturing a cube with the result. Note that the BufferTexture function has not been wrapped for the moment, so it needs to be accessed in object mode:
BufferTexture:BTexture(Buffer:BBuffer) > Buffer.getTexture(GL_COLOR_ATTACHMENT0);
It's not "that" awful, but it's not the most user-friendly way :) That's how it looks. |

| ||

| Also, I would suggest making it a 5-minute limit... 2 minutes is somewhat too short. |

| ||

| 5 minutes shouldn't be a problem ;) |

| ||

| Old-style shadow map: render the scene from above with fog enabled, then texture the plane with the resulting texture (this is definitely not the right way to make shadows, but... it still works for a simple scene). |

| ||

| About buffers: if it's not like Blitz3D, is it at least possible to write/read a texture fast enough (like with Blitz3D's buffer system) and to CopyRect a part (or the whole) of a texture (or of what is displayed on screen after RenderWorld) to another texture? This can be useful to create procedural textures for screens, windows, mirrors, premade cubemaps... (About your shadows: the light must not go through the casters... and it seems that the shadows are not applied on other casters...) |

| ||

| The shadows are only projected on the plane, so it's anything but accurate :) I was just trying to show a fast render-to-texture sample.
@About buffers: As I said, it's not the same technology. With the engine, you can set the texture of the buffer being rendered. So whatever you want to write to a texture, you can:
1/ use the texture's pixmap
Local pix = Texture.lock()
' -> do what you want with the pixmap, like you would with LockImage()
' [...]
Texture.unlock() ' to refresh the texture from the pixmap
2/ render directly what you want
' - initialization -
' > Create or load a texture (any texture)
Local mytex:BTexture = LoadTexture(file) ' or CreateTexture(width, height ...
' > Create a buffer
myBuffer = create3Dbuffer(width, height, mytex)
' (or use the existing one, if you're working with the multi-renderer)
' myBuffer = MultiRenderer().renderBuffer()
Repeat
	' [...]
	' - rendering -
	' > before rendering, set the buffer to use
	BRenderer.set(myBuffer)
	' > render the stuff to be drawn on the texture
	RenderWorld()
	' [...]
The texture is now updated with what the camera sees. (The syntax is sort of like Blitz3D's SetBuffer, but it's absolutely not the same thing, since SetBuffer does not work for render-to-texture in the original Blitz3D.)
Then I repeat: if you want to CopyRect, use the pixmap, probably with something like GrabPixmap, or use per-pixel copy/write. BlitzMax has no "CopyRect" function, but you can easily make your own. |
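The "make your own CopyRect" idea can be sketched as follows: treat each pixmap as a flat array of packed pixels, clip the rectangle against both buffers, then copy it row by row. The sketch is in Python for brevity. `Pixmap` and `copy_rect` are hypothetical names, not part of BigBang or BlitzMax. In BlitzMax the same loop would read and write through the pixmap returned by `Texture.lock()`, for example with ReadPixel/WritePixel.

```python
class Pixmap:
    """Minimal stand-in for a pixel buffer: width * height packed 32-bit ARGB ints."""

    def __init__(self, width, height, fill=0):
        self.width = width
        self.height = height
        self.pixels = [fill] * (width * height)

    def read(self, x, y):
        return self.pixels[y * self.width + x]

    def write(self, x, y, argb):
        self.pixels[y * self.width + x] = argb


def copy_rect(src, sx, sy, w, h, dst, dx, dy):
    """Copy a w*h block from src at (sx, sy) to dst at (dx, dy).

    The rectangle is clipped against both buffers.
    Returns the number of pixels actually copied.
    """
    # Clip the left/top edges: move both start points and shrink the block.
    if sx < 0:
        w, dx, sx = w + sx, dx - sx, 0
    if sy < 0:
        h, dy, sy = h + sy, dy - sy, 0
    if dx < 0:
        w, sx, dx = w + dx, sx - dx, 0
    if dy < 0:
        h, sy, dy = h + dy, sy - dy, 0
    # Clip the right/bottom edges against both buffers.
    w = min(w, src.width - sx, dst.width - dx)
    h = min(h, src.height - sy, dst.height - dy)
    if w <= 0 or h <= 0:
        return 0
    for row in range(h):
        s = (sy + row) * src.width + sx
        d = (dy + row) * dst.width + dx
        # One slice assignment copies a whole row of the block.
        dst.pixels[d:d + w] = src.pixels[s:s + w]
    return w * h
```

A per-row copy like this is about as fast as pixel-level copying gets, but it is still CPU work. This matches the warning above that it won't be fast compared with rendering straight into a buffer.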

| ||

| Unoptimized shadow map using depth comparison: it requires 2 renders (one in light space, one in camera space). Here the shadow map resolution is 2048*2048 for a 1920*1200 screen, and it runs at 250 fps, but the scene is not complex. Also, the bias used is far from perfect; we can see some artifacts. But anyway, it works, and it's the first time I've implemented a shadow shader (fun to say), so I'm a bit proud to have made it work with very experimental stuff :) BTW, it's coded as a post-process FX, so once it's done, it works like a small library and is easy to set up. I'll show you the full source of the FX, so you'll have an idea of how it is set up. (Note that the shader sources are hard-coded, but they can be loaded from files; anyway, I always prefer writing them in code, using const strings, as it prevents naming issues.) Then this is the initialization:
' this effect requires the multi-renderer (which exports color, depth and normals in a single pass)
' the light rendering could be done with a different renderer that I removed a long time ago...
' maybe I should redo it, as it exports the depth only
MultiRenderer().enableDepthOut()
MultiRenderer().enableNormalOut()
' create 2 buffers for depth from the light source and the main camera
Local mainBuffer:BBuffer = MultiRenderer().CreateBuffer( shadowsize,shadowsize );
Local shadowbuffer:BBuffer = MultiRenderer().CreateBuffer( shadowsize,shadowsize );
' create the fx post-process (set the first texture as the depth layer from the mainBuffer)
' 3D buffers are laid out like this: layer0 = color (normal render), layer1 = depth, layer2 = screen-space normal
Local fx:TFxShadow = TFxShadow.Create ( BufferTexture(mainBuffer,1), shadowsize,shadowsize );
' set the second texture as the shadow buffer's depth map
fx.setTexture( BufferTexture(shadowBuffer,1), 1, shadowsize,shadowsize );
Then, create 2 cameras and set them for the fx:
' 0.7 power > dark shadows (0.0 is no shadow visible, 1.0 is full black)
' 0.0002 > bias for the depth test
fx.setParams(0.7,.0002,cam, camlit);
And in the loop:
' set the multi-renderer to use the main buffer
MultiRenderer().Set(mainBuffer);
' render the scene to the offscreen framebuffer
cam.render();
' set the multi-renderer to use the shadow buffer
MultiRenderer().Set(shadowbuffer);
' render from the light source
camlit.render();
' apply the shadow FX (compares the 2 depth maps to extract the shadows)
fx.render()
' return to the back buffer
SetBuffer BackBuffer();
' draw the main rendered scene (the first texture from the framebuffer)
' I used a rect texture, but it also works with a non-pow2 texture
Local vh:Int = shadowsize * (Float(GraphicsHeight()) / GraphicsWidth());
drawTextureRect BufferTexture(mainBuffer,0), 0,0,shadowsize,vh, 0,0,GraphicsWidth(),GraphicsHeight()
' draw the shadow map on top with shade blend, so it only darkens the shadowed areas
SetBlend SHADEBLEND
drawTextureRect fx.getOutputTexture(), 0,0,shadowsize,vh, 0,0,GraphicsWidth(),GraphicsHeight()
And here it is.
So, to sum up:
- init by creating 2 buffers and the fx, then set up the fx
- render using the multi-renderer
- draw. |
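For readers new to the technique, the core per-pixel test of a depth-comparison shadow map fits in a few lines. The following is a hedged CPU-side illustration in Python, not the TFxShadow shader. It makes these assumptions:

- The light-space depth map is a plain 2D list.
- The pixel has already been projected into the light's view as (u, v, depth).
- `power` and `bias` play the same roles as the 0.7 and 0.0002 passed to `fx.setParams`.

The function names are hypothetical.

```python
def shadow_factor(shadow_map, u, v, depth_in_light, power=0.7, bias=0.0002):
    """How much to darken one pixel: 0.0 when lit, `power` when in shadow.

    shadow_map     -- rows of depths rendered from the light (nearest occluder per texel)
    (u, v)         -- the pixel projected into the light's view, in [0, 1)
    depth_in_light -- the pixel's own depth as seen from the light, same range as the map
    """
    # Outside the light's frustum the map has no information: treat as lit.
    if not (0.0 <= u < 1.0 and 0.0 <= v < 1.0):
        return 0.0
    height = len(shadow_map)
    width = len(shadow_map[0])
    occluder = shadow_map[int(v * height)][int(u * width)]  # nearest-texel lookup
    # The bias keeps a surface from shadowing itself because of depth precision
    # ("shadow acne"); too large a bias detaches shadows from their casters.
    if depth_in_light - bias > occluder:
        return power
    return 0.0


def apply_shadow(rgb, factor):
    """Multiply a colour down, like drawing the shadow layer with a shade blend."""
    return tuple(channel * (1.0 - factor) for channel in rgb)
```

The bias trade-off in the comment is the same one behind the artifacts and the caster/shadow gap mentioned in this thread.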

| ||

| Nice one, looks good! Performance seems quite nice for 2048x2048. How does scene complexity affect the shadows? Because of the two renders? What's your setup, graphics card? |

| ||

| 2 renders to 2048*2048 textures, yep. More entities to render will affect the framerate, as will the textures in the scene, though not that much actually. But the scene in the previous screenshot is so low-poly that it's not a good sample for framerate; it also has no textures at all, no animation and no collision. The next screen will show a more standard scene.
My setup:
* CPU: 6 cores, 3.1 GHz
* Radeon HD 7980
* 8 GB RAM, 1333 MHz
* Windows 8.1
So, it's far from the best, but it's certainly even farther from the worst. Good for me, not very good for testing performance on low-end machines. |

| ||

| This looks better indeed; however, there seem to be some inaccuracies (for example, the shadow at the center does not seem to be applied on the cube). Can you show the same scene but with walls and a ceiling (like a room)? What happens when there are multiple lights and one light illuminates the shadow cast by another light? About the complexity of the scene, it is always possible to create low-detail casters... Also, what happens if a surface uses both transparent and opaque texels, like the small branches/leaves of a tree or the texel shape of a particle? With stencil shadows it is not possible to cast such a shadow (only the shape of the triangles/surfaces is considered), but with your system I suppose it is possible? |

| ||

| The shadow system here uses the depth, which takes care of masked pixels (no alpha! We can't store alpha in a depth buffer). So it should be able to cast a tree's shadow. A light can't "highlight" a shadow, as the shadow is computed "after", but if I deferred the lighting pass it could happen... but I won't, because it would involve too much work. I'm just trying to make a minimalist SDK for now, not a "big shadow library" :) Anyway, as it's shader-based, anything we want to do should be possible if we export what we need and know how to use it. BTW, the light is "omni" but the shadow is rendered in the camera frustum only. So in a room it will not cast on all walls until we use a cubemap-like process to render all 6 views from the light. Once again, it's possible... but too much work for a demo. It will probably (certainly) come later.
Quote: "some inaccuracies (for example, the shadow at the center does not seem to be applied on the cube)"
Yep, as mentioned, the bias is not perfectly set up, so there is some offset, which creates a gap between entities and the projection. I already fixed that in the current version I'm working on (it works OK with an animated entity, by the way; I'll add a tree to cast shadows from the leaves, just for the purpose). |

| ||

| This looks nice, I am curious to see your next demo. |

| ||

| BTW, for coding my shaders, I use Notepad++ with a <user defined language> specific to GLSL + my engine variables (I actually added some stuff to a GLSL setup I found... I don't remember where). You can get it here (save it, don't open it... or copy-paste it... well, it's up to you) > http://mdt.bigbang.free.fr/bigbang/notepad_glsl_bigbang.xml That's what it looks like: The <attributes> </attributes> <main> etc. are extra tags parsed when the shader is loaded; their content is extracted into variables, which are then used to build the shader, and attribs are collected automatically. The same goes for functions, which are re-interpreted as BScriptFunction. The <fx.stuff> tags are pseudo-internal variables from the BFxProgram; they give access to data from the super shader (like textures, pixelsize, uv, etc.). <bb.depth> is a function that reinterprets a depth map exported by the renderer; it is defined in the engine core. (As it's a special encoding format, depth to color, it requires a specific function to rebuild the depth from the color.) |
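The post does not say exactly how BigBang encodes depth into color. The following Python sketch shows one common scheme, offered only as an assumption: scale the depth to a 24-bit integer and spread it over three 8-bit channels, with the most significant byte in red. `encode_depth`, `decode_depth` and the 24-bit layout are hypothetical.

```python
MAX24 = (1 << 24) - 1  # largest integer that fits in three 8-bit channels


def encode_depth(depth):
    """Pack a depth in [0, 1] into (r, g, b) bytes, most significant byte in red."""
    depth = min(max(depth, 0.0), 1.0)
    d = round(depth * MAX24)
    return (d >> 16) & 0xFF, (d >> 8) & 0xFF, d & 0xFF


def decode_depth(r, g, b):
    """Rebuild the depth from the three bytes (inverse of encode_depth)."""
    return ((r << 16) | (g << 8) | b) / MAX24
```

A single 8-bit channel only resolves 256 depth levels, far too coarse for shadow comparisons. That is why encodings like this spread the value across channels and need a dedicated decode function such as `<bb.depth>`. A GLSL version would typically do the same arithmetic with `floor` and `fract`.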

| ||

| Quote: "I use notepad++ with a <user defined language>" Notepad++ is very good :) |

| ||

| Well, I guess I have to ask, so here is my question: why should someone use your engine instead of OpenB3D? OpenB3D already provides Blitz3D-like features, including quaternion rotations (not available in miniB3D), and it also provides shaders, like your engine. Plus, OpenB3D has some features that you said your engine lacks, like:
- terrains
- shadows
- CSG
- particle system
- support for .3ds, .x and .md2 files
Last but not least, OpenB3D is 100% free; your engine will be more expensive. And the full source code of OpenB3D is available, so there will always be someone able to fix/improve it (this is in reply to RemiD). Oh, if anybody wants to try it, they won't suffer a 2-minute limit; they can test it for two minutes as well as for two weeks. |

| ||

| @angros47: I also realized that miniB3D and OpenB3D are more than just 3D graphics engines, so in a way they are more complete than this. But Bobysait seems to have created this 3D graphics engine for himself anyway, so if he decides to make it available and some people decide to use it, why not. Having more choices of functional and stable 2D/3D graphics engines with a Blitz3D syntax is beneficial for every coder who likes the Blitz3D programming style, so it's all good! (I will probably take a look at BlitzMax+miniB3D and FreeBASIC+OpenB3D in the future, but for now Blitz3D still works, so I will continue to use it until its last breath! Thanks for what you do on your side :) ) |

| ||

| @Angros: When you stop sounding sarcastic as hell, maybe you'll get some answers. |

| ||

| @Bobysait: I noticed at the start of this topic you mentioned "flat mode". Is that a kind of make-3D-look-like-2D thing, like a cartoonify sort of thing? |

| ||

| Where can I get that OpenB3D thing, and does it work with BMax? |

| ||

| @Naughty Alien http://aros-exec.org/modules/newbb/viewtopic.php?post_id=79976 It seems to be a port of miniB3D to FreeBASIC with several added functions + shaders + shadows + physics, etc., made by angros47. Interesting project (thumbs up). And still supported! http://www.proog.de/home/freebasic/tools-libs/27-3d-programmierung-mit-openb3d-und-freebasic http://freebasic.net/forum/viewtopic.php?t=15409&postdays=0&postorder=asc&start=0&sid=a24f7f2664ee3fe94501e44716cea02f
V1.1 Added:
- 3D actions: ActMoveBy, ActTurnBy, ActMoveTo, ActTurnTo, and many more
- Physics features: CreateConstraints and CreateRigidBody; with the latter, it's possible to define the position, scale and rotation of an entity by linking it to four other entities (usually pivots): if those entities are controlled by constraints, the main entity can be controlled in a physically realistic way
- Particle system: emitters can be made with CreateEmitter (even an "emitter of emitters" is possible)
- if a light is created with a negative light type, it won't cast shadows
- DepthBufferToTex: with no args it uses the back buffer, otherwise it can render the depth buffer from a camera (like in CameraToTex)
... plus many more |

| ||

| @Naughty Alien The official OpenB3D site is: https://sourceforge.net/projects/minib3d/ The site with the wrapper for BlitzMax is: https://github.com/markcwm/openb3d.mod The official threads for the BlitzMax version are: http://www.blitzbasic.com/Community/posts.php?topic=102556 http://www.blitzbasic.com/Community/posts.php?topic=103682 http://www.blitzbasic.com/Community/posts.php?topic=105082 @Bobysait Why do you call me "sarcastic"? You were the one who asked for it: "ps: I'm curious to know why nobody asked something like 'Why use your engine instead of minib3d?'" |

| ||

| Quote: "Oh, if anybody wants to try it, he won't suffer the 2 minutes limit, he can test for two minutes as well as for two weeks."
Seriously, I think I deserve a little more respect than that. Now, the way I want to distribute my engine is none of your business, and you're not allowed to comment on it. And, "Damned!", I won't let you post links to your engine. If you want info about what I have to sell, ask for it, but here you're like someone on the Porsche stand trying to sell Ferrari stuff... Do I really need to explain why you're being really impolite here? |

| ||

| Quote: "you're just like on the Porsche stand, trying to sell your Ferrari stuff"
LOL, that's one way to look at it, I suppose! :D I think this has all spiralled into a whirlwind. I see Bobysait's point and I see angros47's point. Although OpenB3D may do the same as Bobysait's engine, maybe his engine could be easier to use, in a wrapper kind of sense. It may be a good idea to compare them and weigh up the pros and cons. ;) |

| ||

| Actually, it's like we are both on a VW stand (since this is the Blitz3D forum, not your engine's forum), and you try to sell Porsche stuff while I promote Ferrari stuff. On YOUR forum, you can forbid me to post links to my engine, but this is not your forum. You are free to post links to your engine in the OpenB3D thread, just as I can post links to my engine in your thread. You are the one using somebody else's forum to promote a commercial product. Anyway, I won't post any more links in this thread, if you want. I actually want some info: you said your product has many advantages over Blitz3D (first of all, the BlitzMax language with OOP); I am just asking which advantages it has over OpenB3D and miniB3D. |

| ||

| Quote: "maybe his engine could be easier to use in a wrapper kind of sense. May be a good idea to compare them and weigh-up the pros and cons"
People will compare this engine to others later for sure, but most of the available engines are like modelisation software: you can pay a lot or nothing; in the end it's just a matter of how it feels in use. I made the engine work in many ways (object-oriented or not), with commands that are easy to use (I must admit this is partly thanks to the Blitz3D command set, which is IMHO the best one we can have for 3D manipulation). While that's better for prototyping, it could be a drawback for large things like a real game engine... The engine solves this by leaving everything (or almost everything) accessible to the user, so we can fit the engine to our needs and make it useful for more professional stuff. Here is a non-exhaustive list of extra things you can achieve easily, which are lacking in Blitz3D/miniB3D and make the engine ready for big projects:
1/ Surfaces are defined by vertex and triangle "arrays" that are not tied to the surface but are independent objects. (I say arrays for convenience; they are real objects that automatically update data on the fly and either send internal arrays to the graphics card or store data in graphics card memory for better and faster access.)
- A surface can be used the Blitz3D way, with classic commands like "AddVertex", "VertexNx", "VertexNormal(surf, index ...)", etc.
- The arrays can also be accessed independently as objects: surface.GetCoords() / surface.GetTriangles() / surface.GetNormals() / surface.GetTangent() etc. (there is also a basic command for each accessor, GetSurfaceCoords(surface), etc., if you want to program without objects)
-> each array in a surface is accessible and shareable independently.
There are so many ways to use this feature that I will just list the most relevant ones that come to mind. You can share vertex coords from one surface with another surface:
Surface1 = CreateSurface(mesh)
idx = AddVertex(surface1, x,y,z, u,v)
VertexNormal(surface1, idx, nx,ny,nz)
[...]
AddTriangle ...
Surface2 = CreateSurface(mesh2)
addSurfaceCoords(surface2, GetSurfaceCoords(surface1)) ' add the vertex coords from surface1 to surface2
addSurfaceNormals(surface2, GetSurfaceNormals(surface1)) ' add the vertex normals from surface1 to surface2
[...]
' do not add UV set 1
This links the coords/normals etc. of surface2 to the surface1 objects; except for UV set 1, it won't duplicate any vertex data. Then you create a specific UV set (coord set 1, for lightmapping) for each surface, which won't be shared between surfaces:
VertexTexCoords(surface1, 0, tex1_u0,tex1_v0, 1)
VertexTexCoords(surface1, 1, tex1_u1,tex1_v1, 1)
VertexTexCoords(surface1, 2, tex1_u2,tex1_v2, 1)
...
VertexTexCoords(surface2, 0, tex2_u0,tex2_v0, 1)
VertexTexCoords(surface2, 1, tex2_u1,tex2_v1, 1)
VertexTexCoords(surface2, 2, tex2_u2,tex2_v2, 1)
...
So each surface has its own UV set. Now you can lightmap all meshes without consuming extra memory just to store duplicate vertex data.
2/ Renderers are customizable: you can extend a renderer to manipulate what you need (the way brushes are rendered, or textures blended, or ...). This feature is probably useless for many users, because the standard renderers available are pretty complete. There are 3 main renderers available (there will be 5 in the end):
-> one that only renders geometry (without depth, normals, textures, lights, etc.), which can for example generate masks for entities if you want to make some glow in a post-FX, or can be a base to extend, to add an alpha mask or depth only... or whatever you need.
-> one albedo renderer (the "standard" renderer that renders geometry with normals, depth, textures, bump, lighting, etc.)
-> one multiple-output renderer (albedo, depth and normals exported to separate textures)
The two others to come are for faster access to a single layer:
-> depth only
-> normals only
Those two renderers can be useful to render only normals and/or depth in the light's view, for example (required by many post-process shaders). And, to keep it simple, switching from one renderer to another is as easy as setting the current buffer:
' set the multi-output renderer as the current renderer
SetRenderer (MultiRenderer())
' or in object mode
MultiRenderer().Set()
' or use the global renderer as the current renderer
SetRenderer (GlobalRenderer())
' ... in object mode
GlobalRenderer().set()
' ... etc.
Then you can render what you want. You don't need to hide/show stuff to render separate lists of entities; the engine lets you render an entity and its hierarchy directly:
RenderEntity (camera, Entity) renders the entity using the specified camera (recursing into the entity's hierarchy is optional [true by default])
RenderCamera (camera) renders the full scene like RenderWorld, but only with the specified camera
RenderWorld() renders everything with all cameras (the Blitz3D-like command)
You can also define lists to render:
AddRenderList(camera, Entity) > adds the entity to the camera's render list (recursing into the hierarchy is optional [true by default])
RenderList(camera) > renders all entities in the camera's render list
All those commands also exist in object mode: camera.render([optional entity]), camera.renderList(), camera.addRenderList(entity[,recursive]), etc.
3/ The main collisions are accessible and extendable: you're not limited to the sphere vs sphere/polygon/box methods; you can add as many collision styles, collision types and collision responses as you need. It then returns a simple integer for each collision type, as in Blitz3D: 1 for ellipsoid/ellipsoid, 2 for ellipsoid/polygon, etc.
You'll have more collision types available, and setting up an entity is as easy as EntityType( entity, MyTypeID).
4/ The engine provides a special shader-based type for post-FX. Post-FX are for 2D compositing and extra effects (for example, screen-space normals and depth maps exported with the multi-output renderer can be used to create an outline around entities, or a glow effect). Of course, I can't provide a specific post-FX for every effect, because there are tons of them, and most of the time we're looking for something particular, but I provide some useful ready-to-use effects and an SDK to create your own post-FX easily. Just to note, for the second shadow screen above, it took me at most 10 minutes to create the shader and render my first fully working scene from scratch. All I had to do was:
- create a camera for the light rendering
- render the scene in both the main camera and the light camera view
- create a postFx extension with a small shader source that takes ...
So, for the moment, I still have to finish it, and things will get clearer when it's done and ready for testing :) But for a first release it should already be pretty consistent, and I don't feel the need to justify the existence and the price of the engine. I leave it to users to decide whether it's what they are looking for. I hope this clarifies some questions. Cordially. |
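The sharing idea in point 1/ boils down to surfaces holding references to attribute arrays rather than owning copies. A hedged Python sketch follows. `VertexArray` and `Surface` are hypothetical classes for illustration, not BigBang's actual types. The real engine additionally keeps the arrays in graphics card memory, which this sketch does not model.

```python
class VertexArray:
    """A stand-alone per-vertex attribute array (coords, normals, one UV set...)."""

    def __init__(self, components):
        self.components = components  # 3 for xyz / normals, 2 for uv
        self.data = []

    def add(self, *values):
        if len(values) != self.components:
            raise ValueError("expected %d components" % self.components)
        self.data.extend(values)
        return self.count() - 1  # index of the vertex just added

    def count(self):
        return len(self.data) // self.components


class Surface:
    """Holds *references* to its arrays, so several surfaces can share one array."""

    def __init__(self, coords=None, normals=None):
        self.coords = coords if coords is not None else VertexArray(3)
        self.normals = normals if normals is not None else VertexArray(3)
        self.uv_sets = {}  # UV set index -> VertexArray(2), owned by this surface

    def uv_set(self, index):
        if index not in self.uv_sets:
            self.uv_sets[index] = VertexArray(2)
        return self.uv_sets[index]


# Build surface1, then let surface2 reuse its coords and normals.
surface1 = Surface()
for x in range(3):
    surface1.coords.add(float(x), 0.0, 0.0)
    surface1.normals.add(0.0, 1.0, 0.0)
surface2 = Surface(coords=surface1.coords, normals=surface1.normals)

# Each surface gets its own lightmap UVs in set 1.
for i in range(3):
    surface1.uv_set(1).add(i * 0.1, 0.0)
    surface2.uv_set(1).add(0.5 + i * 0.1, 0.5)
```

Because both surfaces point at the same coordinate object, editing a position through either one is visible in both. Only the per-surface UV set costs extra memory.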

| ||

| @Angros: Whatever you think, I'm not here to start a war with you, but you're off-topic. This is not my forum (as you mentioned, though I never claimed it was), but it's still my topic, and it is about the BigBang engine, not OpenB3D. I don't want to be aggressive, so could we just stop this? Then, to answer:
I won't list the advantages vs OpenB3D. I just give the features of my engine. First: it's not an engine that competes with an open-source one. Then: I have never used OpenB3D, so I don't even know what it contains and I can't compare. As for miniB3D, all I know about it is that, although it is a good community project, there are bugs that have never been fixed, and there are differences in transformations compared with Blitz3D that I can't accept in an engine I would use (that's just a problem for me; it doesn't have to be a problem for anyone else... but it might be). And from experience, I have already asked about broken stuff in miniB3D and never got any answers. I didn't expect many answers, and I don't blame the authors for not answering, but it just tells me that an open-source project is just an open-source project. It's nice to share and expand, but when you need something done, you'd often better do it yourself, and as soon as an update comes you'll have to reapply all the hotfixes you added. Those are a lot of constraints, which also happen with most GitHub projects, that I don't want to deal with, and it's generally worse with forked GitHub sources, which are rarely updated as often as the original, so you spend time switching between versions. Then, as mentioned in a previous post, I don't need to explain why someone should use a shareware engine instead of an open-source one, because it's a big discussion unrelated to the fact that I sell something, and it's too large a discussion to be addressed here. And anyway, it's just not the way I work, nor the way many people work.
I neither expect nor encourage open-source folks to understand or accept that. It's just my opinion. So all you'll get here is what the engine is capable of, not what it is capable of "against another engine". |

| ||

| Thank you for the infos. |

| ||

| @BobySait I like the name 'BigBang'. I'll wait to see some demos of the engine and of the 3D editor. Just to clarify, I don't want to see the 'classic' teapot :), I would like to see a 'complex' scene (shadows, shaders, etc.) to get an idea of the potential/speed of the engine itself. A question: will the editor export 'scenes' to use in the engine, directly or via some sort of ready-to-use functions? |

| ||

| Quote: "A question: the editor will export 'scenes' to use in the engine, directly or via some sort of ready-to-use functions?"
Both the engine and the modelisation software are made as standalone products, so I didn't do anything in that direction, but it shouldn't be a problem. The b3d output format is already an extension of the standard b3d, with many extra chunks (like "LITE" for lights, "CAMR" for cameras or "COLL" for collisions/pickmodes, etc.), which are already implemented in the b3d loader, so it recreates the scene "as is" from the modelisation software, and vice versa. BTW: loading the exported b3d in Blitz3D will just ignore the extra unsupported chunks, so the scene will be loaded without lights, cameras, etc., but will work as a legacy b3d would. Then, all entities are accessible via FindChild (by name) or GetChild (by ID), so a scene should be easy to extract from the b3d. Anyway, you're right, it would be a good thing to add a specific scene manager, and I will implement it later, so it could, for example, manage scripted stuff (like texture animations and so on). For the demo sample, I'm creating a scene big enough to have lots of entities, textures, animations, collisions and effects, so it will be easy to see and compute parameters to check what runs well and what doesn't. Obviously, we can't build an engine that will render extreme graphics on a low-end config... I did my best to optimize the engine, but as long as there is no magic involved, it will still be limited by the hardware running it :) |
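Blitz3D can ignore the extra chunks because of how the B3D format is laid out. Every chunk starts with a 4-byte tag followed by a 32-bit little-endian length of its contents, so a reader can jump over any tag it doesn't recognise. The Python sketch below shows that skipping logic. `make_chunk`, `iter_chunks` and `split_known` are hypothetical helper names. The tag set lists the standard B3D tags, and LITE is used only because it appears in the post above. The sketch makes no claim about where BigBang places its extension chunks.

```python
import struct

# Standard B3D chunk tags; anything else is treated as an extension and skipped.
STANDARD_TAGS = {b"BB3D", b"TEXS", b"BRUS", b"NODE", b"MESH",
                 b"VRTS", b"TRIS", b"ANIM", b"BONE", b"KEYS"}


def make_chunk(tag, payload):
    """Build one chunk: 4-byte tag, little-endian int32 payload length, payload."""
    return tag + struct.pack("<i", len(payload)) + payload


def iter_chunks(data, offset=0, end=None):
    """Yield (tag, payload_start, payload_end) for each sibling chunk in data[offset:end]."""
    if end is None:
        end = len(data)
    while offset + 8 <= end:
        tag = bytes(data[offset:offset + 4])
        (length,) = struct.unpack_from("<i", data, offset + 4)
        start, stop = offset + 8, offset + 8 + length
        if length < 0 or stop > end:
            raise ValueError("truncated or corrupt chunk %r" % tag)
        yield tag, start, stop
        offset = stop  # jump over the payload, whether we understand it or not


def split_known(data, offset=0, end=None):
    """Return (known, skipped) tag names, the way a legacy loader would sort them."""
    known, skipped = [], []
    for tag, _start, _stop in iter_chunks(data, offset, end):
        name = tag.decode("ascii")
        (known if tag in STANDARD_TAGS else skipped).append(name)
    return known, skipped
```

For example, a NODE, then a LITE, then a MESH chunk comes back as `(["NODE", "MESH"], ["LITE"])`. A legacy loader sees the lights as simply absent, which matches the behaviour described above.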

| ||

| Personally, I find it really annoying when somebody is determined to do well and charge some money for their hard work, and then somebody else suggests a free piece of software that might or might not be supported, and might contain some bugs. But hey, it's free! But the opposition is putting in some professionalism and support. True, this site belongs to neither of you, but why hijack another's thread? It just seems like sabotage. |

| ||

| Wow, looks as though you've worked hard on this one. Looking really good, Bobysait, keep at it mate... |

| ||

| @Bobysait Thank you for the info about the 'extended' B3D model. An interesting approach for saving/loading 'world/scene'. |

| ||

| This looks like a promising engine, Bobysait. I hope you do well with it; you've obviously worked really hard on it. You're competing with Leadwerks more than with OpenB3D; the advantage I see here is that users can use BMX instead of Lua. You will need to wrap Newton or some other physics engine, of course. I have a suggestion: if you kept a core module of the engine closed source (like BMX did with bcc) and open-sourced the rest, that would be more appealing to some people. |

| ||

| I agree with munch. I'll be more than happy to go back and mess around with BMax if a relatively decent 3D engine exists for it. Right now I've started messing around with this OpenB3D thing, but I wouldn't mind paying for a decent, nice 3D engine for BMax... and I hope you will use Bullet instead of Newton. |

| ||

| +1 for Bullet physics engine. |

| ||

| I hope I will too :) Bullet is pretty good. While the engine supports a lot of triangles/vertices, I broke something in the matrix system (I switched from "do not update if not required" to "update anytime something moves"), and the framerate drops very fast on huge scenes. I need to fix this! In the meantime, scenes with a large number of surfaces per mesh render pretty fast (fps was limited to 30 in the capture; I don't like having my graphics card burning). So, at the moment, it's better to have a lot of surfaces/triangles than a lot of entities... which is good for big scenes, and bad for physics and animation ^^ |
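The "do not update if not required" strategy is usually implemented as a dirty flag on each node's cached world transform. Moving a node marks only that node and its descendants as stale, and a world transform is recomputed only when someone asks for it. An "update anytime something moves" approach instead redoes the work eagerly. The Python sketch below is hedged: it handles translation only (no rotation/scale matrices) to stay short. `Node`, `move` and `world` are hypothetical names, and this illustrates the general technique rather than BigBang's matrix code.

```python
class Node:
    """Scene-graph node caching its world position (translation only, for brevity)."""

    def __init__(self, x=0.0, y=0.0, z=0.0, parent=None):
        self.local = (x, y, z)
        self.parent = parent
        self.children = []
        self._world = None
        self._dirty = True
        self.recomputes = 0  # counts cache misses, for the example only
        if parent is not None:
            parent.children.append(self)

    def move(self, dx, dy, dz):
        x, y, z = self.local
        self.local = (x + dx, y + dy, z + dz)
        # Only this node and its descendants become stale; the rest of the scene is untouched.
        stack = [self]
        while stack:
            node = stack.pop()
            node._dirty = True
            stack.extend(node.children)

    def world(self):
        """Return the cached world position, recomputing it only if it is stale."""
        if self._dirty:
            lx, ly, lz = self.local
            if self.parent is None:
                self._world = (lx, ly, lz)
            else:
                px, py, pz = self.parent.world()
                self._world = (px + lx, py + ly, pz + lz)
            self._dirty = False
            self.recomputes += 1
        return self._world
```

With this scheme the per-frame cost scales with how much of the scene actually moved, not with the total entity count. That is why static, entity-heavy scenes benefit most.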

| ||

| This is extreme :D Maybe let the user define the complexity/details in the scene because some of us may not have a graphics card as good as yours to run this... |

| ||

| Are you limited in the number of vertices/triangles per surface, like Blitz3D? Also, when can we test it? :p |

| ||

| I agree, very smart indeed. I can't help thinking there should be an Assassin's Creed guy running along those roofs ;) Nice work, Bobysait, looks as though you have a powerful engine there. |

| ||

| I limited surface triangle and vertex index to Signed Short ... so it can't exceed 32767 triangles and 32767 vertex ... I did to preserve the blitz3d compatibility (and it also consume 2x less memory to store vertex data) But, I know this limit is really annonying ... I probably should convert this to Int, as long as the engine really becomes powerfull with large amount of data much more than with lot of small ones. This capacity comes from BigBang can (and anytime its possible, "should always") store data to graphics memory I also implemented a "find" function in the texture class to try to recycle texture with same specs (from LoadTexture only -> it requires a path) so it doesn't duplicate textures from material that are already loaded (else, it can break everything if you load too much data ... I experienced it while reaching 2 Go Video Ram, my Graphics Card can support up to 3Go, but as 3dsmax was opened with the "Venice" scene which is very huge, it probably consumed a lot of memory too) And it's also more convenient to load multiple b3d than just one of 500 Mo ... and in this case, the materials are almost all the sames, but the texture should not be loaded more than once (static mesh, static textures, there is no point to load them twice or more) For the release, I have to deal with the timeline, and I have to say : I'm in late ! I have made a parser to export the doc, but there is still almost 1000 functions/methods/types to document and I only documented 500 at the moment (which was already a big step). I'm also optimizing the shaders and I rebuilt the scene graph matrix, so there is some issues I should solve easily but will take a bit more time. To be honest, I need to stop myself trying to make better and better and better ... 'cause there is always things to improve, but it should wait for updates as the engine already works. So, I promise the demo release on March the 1st. 
And the final release will come 2 weeks later, once you have tested the demo (so I can fix any remaining bugs before selling anything). @Ploppy: I made the engine to support my own project, which needs to load a big city (not Venice, actually; it's Le Mans, and it has nothing to do with car racing: it's just an MMO zombie survival game... yep... one more) with a dark cartoon style that requires some shader effects (outline, AO and a few more). So it's pretty robust for this kind of scene, while it will probably be slower for small games that require lots of small entities. I will later add support for more engine setups, so we can take the best of what we need and optimize the render accordingly (large scene and/or lots of entities). |
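The texture "find" mentioned above amounts to a cache keyed on the texture's path (only LoadTexture has one) plus its flags, so that asking for the same file twice returns the texture already in memory. Here is a minimal Python sketch of that idea; `TextureCache`, `load_texture` and the (path, flags) key are assumptions for illustration, not BigBang's actual API.

```python
from dataclasses import dataclass


@dataclass
class Texture:
    path: str
    flags: int
    # decoded pixels and the GL texture name would live here in a real engine


class TextureCache:
    """Hypothetical texture registry: a texture loaded from a path is reused
    instead of being decoded and uploaded a second time."""

    def __init__(self):
        self._textures = {}  # (path, flags) -> Texture
        self.loads = 0       # number of real (uncached) loads

    def find(self, path, flags=0):
        # Only textures that came from a file can be found: the path is the key.
        return self._textures.get((path, flags))

    def load_texture(self, path, flags=0):
        texture = self.find(path, flags)
        if texture is None:
            texture = Texture(path, flags)  # stand-in for decoding + GPU upload
            self._textures[(path, flags)] = texture
            self.loads += 1
        return texture


cache = TextureCache()
first = cache.load_texture("city/facade_diffuse.png")
again = cache.load_texture("city/facade_diffuse.png")
print(first is again, cache.loads)  # True 1
```

Keying on flags as well as the path keeps "same specs" literal: the same file requested with different flags is treated as a different texture.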

| ||

| Why don't you add an option for the user to choose the vertex/triangle index size, 16 or 32 bits? That way, if you wish to use more than 32767 indices you can, and if you wish to save memory with 16-bit indices you can too. Just a thought... |

| ||

| That's doable. Actually, it just requires 2 or 3 lines of code to add the switch (probably a flag on CreateSurface). [Edit] Or maybe I should keep it at 16 bits and automatically switch whenever we add a vertex index above the short limit. (And by the way, the limit of a BlitzMax Short is 65535, not 32700 and some bananas... as they are unsigned shorts [0..65535].) Worth mentioning: the b3d file format uses INT to store vertex indices, so there is no problem using int instead of short. |
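The automatic switch from the [Edit] can be sketched as an index buffer that starts in 16-bit mode and rebuilds itself as 32-bit the first time an index above 65535 is added. This is a hedged Python sketch: the standard `array` module stands in for the GL index buffer ('H' is an unsigned short; 'I' is an unsigned int, 4 bytes on common platforms), and `IndexBuffer`/`add_triangle` are hypothetical names.

```python
from array import array

USHORT_MAX = 0xFFFF  # 65535, the largest value an unsigned short index can hold


class IndexBuffer:
    """Hypothetical triangle index buffer: 16-bit until a larger index shows up."""

    def __init__(self, force_32bit=False):
        # A creation flag (as suggested for CreateSurface) can skip the 16-bit stage.
        self.indices = array("I" if force_32bit else "H")

    @property
    def is_32bit(self):
        return self.indices.typecode == "I"

    def add_triangle(self, v0, v1, v2):
        if not self.is_32bit and max(v0, v1, v2) > USHORT_MAX:
            # Rebuild once: copy every existing 16-bit index into a 32-bit array.
            self.indices = array("I", self.indices.tolist())
        self.indices.extend((v0, v1, v2))

    def size_in_bytes(self):
        return len(self.indices) * self.indices.itemsize


buf = IndexBuffer()
buf.add_triangle(0, 1, 2)           # stays 16-bit: 3 indices * 2 bytes
buf.add_triangle(65534, 65535, 65536)  # 65536 forces the switch to 32-bit
```

The rebuild cost is paid once per surface, which is why forcing 32-bit up front only saves a little time when you already know the surface will be big.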

| ||

| I've been testing Ploppy's Hybrid/Hardwired, and modern shaders are really impressive. Playing with marching cubes and an ocean grid... since you can render faster with fewer entities, having "no limit" on vertices/triangles can be useful in these cases, to "group" meshes. I'm not planning to write a game with extreme graphics, but I like to test what an engine is capable of :p |

| ||

| You're right, it's better to have big meshes split into chunks (for fast spatial partitioning) than to run lots of frustum tests and matrix multiplications. - I added automation so triangle indices now switch when required (or on demand, but that should not be needed unless we want to save a little time when loading a surface; otherwise the buffer has to be rebuilt to accept integers). - I'm thinking about a terrain engine based on chunked LOD (as I've already done one for Blitz3D). The heightmap would be a texture object which, once sent to the vertex shader, would deform the vertices of the terrain patches. So we could also translate/rotate/scale the texture in real time. I don't know if there is a need for this, but I thought it could be awesome... or at least very fun :) But, one step at a time! I'll deal with the terrain engine once the module is fully downloadable, so I'm keeping the idea in the back of my mind. |
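The heightmap idea is only planned at this point, but the per-vertex work can be sketched on the CPU: each vertex of a flat patch samples the heightmap through a texture transform, so moving, rotating or scaling the texture reshapes the terrain without touching the mesh. A minimal Python sketch, assuming wrap-around bilinear sampling and a transform about the texture centre; all names are hypothetical, and chunked LOD itself is not shown.

```python
import math


def sample_bilinear(heightmap, u, v):
    """Bilinear lookup with wrap-around addressing (roughly GL_LINEAR + GL_REPEAT).
    heightmap is a list of rows; texel centres sit at (i + 0.5) / width."""
    rows, cols = len(heightmap), len(heightmap[0])
    x = (u % 1.0) * cols - 0.5
    y = (v % 1.0) * rows - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0

    def texel(i, j):
        return heightmap[j % rows][i % cols]

    top = texel(x0, y0) * (1 - fx) + texel(x0 + 1, y0) * fx
    bottom = texel(x0, y0 + 1) * (1 - fx) + texel(x0 + 1, y0 + 1) * fx
    return top * (1 - fy) + bottom * fy


def transform_uv(u, v, offset=(0.0, 0.0), scale=(1.0, 1.0), angle=0.0):
    """Hypothetical texture transform: rotate and scale about the centre, then offset."""
    cu, cv = u - 0.5, v - 0.5
    c, s = math.cos(angle), math.sin(angle)
    ru, rv = cu * c - cv * s, cu * s + cv * c
    return ru * scale[0] + 0.5 + offset[0], rv * scale[1] + 0.5 + offset[1]


def displace_patch(heightmap, patch_xz, terrain_size, height_scale, **texture_transform):
    """What the vertex shader would do for each vertex of a flat terrain patch."""
    displaced = []
    for x, z in patch_xz:
        u, v = transform_uv(x / terrain_size, z / terrain_size, **texture_transform)
        displaced.append((x, sample_bilinear(heightmap, u, v) * height_scale, z))
    return displaced


heightmap = [[0.0, 1.0], [0.0, 1.0]]  # low on the left half, high on the right
print(displace_patch(heightmap, [(7.5, 2.5)], 10.0, 20.0))
print(displace_patch(heightmap, [(7.5, 2.5)], 10.0, 20.0, offset=(0.5, 0.0)))
```

Shifting the texture by half its width moves the same vertex from the high half of the heightmap to the low half, which is the "animate the terrain by transforming the texture" effect.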

| ||

| I agree that it can be useful to have many vertices and triangles in a surface, but if you go over 32768, maybe there will be problems with some old graphics cards (or with some recent but low-end ones), don't you think? I suppose the best way to know is to test it on different computers. |

| ||

| There is no reason for such a limit to exist on the graphics card side; the only limit is video memory. On Android (old versions, up to 4.0 I think) you can only send UShort as triangle indices, but desktop OpenGL drivers don't have this limit. I only added the limit for Blitz3D compatibility, and because it keeps memory usage low. Since 32-bit indices are only used when a surface gets big, small surfaces are unaffected, so there won't be any problems. And hardware that couldn't handle int index arrays wouldn't load a GLSL 3.3 context anyway, so whatever the problem would be, it's solved :) |

| ||

| My own technique involves storing the mesh info in a dynamic scratch buffer resident in main memory. For each newly created 'surface', it is by default 1000 entries in size, for both the vertex buffer and the triangle buffer that make up the surface info of a mesh (for vertices: x,y,z,nx,ny,nz,ux,vy,ux2,vy2,rgb; for triangles: 3 unsigned ints containing vertex references). Each vertex/triangle added to the surface is inserted into the already created buffer. If the vertex/triangle being added has a higher index than the buffer's current size, the buffer is increased by a further 1000 entries. Giving a vertex/triangle buffer some leeway means less memory manipulation during mesh creation, and therefore a significant gain in speed. Once a render is called, the mesh is 'considered' finished as far as modifications/additions go. If the mesh's surfaces have just been created or modified since the last render, the buffers are shrunk down to their actual size so as not to waste memory, and the actual real buffers are then created from these scratch buffers. These are the buffers sent to the graphics adapter. Once they have been created and sent they cannot be modified, since they reside in the gfx adapter; this is why I keep a scratch version in resident memory (very much like in b3d). So all vertex and triangle index info is copied over to the newly created buffer destined for its one-way trip to Nvidialand/Atiland. If the vertex count is above 65535, the triangle index buffer is automatically configured for 32-bit. Once these buffers have been created, if no further modification is made, they will not be recreated on the next render.
Of course, if the program is not actually modifying/creating meshes but rather loading them from a file with no need for further modification, there is no special need to retain the 'scratch' buffers once the actual buffers have been created. Although in DirectX it is possible to create buffers that are to some extent 'shared' by the processor and the gfx adapter, these kinds of buffers are slower than write-only buffers. So, to make the most of the gfx adapter's power, I prefer this technique. I'm guessing that with OpenGL, similar considerations apply to memory management/access for buffers in general. |
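Ploppy's scheme above (grow by 1000 entries, trim and upload on the next render only if something changed, 32-bit indices only above 65535 vertices) could look like this in a simplified Python sketch. Vertices are reduced to positions, an `array` stands in for the write-only GPU buffer, and the class and method names are made up for illustration.

```python
from array import array

GROW_STEP = 1000     # entries added whenever a scratch buffer is full
USHORT_MAX = 0xFFFF  # largest index that fits in a 16-bit unsigned index


class ScratchSurface:
    """CPU-side scratch copy of a surface. Vertices are simplified to (x, y, z)."""

    def __init__(self):
        self.vertices = [None] * GROW_STEP
        self.triangles = [None] * GROW_STEP
        self.vertex_count = 0
        self.triangle_count = 0
        self.dirty = True        # scratch data differs from what was last uploaded
        self.gpu_indices = None  # stand-in for the write-only GPU index buffer
        self.uploads = 0

    @staticmethod
    def _reserve(buffer, slot):
        # Grow by whole steps instead of one entry at a time.
        while slot >= len(buffer):
            buffer.extend([None] * GROW_STEP)

    def add_vertex(self, x, y, z):
        self._reserve(self.vertices, self.vertex_count)
        self.vertices[self.vertex_count] = (x, y, z)
        self.vertex_count += 1
        self.dirty = True
        return self.vertex_count - 1

    def add_triangle(self, v0, v1, v2):
        self._reserve(self.triangles, self.triangle_count)
        self.triangles[self.triangle_count] = (v0, v1, v2)
        self.triangle_count += 1
        self.dirty = True

    def render(self):
        if not self.dirty:
            return  # nothing changed: keep the existing GPU buffers
        # Shrink the scratch buffers to their real size.
        del self.vertices[self.vertex_count:]
        del self.triangles[self.triangle_count:]
        # 32-bit indices only when the vertex count requires them.
        typecode = "I" if self.vertex_count > USHORT_MAX else "H"
        self.gpu_indices = array(typecode, [i for tri in self.triangles for i in tri])
        self.uploads += 1
        self.dirty = False


surface = ScratchSurface()
for i in range(3):
    surface.add_vertex(float(i), 0.0, 0.0)
surface.add_triangle(0, 1, 2)
surface.render()  # trims to 3 vertices and "uploads" 16-bit indices
surface.render()  # no change since last render: nothing is rebuilt
```

For meshes loaded from a file and never edited, the `vertices`/`triangles` lists could simply be dropped after the first `render()`, as described in the post.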

| ||

| Yep, I use the same technique. Arrays are stored in a bank (one bank per data type) and are resized by 4096 bytes (not entries) when required. When RenderWorld needs to bind the array, the GL buffer is created after the bank has been trimmed to its real size (unless the dynamic flag is set, in which case the bank is kept as it is for a dynamic surface). By the way, I also reduce the vertex data size by independently enabling/disabling the vertex color/tangent/UV0/UV1/bones... so the default surface contains only an array of coords, one of normals, and that's all. Other arrays are built when needed. For example, if we use surface.color(index,r,g,b,a), it will enable and build the vertex color array if it doesn't exist yet and fill it with default values (1.0,1.0,1.0,1.0 for color). This way, the city of Venice is half loaded (I didn't load the other half because exporting from obj format takes time... and I haven't finished exporting it yet) and "only" uses 500 MB of RAM/VRAM, diffuse+normal textures included. (Yep, I know it's already a huge amount of RAM, but there are already 3.5 million triangles in the scene, some 8 or 10 million vertices at 36 bytes or more per vertex, and some 200 MB or more of textures.) About OpenGL memory management: there are different modes to send and store data on the graphics card, depending on whether you need to update the array frequently or not, so you can load it as static or dynamic, with read and/or write access. Once it's stored in graphics card memory, I keep the RAM buffer for dynamic meshes; otherwise I would have to read the data back from the graphics card, modify the vertices/triangles, then upload them again from RAM to VRAM... that takes too long, so keeping the RAM buffer lets me modify whatever I need (with VertexCoords etc...) and it is updated automatically when the arrays are bound to the graphics card in RenderWorld.
It works the same way for textures: Lock/Unlock to update the pixels of dynamic textures, or WritePixel without Lock/Unlock, but that one is slow as hell :) |
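The lazily built attribute arrays described above might be sketched like this: coords and normals always exist, and an optional array such as color is only allocated, pre-filled with its default, the first time it is written. `Surface`, the `OPTIONAL` table and the UV defaults are assumptions; only the white RGBA default for color comes from the post, and the 4096-byte bank growth is left to Python's `array`.

```python
from array import array


class Surface:
    """Hypothetical surface: coords + normals always, other attributes on demand."""

    # attribute name -> (floats per vertex, default value for each float)
    OPTIONAL = {
        "color": (4, 1.0),  # RGBA, opaque white until set
        "uv0": (2, 0.0),
        "uv1": (2, 0.0),
    }

    def __init__(self):
        self.vertex_count = 0
        self.coords = array("f")
        self.normals = array("f")
        self.optional = {}  # only the attributes actually in use

    def add_vertex(self, x, y, z, nx=0.0, ny=1.0, nz=0.0):
        self.coords.extend((x, y, z))
        self.normals.extend((nx, ny, nz))
        # Keep every enabled optional array the same length as the coords.
        for name, data in self.optional.items():
            floats, default = self.OPTIONAL[name]
            data.extend([default] * floats)
        self.vertex_count += 1
        return self.vertex_count - 1

    def _enable(self, name):
        # Build the array on first use, filled with defaults for existing vertices.
        if name not in self.optional:
            floats, default = self.OPTIONAL[name]
            self.optional[name] = array("f", [default] * (floats * self.vertex_count))
        return self.optional[name]

    def color(self, index, r, g, b, a=1.0):
        data = self._enable("color")
        data[index * 4:index * 4 + 4] = array("f", (r, g, b, a))

    def bytes_per_vertex(self):
        floats = 6 + sum(self.OPTIONAL[name][0] for name in self.optional)
        return floats * self.coords.itemsize


s = Surface()
for i in range(3):
    s.add_vertex(float(i), 0.0, 0.0)
before = s.bytes_per_vertex()  # coords + normals only
s.color(1, 0.5, 0.25, 0.0)     # first color write allocates the color array
after = s.bytes_per_vertex()
```

A surface that never calls `color()` never pays for the color array, which is where the memory saving on a big static scene comes from.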

| ||

| Yes, you can also use dynamic buffers (for texture manipulation too) with DX, but it has the same speed problem as OpenGL. This is why I keep a scratch buffer in system RAM and prefer using an exclusive one-way buffer like you; you get more raw power out of the gfx adapter that way. |

| ||

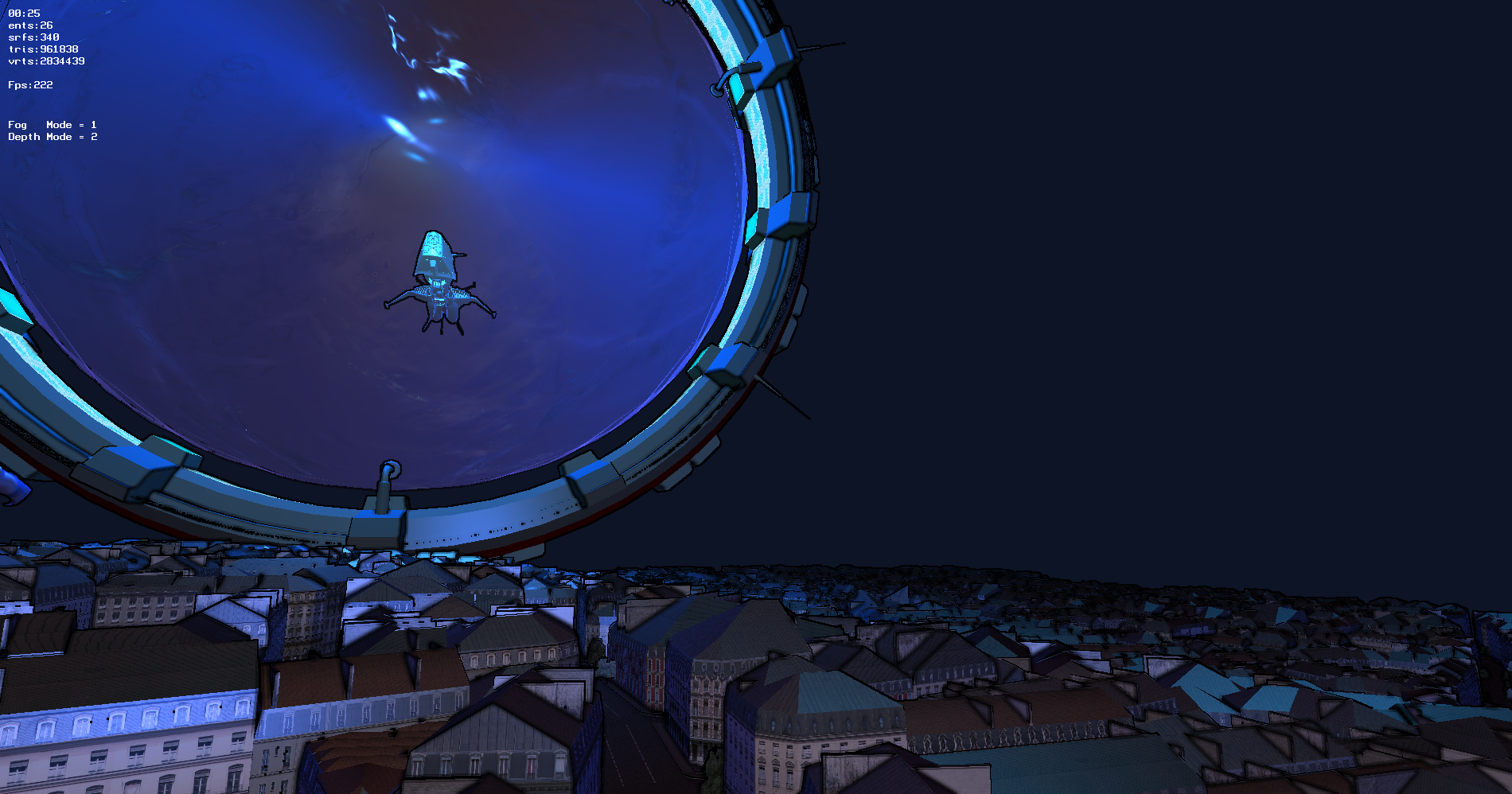

| I forgot to mention the fog modes. 3 modes are available and can be combined: 1 is the linear depth fog; 2 is the height fog (it doesn't depend on the camera position/rotation); 4 is the radial fog (only if 1 is active; it replaces the linear fog). So 1+2 is linear fog + height fog, 1+4 is radial, 1+2+4 is radial + height, and 4 alone is... nothing ^^ (4 only works if 1 is enabled). Here is a screen where I exaggerated the height fog and set it to (10,-1.0) -> you can set the far value lower than the near one; it reverses the fog direction :) |
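The fog mode values behave like bit flags, so combining them is a bitwise OR. Here is a small Python sketch of how a combined mode decodes, plus a clamped linear ramp showing why a far value lower than the near value reverses the fog direction. The constant names are hypothetical, and the assumption that the height fog uses the same linear ramp as the depth fog is not documented engine behavior.

```python
FOG_LINEAR = 1  # linear depth fog
FOG_HEIGHT = 2  # height fog, independent of the camera orientation
FOG_RADIAL = 4  # radial fog; only meaningful together with FOG_LINEAR


def active_fog(mode):
    """Decode a combined fog mode into the effects that will actually be applied."""
    effects = []
    if mode & FOG_LINEAR:
        # Radial replaces linear, but only when linear is enabled.
        effects.append("radial" if mode & FOG_RADIAL else "linear")
    if mode & FOG_HEIGHT:
        effects.append("height")
    return effects


def fog_factor(value, near, far):
    """Linear fog amount clamped to [0, 1]: 0 at `near`, 1 at `far` (near != far).
    With far < near, e.g. a height range of (10, -1.0), fog thickens as value drops."""
    t = (value - near) / (far - near)
    return min(1.0, max(0.0, t))


print(active_fog(FOG_LINEAR | FOG_HEIGHT | FOG_RADIAL))  # ['radial', 'height']
print(active_fog(FOG_RADIAL))                            # []
```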

| ||

| The height fog looks nice, like some kind of mist on the ground. I suppose these buildings are built procedurally? But maybe not the church/temple, and maybe not the layout of the city? |

| ||

| It's an "obj" file I downloaded from tf3dm.com. The full scene is 4.5 million vertices - 1.5 million triangles. I bump-mapped it in a dirty way, just to see how it runs. I finished the obj loader, so I can load obj files directly. It works pretty well, but I have to create a very big buffer to hold the data temporarily (obj is really a crappy file format), so it consumes a lot of RAM while loading (the memory is then released at the end of the loading process). I unleashed the beast for a moment just to see how well it runs... and it runs like a charm :) And a link to a big image (I don't want to stretch the page layout, so it will just be a link to the image): Big image. And this last one is... way over the top... I loaded the city 9 times (40 million vertices, 13.5 million triangles): Mega Stress Test. Finally, Venice is not Venice without water |

| ||

| "It's an 'obj' file I downloaded from tf3dm.com. The full scene is 4.5 million vertices - 1.5 million triangles." Ah ok... I thought you were building everything procedurally ;) Have you checked whether the meshes are optimized or not? (Sometimes exporters export 3 vertices for each triangle, as if each triangle were unwelded, but most of the time this is not necessary because some triangles can share vertices... depending on their normals and their vertices' UV coords, as you probably already know...) "my own project, which needs to load a big city (not Venice, actually; it's Le Mans, and it has nothing to do with car racing: it's just an MMO zombie survival game" I suppose you already know the TV series "The Walking Dead"? (about surviving a zombie invasion) (quite good imo) If you like this kind of show, maybe take a look at "Z Nation" and "Survivors" (also quite good imo) |
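The welding RemiD describes can be sketched as merging vertices whose position, normal and UV are all identical and remapping the triangle indices to the survivors. A minimal Python sketch using exact matches; a real welder would usually compare with a small tolerance. The `weld` function is hypothetical.

```python
def weld(positions, normals, uvs, triangles):
    """Merge vertices whose position, normal and UV are identical.
    Returns the unique vertex attributes and the re-indexed triangles."""
    remap = {}  # (position, normal, uv) -> new vertex index
    new_positions, new_normals, new_uvs = [], [], []
    new_triangles = []
    for triangle in triangles:
        new_triangle = []
        for old in triangle:
            key = (positions[old], normals[old], uvs[old])
            index = remap.get(key)
            if index is None:
                # First time this exact vertex is seen: keep it.
                index = len(new_positions)
                remap[key] = index
                new_positions.append(positions[old])
                new_normals.append(normals[old])
                new_uvs.append(uvs[old])
            new_triangle.append(index)
        new_triangles.append(tuple(new_triangle))
    return new_positions, new_normals, new_uvs, new_triangles


# A quad exported "unwelded": 2 triangles, 6 vertices, 2 of them duplicated.
pos = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 0, 0), (1, 1, 0), (0, 1, 0)]
nrm = [(0, 0, 1)] * 6
uv = [(p[0], p[1]) for p in pos]
welded_pos, _, _, tris = weld(pos, nrm, uv, [(0, 1, 2), (3, 4, 5)])
print(len(welded_pos), tris)  # 4 [(0, 1, 2), (0, 2, 3)]
```

Vertices on a hard edge or a UV seam keep different normals or UVs, so they correctly stay separate.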

| ||

| Seems to run well :) I like the fog modes, especially the height fog, which can be useful. |

| ||

| "Ah ok... I thought that you were building everything procedurally ;)" As soon as I have finished the last part of the engine, I will (I'm currently adding support for polygon modes > GL_LINES / GL_POINTS / GL_TRIANGLES [already included as the default "driver" for vertex arrays] / GL_QUADS / and... maybe... GL_POLYGON). At least, once GL_QUADS is done, it will save 1/3 of the vertex indices (4 indices for 2 triangles vs 6 indices with the standard GL_TRIANGLES mode). It's not that the engine suffers from polygon count, but... you know... optimization is optimization :) And it should allow smaller files. I also started integrating part of the shader for the texture projection mode (it overrides the vertex UV with the screen position of the vertex, so the applied texture will appear "flat"). I don't remember why it's useful, but I just remember it's really useful... You'll tell me ^^ In the end, it will just be a texture flag ("TEX_PROJECTION" = 2048 [alias: TEX_PROJ]); the projection can then be set with the SetOrigin/SetScale/SetRotation methods of the texture (those are BTexture methods, not Max2D commands). "I suppose that you already know the tv series 'the walking dead'? (about surviving a zombies invasion) (quite good imo) If you like this kind of movies, maybe take a look at 'z nation' and 'survivors' (also quite good imo)" Obvious is obvious :) but... I have never seen the last one, "Survivors"; I'll have a look ;) |
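Two of the ideas above in a short Python sketch: the index arithmetic behind the 1/3 saving of GL_QUADS, and a screen-space ("projected") UV computed from a vertex's clip-space position. The origin/scale/rotation parameters only mimic SetOrigin/SetScale/SetRotation hypothetically; the engine's real order of operations is unknown. Note that GL_QUADS is not available in an OpenGL 3.1+ core profile, so it needs a compatibility context. In a shader, the perspective divide would usually be done per fragment rather than per vertex.

```python
import math


def index_count(quads, mode):
    """Indices needed to draw `quads` quads: 4 each with GL_QUADS, 6 each as GL_TRIANGLES."""
    return quads * (4 if mode == "GL_QUADS" else 6)


def projected_uv(clip, origin=(0.0, 0.0), scale=(1.0, 1.0), rotation=0.0):
    """Screen-space UV from a clip-space position (x, y, z, w).
    origin/scale/rotation are a hypothetical stand-in for the BTexture setters."""
    x, y, _, w = clip
    # Perspective divide -> normalized device coordinates [-1, 1] -> [0, 1].
    u, v = (x / w) * 0.5 + 0.5, (y / w) * 0.5 + 0.5
    # Rotate and scale around the screen centre, then shift by the origin.
    u, v = u - 0.5, v - 0.5
    c, s = math.cos(rotation), math.sin(rotation)
    u, v = u * c - v * s, u * s + v * c
    return u * scale[0] + 0.5 + origin[0], v * scale[1] + 0.5 + origin[1]


quads = 1000
saving = 1 - index_count(quads, "GL_QUADS") / index_count(quads, "GL_TRIANGLES")
print(round(saving, 3))                   # 0.333
print(projected_uv((0.0, 0.0, 0.0, 1.0)))  # (0.5, 0.5): screen centre
```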

| ||

| All I can say is holy f**k! You're doing a grand job there. ;) |

| ||

| Thanks, I do my best (or at least I try to do the best I can while I'm really tired). "RemiD > post #47: This looks better indeed, however it seems that there are some inaccuracies (for example, the shadow at the center does not seem to be applied on the cube)" I missed this one: there is no error in the shadow projection; it's just that your point of view misses the actual position of the cubes in 3D space. The primitives are not sitting on the floor; most of them are floating, so the cube that does not receive the shadow is not an error (as Bill Gates would say: "it's a feature"), it's just higher than the shadow projection. And by the way, while not optimized, the small shadow sample can't produce errors other than the bias offset. It's not based on geometry projection but on depth comparison. |
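The depth-comparison principle can be shown in a few lines of Python: the depth pass keeps, per shadow-map texel, the surface nearest the light, and a fragment is shadowed only if it lies farther than that by more than the bias. Depths here are distances from a light above the scene, and the 0.005 bias is an arbitrary assumption; this is a sketch of the technique, not the engine's shader.

```python
def shadow_map_texel(depths_along_light_ray):
    """Depth pass: a shadow-map texel keeps the depth of the surface closest to the light."""
    return min(depths_along_light_ray)


def in_shadow(fragment_depth, stored_depth, bias=0.005):
    """Lighting pass: a fragment is shadowed if something nearer to the light was
    recorded at its texel. The bias absorbs depth-precision error (shadow acne)."""
    return fragment_depth - bias > stored_depth


# One light ray looking down: a floating cube top, a sphere, then the floor.
surfaces = {"floating cube": 5.0, "sphere": 7.0, "floor": 10.0}
stored = shadow_map_texel(surfaces.values())
for name, depth in surfaces.items():
    print(name, "shadowed" if in_shadow(depth, stored) else "lit")
# floating cube lit
# sphere shadowed
# floor shadowed
```

Because only depths are compared, a floating object above the occluder is simply lit; the only systematic error left is the bias, which can let a surface very slightly behind an occluder escape its shadow.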

| ||

| "There is no error on the shadow projection, just your 'point of view' that miss the actual position of the cubes in 3D space. The primitives are not stuck on the floor, most of them are flying, so the cube that does not receive the shadow is not an error" Indeed, I see that now, the cube does not touch the floor! So all is well. :) |

| ||