Tile-based game + screen scaling

BlitzMax Forums/BlitzMax Programming/Tile-based game + screen scaling

| ||

| Hello, I'm developing a tile-based sidescroller game using 16x16px tiles and sprites varying from 16 to 32 pixels (width or height). I haven't figured out the size of the playable area (width/height in tiles) yet, but it would probably lie somewhere between 20x15 tiles (320x240px) and 25x18.75 tiles (400x300px). So that the screen isn't too small and things are more visible, I would like to scale the screen up to at least 200% without filtering (to create a retro/nostalgic feeling with clearly distinguishable pixels). In my previous version of the game (I started a major overhaul a while ago) I did manage to zoom everything to 200% by adding a constant (int:2) which I multiplied almost every X/Y variable by (drawing tiles, player movement, collisions, etc.), and I scaled every image to 200%. After that I scaled back to 100% and drew HUD things like text. I think there must be a better way to zoom the screen than multiplying every variable, but I can't seem to think of one. That's where this topic comes in. Does anyone have a clue how to accomplish such a thing? It's a bit hard to explain; if you need further info, feel free to ask. Thanks, Shar |
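A minimal sketch of the alternative to multiplying every variable, assuming all movement and collision code stays in unscaled "virtual" pixels (e.g. 320x240) and only drawing converts to screen pixels. The helper names are hypothetical, and the code is Python purely to show the arithmetic, not BlitzMax API: it picks the largest whole-number scale that fits the window and centres the result.

```python
def integer_scale(virtual_w, virtual_h, window_w, window_h):
    """Largest whole-number scale that fits the virtual screen in the window,
    plus the offsets that centre it (letterboxing). Falls back to 1 if the
    window is smaller than the virtual screen."""
    scale = max(1, min(window_w // virtual_w, window_h // virtual_h))
    offset_x = (window_w - virtual_w * scale) // 2
    offset_y = (window_h - virtual_h * scale) // 2
    return scale, offset_x, offset_y


def to_screen(x, y, scale, offset_x, offset_y):
    """Convert a virtual-pixel position to screen pixels at draw time only;
    game logic never sees the scale."""
    return offset_x + x * scale, offset_y + y * scale


scale, ox, oy = integer_scale(320, 240, 800, 600)
print(scale, ox, oy)                       # 2 80 60
print(to_screen(16, 16, scale, ox, oy))    # (112, 92)
```

Keeping the scale in one place like this means a HUD can simply be drawn afterwards without going through `to_screen`.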

| ||

| With OpenGL: http://www.blitzmax.com/codearcs/codearcs.php?code=2315 |

| ||

| You could go the projection matrix route. You could also go the modelview matrix route, i.e. scaling the whole scene the way SetScale scales a single image. |
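To illustrate the projection-matrix route: an orthographic projection sized to the virtual screen, combined with a viewport the size of the real window, scales every draw call without touching game coordinates. The Python below only evaluates the standard glOrtho and glViewport formulas; it is not BlitzMax code, and whether Max2D leaves a custom projection in place is an assumption you would need to check. The call being modelled is glOrtho(0, 320, 240, 0, -1, 1) with a 640x480 viewport.

```python
def ortho_to_window(x, y, virtual_w, virtual_h, window_w, window_h):
    """Map a virtual-pixel point through glOrtho(0, virtual_w, virtual_h, 0, -1, 1)
    and glViewport(0, 0, window_w, window_h) to window pixels.
    Window y is measured from the bottom, as OpenGL does."""
    left, right, bottom, top = 0.0, float(virtual_w), float(virtual_h), 0.0
    # glOrtho rows for x and y: ndc = 2/(r-l)*x - (r+l)/(r-l), likewise for y
    ndc_x = 2.0 / (right - left) * x - (right + left) / (right - left)
    ndc_y = 2.0 / (top - bottom) * y - (top + bottom) / (top - bottom)
    # Viewport transform from normalized device coords [-1, 1] to pixels
    win_x = (ndc_x + 1.0) * window_w / 2.0
    win_y = (ndc_y + 1.0) * window_h / 2.0
    return win_x, win_y


# Virtual (10, 20) lands at window (20, 440): x doubled, y doubled and flipped.
wx, wy = ortho_to_window(10, 20, 320, 240, 640, 480)
```

With a 2:1 window, virtual pixel (x, y) ends up at window pixel (2x, 480 - 2y), which is exactly the 200% zoom, done once in the matrix instead of in every X/Y calculation.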

| ||

| @ Jesse: That's basically how I did it, a (static) variable that gets multiplied in X/Y calculations. But the code gets messy when I add that to movement and collision routines. @ ImaginaryHuman: I don't really know a lot about 3D, matrices, projections or views... :( Isn't it possible to grab the screen every(?) frame (pixmaps?), stretch/scale it to the actual width and height of the program and draw it? And then draw the HUD on top of it afterwards at a 1:1 scale? |

| ||

@SharQueDo yes:

GrabImage image2scale,0,0
SetScale 2.0,2.0
DrawImage image2scale,0,0

Here image2scale has to be created beforehand with CreateImage at the size of the area you grab. But it could be very slow depending on the type of game you are making.
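What unfiltered 200% scaling does to a grabbed frame can be shown with a plain pixel grid. This Python sketch only illustrates nearest-neighbour upscaling, where every source pixel becomes a factor x factor block of identical pixels. In Max2D the equivalent look should come from drawing an image created without the FILTEREDIMAGE flag, but that flag detail is an assumption, not something stated in this thread.

```python
def upscale_nearest(pixels, factor):
    """Scale a 2D grid of pixel values by a whole-number factor with no
    filtering: each source pixel is repeated factor times across and down."""
    out = []
    for row in pixels:
        wide_row = [p for p in row for _ in range(factor)]
        # Separate list copies so later edits to one row don't affect the others
        out.extend([list(wide_row) for _ in range(factor)])
    return out


for row in upscale_nearest([[1, 2],
                            [3, 4]], 2):
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```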

| ||

| GrabImage grabs into a pixmap, which means downloading the whole thing from video memory over the bus. A faster version, for OpenGL anyway, is glCopyTexSubImage2D(), which lets you copy directly from the backbuffer into an existing texture. I found this to be plenty fast enough to do at 60fps while also doing lots of other rendering. I initially used it for my blobby objects, i.e. draw lots of large images to the backbuffer, copy the backbuffer into the texture, then draw the texture using further filters and blending. |
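To put a rough number on "downloading the whole thing over the bus", here is a back-of-envelope sketch assuming 32-bit pixels (4 bytes each) read back once per frame at 60fps. The function name is hypothetical, and the figure ignores the upload back into a texture, which would add roughly the same traffic in the other direction if the pixmap is re-uploaded.

```python
def readback_bytes_per_second(width, height, bytes_per_pixel=4, fps=60):
    """Bytes copied from video memory to system memory per second if the
    whole frame is read back every frame (GrabImage-style)."""
    return width * height * bytes_per_pixel * fps


print(readback_bytes_per_second(640, 480))  # 73728000
```

About 74 MB/s just for a 640x480 read-back is the cost that glCopyTexSubImage2D() avoids by keeping the copy on the card.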

| ||

| Does anyone know of a DX version of glCopyTexSubImage2D()? And ImaginaryHuman, do you have a simple example of your solution? Thx! |

| ||

| No idea about a DX version, sorry. I don't have a simple example; it involves setting up an OpenGL texture, which in itself is several commands. You can set it up to not upload any actual pixels and it'll just reserve the memory space, i.e. use glTexImage2D and pass Null as the pixel pointer. Once you have a texture to use (or maybe you can use a Blitz `Image` and get access to its texture handle?), you bind it with glBindTexture and then make a simple call to glCopyTexSubImage2D, which copies into whichever texture is currently bound. Then you could DrawImage, or do your own textured quad code. |

| ||

| Hmm, sounds interesting. I can't justify the research to do this for my current game, which is a bummer. |

| ||

| Here is some old code regarding textures in OpenGL. This isn't my recent stuff or entirely specific to what you want to do, but it might give you an idea. You'll definitely need to modify it if you plan to use it, and can take some of the irrelevant junk out. This first bit is for initializing a dummy texture that you can use to copy the backbuffer into. The whole thing about `sub textures` you can ignore; it was just my way of using the texture to store multiple small images.

Field TextureHandle:Int=0 'The OpenGL handle ID number for this texture
Field TextureSize:Int=0 'The total width and height of the main texture
Field TextureFormat:Int=0 'The OpenGL internal format for the whole texture
Field Filtering:Int=0 'OpenGL filtering code, GL_NEAREST or GL_LINEAR

Method InitializeEmpty:Int(Size:Int=64,InternalFormat:Int=GL_RGBA8,SourceFormat:Int=GL_RGBA,Filter:Int=GL_NEAREST,Subs:Int=4)
	'To set up the main texture as empty, ready for sub textures and future
	'graphics uploads
	Remove() 'Remove any previous definition and texture in GL
	TextureFormat=InternalFormat 'Store the GL texture format
	Filtering=Filter 'Store filtering
	TextureSize=AdjustTextureSize(Size) 'Adjust to power-of-two size and store
	Repeat Until glGetError()=GL_NO_ERROR 'Wipe any previous GL errors
	Try
		glGenTextures(1,Varptr(TextureHandle)) 'Create a unique handle ID
	Catch E$ 'Handle exception
		If Printout Then Print "Couldn't create new texture handle: "+E
		Return False 'Failed, exit
	EndTry
	Try
		glBindTexture(GL_TEXTURE_2D,TextureHandle) 'Select the texture
		'Create an empty texture, no border, at the adjusted power-of-two TextureSize.
		'SourceFormat must be a pixel format such as GL_RGBA (GL_RGBA8 is only valid as an internal format)
		glTexImage2D(GL_TEXTURE_2D,0,TextureFormat,TextureSize,TextureSize,0,SourceFormat,GL_UNSIGNED_BYTE,Null)
	Catch E$ 'Handle exception
		If Printout Then Print "Couldn't create texture: "+E
		glDeleteTextures(1,Varptr(TextureHandle)) 'Delete the texture in GL
		Return False 'Failed, exit
	EndTry
	Local Err:Int=glGetError()
	If Err<>GL_NO_ERROR
		If Printout Then Print "OpenGL error creating texture: "+Hex$(Err)
		Remove() 'Delete this texture
		Return False 'Something wrong, fail
	EndIf
	glTexParameteri(GL_TEXTURE_2D,GL_TEXTURE_WRAP_S,GL_CLAMP) 'Edge clamping, must come after the glBindTexture()
	glTexParameteri(GL_TEXTURE_2D,GL_TEXTURE_WRAP_T,GL_CLAMP) 'Edge clamping
	glTexParameteri(GL_TEXTURE_2D,GL_TEXTURE_MAG_FILTER,Filtering) 'Larger-than texel filtering
	glTexParameteri(GL_TEXTURE_2D,GL_TEXTURE_MIN_FILTER,Filtering) 'Smaller-than texel filtering
	Return True 'Success
End Method

Grabbing a portion of the backbuffer into the dummy texture (I referred to a sub-texture from which I would get the position and size of the area to be copied)...

Function GGrabSubTexture(Tex:Texture=Null,SubTex:SubTexture=Null,FromX:Float=0,FromY:Float=0,Width:Float=1,Height:Float=1,RedScale:Float=1.0,GreenScale:Float=1.0,BlueScale:Float=1.0,AlphaScale:Float=1.0,RedBias:Float=0,GreenBias:Float=0,BlueBias:Float=0,AlphaBias:Float=0)
	'To copy a rectangular portion of the viewport into a predefined SubTexture within a precreated Texture
	'Changes the current texture
	Try
		glPixelStorei(GL_UNPACK_SWAP_BYTES,GL_FALSE) 'No swap bytes
		glPixelStorei(GL_UNPACK_LSB_FIRST,GL_FALSE) 'No byte swap
		glPixelStorei(GL_UNPACK_SKIP_PIXELS,0) 'No skip pixels
		glPixelStorei(GL_UNPACK_SKIP_ROWS,0) 'No skip rows
		glPixelTransferf(GL_RED_SCALE,RedScale) 'Upload with red scaling
		glPixelTransferf(GL_RED_BIAS,RedBias) 'Upload with red bias
		glPixelTransferf(GL_GREEN_SCALE,GreenScale) 'Upload with green scale
		glPixelTransferf(GL_GREEN_BIAS,GreenBias) 'Upload with green bias
		glPixelTransferf(GL_BLUE_SCALE,BlueScale) 'Upload with blue scale
		glPixelTransferf(GL_BLUE_BIAS,BlueBias) 'Upload with blue bias
		glPixelTransferf(GL_ALPHA_SCALE,AlphaScale) 'Upload with alpha scale
		glPixelTransferf(GL_ALPHA_BIAS,AlphaBias) 'Upload with alpha bias
		glPixelTransferi(GL_MAP_COLOR,GL_FALSE) 'No color mapping
		glPixelTransferi(GL_MAP_STENCIL,GL_FALSE) 'No stencil mapping
		glPixelStorei(GL_UNPACK_ALIGNMENT,4) 'Bytes of alignment (maybe not needed for grab)
		If Width=0 Or Height=0
			'Row length is sub texture's width
			glPixelStorei(GL_UNPACK_ROW_LENGTH,SubTex.Width) 'How many pixels in a row (maybe not needed for grab)
		Else
			'Row length is specified width (should usually be <= subtexture's width and height)
			glPixelStorei(GL_UNPACK_ROW_LENGTH,Width) 'How many pixels in a row (maybe not needed for grab)
		EndIf
		GCurrentTexture=Tex 'Store current
		GCurrentTextureID=Tex.TextureHandle 'Store handle
		GCurrentSubTexture=SubTex 'Store current
		glBindTexture(GL_TEXTURE_2D,GCurrentTextureID) 'Select the texture to upload to
		If Width=0 Or Height=0
			'Upload sub texture that is same size as SubTexture object
			glCopyTexSubImage2D(GL_TEXTURE_2D,0,SubTex.X,SubTex.Y,FromX,FromY,SubTex.Width,SubTex.Height) 'Copy to subtexture
		Else
			'Upload sub texture that is specified width and height (should usually be <= subtexture's width and height)
			glCopyTexSubImage2D(GL_TEXTURE_2D,0,SubTex.X,SubTex.Y,FromX,FromY,Width,Height) 'Copy to subtexture
		EndIf
	Catch E$ 'Handle exception
		If Printout Then Print "Error trying to grab a sub texture from the backbuffer "+GCurrentTextureID+", "+E
		Return False 'Failed, exit
	EndTry
	Return True 'Success
End Function

Choosing the texture to draw with...

Function GTexture(Tex:Texture=Null,SubTex:SubTexture=Null)
	'Function to select what the current texture is
	If (GTextured=True) And (GCurrentTextureID<>Tex.TextureHandle) 'Change?
		GCurrentTexture=Tex
		GCurrentTextureID=Tex.TextureHandle 'Change texture
		glBindTexture(GL_TEXTURE_2D,GCurrentTextureID) 'Change in OpenGL
	EndIf
	GCurrentSubTexture=SubTex 'Change subtexture
End Function

Switching on texturing...

Function GTexturing(DoIt:Int=False)
	'Whether to do texturing
	If DoIt=True
		glEnable(GL_TEXTURE_2D) 'Switch it on
		GTextured=True 'Store it
		Return
	Else
		glDisable(GL_TEXTURE_2D) 'Switch it off
		GTextured=False 'Store it
		Return
	EndIf
End Function

Drawing a textured rectangle:

Function GRect(X1:Float=0,Y1:Float=0,X2:Float=0,Y2:Float=0)
	'To simply draw a filled colored quad
	'Vertex colors are from GColors
	'X1,Y1 = top left corner
	'X2,Y2 = bottom right corner
	Local SubTex:SubTexture=GCurrentSubTexture 'For speed
	If GShaded=GL_SMOOTH 'Smooth shaded
		If GTextured=True 'Draw it textured
			If GBegun=False Then glBegin(GL_QUADS)
			glColor4ub(GColorRed[0],GColorGreen[0],GColorBlue[0],GColorAlpha[0])
			glTexCoord2f(SubTex.BottomLeftTexCoordX,SubTex.BottomLeftTexCoordY)
			glVertex2f(X1,Y2) 'Bottom left
			glColor4ub(GColorRed[1],GColorGreen[1],GColorBlue[1],GColorAlpha[1])
			glTexCoord2f(SubTex.TopRightTexCoordX,SubTex.BottomLeftTexCoordY)
			glVertex2f(X2,Y2) 'Bottom right
			glColor4ub(GColorRed[2],GColorGreen[2],GColorBlue[2],GColorAlpha[2])
			glTexCoord2f(SubTex.TopRightTexCoordX,SubTex.TopRightTexCoordY)
			glVertex2f(X2,Y1) 'Top right
			glColor4ub(GColorRed[3],GColorGreen[3],GColorBlue[3],GColorAlpha[3])
			glTexCoord2f(SubTex.BottomLeftTexCoordX,SubTex.TopRightTexCoordY)
			glVertex2f(X1,Y1) 'Top left
			glColor4ub(GColorRed[0],GColorGreen[0],GColorBlue[0],GColorAlpha[0])
			If GBegun=False Then glEnd()
			Return
		Else 'Draw it solid
			If GBegun=False Then glBegin(GL_QUADS)
			glColor4ub(GColorRed[0],GColorGreen[0],GColorBlue[0],GColorAlpha[0])
			glVertex2f(X1,Y2) 'Bottom left
			glColor4ub(GColorRed[1],GColorGreen[1],GColorBlue[1],GColorAlpha[1])
			glVertex2f(X2,Y2) 'Bottom right
			glColor4ub(GColorRed[2],GColorGreen[2],GColorBlue[2],GColorAlpha[2])
			glVertex2f(X2,Y1) 'Top right
			glColor4ub(GColorRed[3],GColorGreen[3],GColorBlue[3],GColorAlpha[3])
			glVertex2f(X1,Y1) 'Top left
			glColor4ub(GColorRed[0],GColorGreen[0],GColorBlue[0],GColorAlpha[0])
			If GBegun=False Then glEnd()
			Return
		EndIf
	Else 'Flat shaded
		If GTextured=True 'Draw it textured
			If GBegun=False Then glBegin(GL_QUADS)
			glTexCoord2f(SubTex.BottomLeftTexCoordX,SubTex.BottomLeftTexCoordY)
			glVertex2f(X1,Y2) 'Bottom left
			glTexCoord2f(SubTex.TopRightTexCoordX,SubTex.BottomLeftTexCoordY)
			glVertex2f(X2,Y2) 'Bottom right
			glTexCoord2f(SubTex.TopRightTexCoordX,SubTex.TopRightTexCoordY)
			glVertex2f(X2,Y1) 'Top right
			glTexCoord2f(SubTex.BottomLeftTexCoordX,SubTex.TopRightTexCoordY)
			glVertex2f(X1,Y1) 'Top left
			If GBegun=False Then glEnd()
			Return
		Else 'Draw it solid
			If GBegun=False Then glBegin(GL_QUADS)
			glVertex2f(X1,Y2) 'Bottom left
			glVertex2f(X2,Y2) 'Bottom right
			glVertex2f(X2,Y1) 'Top right
			glVertex2f(X1,Y1) 'Top left
			If GBegun=False Then glEnd()
			Return
		EndIf
	EndIf
End Function |
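Because InitializeEmpty() rounds the requested size up to a square power-of-two texture, a 640x480 grab only fills part of it, so the quad that draws the grab needs texture coordinates below 1.0. The body of AdjustTextureSize() isn't shown in the post, so `next_power_of_two` below is only an assumption about what it does; the Python just shows the arithmetic, assuming the grab is copied to texel (0, 0).

```python
def next_power_of_two(n):
    """Smallest power of two >= n (a guess at what AdjustTextureSize() does)."""
    size = 1
    while size < n:
        size *= 2
    return size


def grab_texcoords(grab_w, grab_h, tex_w, tex_h):
    """Far-corner texture coordinates (u1, v1) of a grab_w x grab_h rectangle
    copied to texel (0, 0) of a tex_w x tex_h texture."""
    return grab_w / tex_w, grab_h / tex_h


size = next_power_of_two(max(640, 480))
print(size)                                   # 1024
print(grab_texcoords(640, 480, size, size))   # (0.625, 0.46875)
```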

| ||

| Cool. So just to be clear on how this works... It allows you to effectively render to a texture in VRAM, so you can build up a scene in VRAM, then output that whole texture as normal for the player to see (with extra filters if required)? If so, that would be a lot faster than drawing to the screen and doing a GrabImage, right? This would work for Mac versions of my games, but obviously I'd need to do a DX version; I guess it must also be possible in DX7. |

| ||

| Honestly, I wouldn't worry about DX7... it's really old hat. I'm definitely going to check out this code... thanks ImaginaryHuman |

| ||

| I use DX7 for all PC games and obviously OpenGL for all Mac games. Testing showed that GL works on fewer older PCs than DX, so that's why my PC games use DX. Remember I'm aiming at mass-market casual gamers, not cutting-edge hardcore gamer PCs. |

| ||

| Yah, I wish there was only OpenGL and no DX to consider. Sorry, my code up there might be a little messy and has some features mixed in which aren't entirely relevant to achieving this copy-to-texture. Really the only command that copies the backbuffer portion into a texture is glCopyTexSubImage2D(). It's just that it needs some other settings to be set up first, and it relies on having a texture to use, which in turn needs to be set up, etc., and then you've gotta be able to render it, so you gotta build your quad, etc. However, you could probably find a way to use Max2D's existing `Image` as your texture, so that all you have to do is use commands like CreateImage(), then look at the Type for TImage and find the OpenGL texture handle, then bind that texture with glBindTexture() and call glCopyTexSubImage2D(). Then you won't need to do any of the texture setup/activation/quad-construction stuff; you can just use DrawImage. You don't draw to the texture as such; you just draw to the backbuffer and then copy a rectangle from it into the texture. Note that this is faster than glCopyTexImage2D(), which (re)defines the whole texture image on every call instead of updating an existing one. On an nVidia GeForce 4MX (a bit old hat now) in a 1GHz G4 PPC iMac, the copying to texture was plenty fast enough to do a full screen at 1024x768 and to then re-draw that texture more than once with various alpha testing/blending at 60Hz. That GPU has about a 1 billion texels/second fill rate, so as long as you're up around that mark you should have plenty of speed to make use of it. |
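The fill-rate remark can be sanity-checked with simple arithmetic. This sketch assumes three full-screen passes per frame (one copy into the texture plus two redraws, standing in for "more than once") and treats the quoted texels/second figure as a hard ceiling, which is a simplification; the function name is hypothetical.

```python
def fill_rate_share(width, height, passes, hz, texels_per_second):
    """Texels touched per second, and the fraction of a GPU's quoted fill rate
    that represents, when every pixel of a width x height screen is written
    `passes` times per frame at `hz` frames per second."""
    texels = width * height * passes * hz
    return texels, texels / texels_per_second


texels, share = fill_rate_share(1024, 768, passes=3, hz=60, texels_per_second=1e9)
print(texels)           # 141557760
print(round(share, 3))  # 0.142
```

Roughly 14% of a 1 billion texels/second budget, which is consistent with the copy-and-redraw approach fitting comfortably at 60Hz.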

| ||

| In brl's max2d.mod, you'll find the file `image.bmx`, in which `TImage` is defined. TImage contains a field called `Frames:TImageFrame[]`, an array of TImageFrames. I still don't quite get what a frame is, other than maybe a sub-rectangle within the image, like a tile on a sprite sheet, used with LoadAnimImage. In `glmax2d.mod/glmax2d.bmx` you'll find the `TImageFrame` type, which contains an integer field called `name`. name is the OpenGL texture handle. It's just a number, which OpenGL recognizes as an allocated texture (once the image is created with CreateImage() or whatever). So if you were to use something like:

Local MyImage:TImage=CreateImage(1024,768)
Local Texture:Int=MyImage.Frames[0].name

That should give you the OpenGL texture. Then you can grab a portion of the backbuffer into the texture by calling a function something like:

Function GrabToTexture(Texture:Int=0,FromX:Float=0,FromY:Float=0,Width:Float=1,Height:Float=1,ToX:Float=0,ToY:Float=0)
	'To copy a rectangular portion of the backbuffer into a Texture
	glPixelStorei(GL_UNPACK_SWAP_BYTES,GL_FALSE) 'No swap bytes
	glPixelStorei(GL_UNPACK_LSB_FIRST,GL_FALSE) 'No byte swap
	glPixelStorei(GL_UNPACK_SKIP_PIXELS,0) 'No skip pixels
	glPixelStorei(GL_UNPACK_SKIP_ROWS,0) 'No skip rows
	glPixelTransferf(GL_RED_SCALE,1.0) 'Upload with red scaling
	glPixelTransferf(GL_RED_BIAS,0) 'Upload with red bias
	glPixelTransferf(GL_GREEN_SCALE,1.0) 'Upload with green scale
	glPixelTransferf(GL_GREEN_BIAS,0) 'Upload with green bias
	glPixelTransferf(GL_BLUE_SCALE,1.0) 'Upload with blue scale
	glPixelTransferf(GL_BLUE_BIAS,0) 'Upload with blue bias
	glPixelTransferf(GL_ALPHA_SCALE,1.0) 'Upload with alpha scale
	glPixelTransferf(GL_ALPHA_BIAS,0) 'Upload with alpha bias
	glPixelTransferi(GL_MAP_COLOR,GL_FALSE) 'No color mapping
	glPixelTransferi(GL_MAP_STENCIL,GL_FALSE) 'No stencil mapping
	glPixelStorei(GL_UNPACK_ALIGNMENT,4) 'Bytes of alignment (maybe not needed for grab)
	glPixelStorei(GL_UNPACK_ROW_LENGTH,Width) 'How many pixels in a row (maybe not needed for grab)
	glBindTexture(GL_TEXTURE_2D,Texture) 'Select the texture to upload to
	glCopyTexSubImage2D(GL_TEXTURE_2D,0,ToX,ToY,FromX,FromY,Width,Height) 'Copy to texture
End Function

You might not need all the glPixelTransferi and glPixelStorei calls, but I threw them in there. Then all you need to do is DrawImage MyImage,x,y (wherever you want). Note that I used glBindTexture, which selects a texture, and Max2D may be keeping track of which is the current texture and may not call glBindTexture if it thinks it already chose a texture, so we might be interfering with Max2D's state system. It might lead to the next-drawn image using the screen-grab texture instead of the proper texture. Either draw an empty 1-pixel image somewhere to the screen and then draw the grabbed image, or you could manually preserve state with something like:

Local OldTexture:Int
glGetIntegerv(GL_TEXTURE_BINDING_2D,Varptr(OldTexture))
'Do your texture grab binding/grab thing here, maybe draw it
glBindTexture(GL_TEXTURE_2D,OldTexture)
'You could do other drawing here with DrawImage and then later use DrawImage to draw the grabbed texture safely |

| ||

| This sounds cool and I tried it out but unfortunately MyImage.Frames[0].name will not compile because name is not found... |

| ||

| Hmm. I wonder if it's private? Or maybe I missed something? |

| ||

| Type TGLImageFrame Extends TImageFrame
	Field u0#,v0#,u1#,v1#
	Field name,seq

Looks like you might need to cast the frame to a TGLImageFrame first, i.e. the code would be:

Local MyImage:TImage=CreateImage(1024,768)
Local Texture:Int=TGLImageFrame(MyImage.Frames[0]).name

You'll need to have opened a screen/window, and be using the OpenGL driver, before this'll work. You also *might* have to DrawImage the image first before it'll create the texture handle the first time around; not sure. It should return 1 as the handle of the first-created image if this is the first image in your program. |

| ||

| Awesome thanks, will test out tomorrow. Going home now! |

| ||

| OK tried it out but unfortunately I get a blank screen, not sure why. Perhaps I'm not using it correctly. Care to take a look? Note that I had to do some special code to create the image frame so it doesn't return null. |

| ||

| You're not seeing anything when you draw the grabbed texture because you still have scaling switched on and it's currently scaling to 4-times the size, which will put the tiles way outside of the viewport, so you'll see nothing. Change the scale line to: Global scale#=1 or add: SetScale 1,1 prior to drawing the grabbed texture |

| ||

| The weird thing is that on an alternative computer, in Windows (ATI Radeon HD something), with the alphablend/glHint/glEnable polygon smoothing lines commented out and with images that are just 32x32 with no border, there are no tiling artefacts at all. I wonder if, perhaps, those artefacts only appear on lower-spec graphics cards? i.e. the higher-end cards have more accurate support for the edges of geometry? It seems inconsistent, somehow. And yet on my iMac and on you guys' computers it seems to be a problem. |

| ||

| Ok my bad... or yours ;-D ... because you drew the tiles outside the loop and then were only drawing the pre-grabbed image in the loop, it was never really moving the tiles. If you restructure your loop to include setting the scale to 4 and a call to DrawImages() before you re-grab and re-draw with scale at 1, you'll be good. On a Radeon HD 2400 Pro, the screen grab of 640x480 takes about 0.4 milliseconds (the card has about a 4-billion texels/second fill rate). You can do almost 2000 grabs per second, which seems very fast. Here's the updated version, which actually redraws the tiles and regrabs each frame. Remember you don't have to grab the entire screen; you can just grab a rectangle. |

| ||

| "SetScale 1,1": Holy Crud, it works! Looks great, no artifacts! Well spotted, btw. As for why I grabbed outside of the loop, I just wanted to see what happened if I prepared a set of tiles drawn at integer coords, then grabbed them and then drew them at floating point coords and scaling, and the test worked. Also I wanted to safely do the OldTexture binding before I began using the texture below. Of course, for a scrolling landscape I would need to render the source tiles each frame (but at integer coords to avoid artifacts, not at floating point coords like in your tweaked version of my code) so I could grab and re-render at floating point coords with the grabbed texture. Actually, your version seems to be showing the edges jumping as if at integer boundaries, which is weird (and it's scaling 4x faster than my version), because if you get my version going (with an appropriately placed SetScale 1,1) it's perfectly smooth, and slower. I thought this might be to do with DrawImages using x, so I removed that, but it's still doing it. So I wonder if this is to do with the call to glGetIntegerv or the SetScale scale,scale being outside the loop in my version? Anyway, it's not giving the desired result for some reason. (Edit: I fiddled with it some by moving glGetIntegerv inside the loop, but it's still not right; something funny is going on...) So, anyway, I have a related question. If I did want to render new tiles each frame and grab each frame, would I need to create MyImage each frame too? I guess not; all I need to do is pre-create an empty MyImage and then do:

Cls

DrawImages()

glGetIntegerv(GL_TEXTURE_BINDING_2D,Varptr(OldTexture))

GrabToTexture(Texture,0,0,ScreenWidth,ScreenHeight)

glBindTexture(GL_TEXTURE_2D,OldTexture)

DrawImage MyImage,x,0

Flip 1

This is pretty much what you had anyway (minus the SetScales), but it's giving the weird jumpy edges. Hey, check out something weird I discovered: comment out DrawImage MyImage,x,0 and you'll see the texture repeated (and mirrored) infinitely in one corner! So now to get a DX version. Perhaps Indiepath's Render to Texture module would be good source material? |

| ||

| In the version I posted up there I moved the DrawImages() into the loop, so it actually draws, moves and scales the tiles on the fly at floating point coords, hence the whole-pixel edge jumping problem, i.e. it's what it would look like if you grabbed images drawn at floating point coords. It doesn't fix the artefacts and it's not supposed to. I think what you were trying to do was fix artefacts by drawing tiles only at integer coords and using the filtering of the grabbed texture to smoothly reposition them at float coords. Your method does work for integer positioning, but as soon as you try to draw the original tiles at float coords the artefacts are still there. So I suppose you could make a tile engine where you draw all tiles at an integer coordinate, then you grab the screen, then you re-draw the whole grabbed screen texture with a little bit of float offset (0..1 pixel in x and y). That would presumably fix any edge problems. One note though... bilinear filtering is not calculated in the same way that polygon smoothing is calculated. The quality is much poorer. You can see that on your original demo, where the space between the tiles visibly shifts around due to being bilinear filtered instead of fully antialiased. The infinitely repeating texture is probably because when you DrawImage it does a glBindTexture. If you don't do that, are you drawing to the grabbed texture? Or... you're not clearing the screen and are drawing on top in an infinite feedback? Or something. You figure it out. ;-D |
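A minimal sketch of the split that tile-engine idea implies, assuming a camera position kept in floating-point pixels: the whole-pixel part positions the tiles drawn before the grab, and the 0..1 remainder is the small float offset applied when the grabbed texture is redrawn. `split_scroll` is a hypothetical helper, and Python is used only to show the arithmetic.

```python
import math


def split_scroll(camera_x, camera_y):
    """Split a float camera position into a whole-pixel part (subtract it when
    drawing tiles into the backbuffer before the grab) and a 0..1 fractional
    part (subtract it when drawing the grabbed texture)."""
    whole_x, whole_y = math.floor(camera_x), math.floor(camera_y)
    return (whole_x, whole_y), (camera_x - whole_x, camera_y - whole_y)


print(split_scroll(37.25, -2.5))  # ((37, -3), (0.25, 0.5))
```

math.floor() rather than int() keeps the remainder in 0..1 for negative positions too; int(-2.5) would give -2 and a remainder of -0.5, which would push the offset outside the 0..1 pixel range.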

| ||

| "So I suppose you could make a tile engine where you draw all tiles at an integer coordinate, then you grab the screen, then you re-draw the whole screen grabbed texture with a little bit of float offset (0..1 pixel in x and y). That would presumably fix any edge problems." Yes, that was my intention from the start of using your OpenGL code; I don't need to draw the source tiles at subpixel coords. It works very nicely in fact, but only when I grab the texture outside the loop... With your code, if I delete the drawing at sub-pixel coords from DrawImages() it still has the edge jumping issues, so something weird is going on... I think it has to do with the scaling. I'm not sure BMax or the GPU knows which texture to apply the scaling to any more, something like that... |

| ||

| I think it's like moiré patterns produced by the scaling algorithm. I really do believe the only way around this is to treat the tile system like a terrain, except that there would be no z-axis changes to the mesh. Maybe that's a better way to explain it. |

| ||

| Again we need more than just to say `use a mesh`. We need to know exactly how, and why doing so would work. |

| ||

| OK... Using Blitz3D terms here... not sure if they 100% apply to DX9 or OpenGL, but the concept should be similar... Create a mesh and then add a surface for each tileset used, and assign a brush to each surface (containing the tileset texture). Then add appropriately positioned vertices and triangles to make each "tile" within the surface that contains the appropriate tileset texture. Assign UV coordinates to the vertices to make the right portion of the tileset appear within those triangles. That should make a tile grid (similar to a flat terrain) which can then be positioned for the camera to view... move the cam in to zoom in, out to zoom out, and even rotate it... |
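Read literally, that recipe means one surface per tileset, and for each tile some vertices plus two triangles whose UVs select that tile's rectangle in the tileset. A hedged Python sketch of building those lists, with plain tuples standing in for what Blitz3D's AddVertex/AddTriangle calls would produce; all names here are hypothetical. Note that it gives every tile its own four vertices rather than sharing corners with its neighbours.

```python
def tile_uvs(tile_index, tileset_cols, tileset_rows):
    """(u0, v0, u1, v1) of one tile in a tileset texture laid out as a grid."""
    col, row = tile_index % tileset_cols, tile_index // tileset_cols
    return (col / tileset_cols, row / tileset_rows,
            (col + 1) / tileset_cols, (row + 1) / tileset_rows)


def build_tile_surface(level, tile_size, tileset_cols, tileset_rows):
    """Vertex list (x, y, u, v) and triangle list (vertex indices) for one
    surface: four vertices and two triangles per tile in `level`."""
    vertices, triangles = [], []
    for ty, row in enumerate(level):
        for tx, tile in enumerate(row):
            u0, v0, u1, v1 = tile_uvs(tile, tileset_cols, tileset_rows)
            x0, y0 = tx * tile_size, ty * tile_size
            x1, y1 = x0 + tile_size, y0 + tile_size
            base = len(vertices)
            vertices += [(x0, y0, u0, v0), (x1, y0, u1, v0),   # top left, top right
                         (x1, y1, u1, v1), (x0, y1, u0, v1)]   # bottom right, bottom left
            triangles += [(base, base + 1, base + 2), (base, base + 2, base + 3)]
    return vertices, triangles


# A 2-tile level using tiles 0 and 4 of a 3 x 2 tileset, 16px tiles
verts, tris = build_tile_surface([[0, 4]], 16, 3, 2)
print(len(verts), len(tris))  # 8 4
```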

| ||

| I just got miniB3D working on my workstation after some MinGW woes and forgetting that I was using a beta BMax in a different folder lol, so if I get some time I will attempt to add a renderer to TileMax for this idea. The interesting thing about this method is that, if it works, most of the map would not need to be touched again after it's initially set up... other than cellular modularization for speed purposes... Scrolling, animated and parallax tiles would need to have their vertices' UV coordinates updated on the fly. God, I almost forgot that I will need to have 4 layers, and that means some playing with the Z order and the perspective issues that may ensue... ugh |

| ||

| GreyAlien - If you draw at anything other than integer coords, or if your scaling is anything other than a power of 2, you're going to see the edge jumping problem. If you comply with those rules you should not see any popping. You grab your integer-coord, multiple-scaled tiles into a texture and then you can draw that texture with any scaling you like. You can't do float scaling at the time of drawing the tiles, only when you draw the grabbed version. Blitz and the GPU are not confused ;-) Can you post your version of the code which still has a problem? Skully - There are a couple of limiting factors to what is possible. Firstly, when defining a piece of geometry, like the vertices of a quad, a triangle strip, a mesh or whatever, you are not allowed to change textures. You have to choose a texture first and then start defining the geometry. That means it is impossible to create any kind of geometry that has more than one texture. You have to define separate pieces of geometry and switch textures between them. The second issue is that, because you can't switch textures mid-geometry, you simply cannot create a `joined-together` mesh (as far as the GPU or API is concerned) that uses multiple textures. They have to be separate. With that in mind, I don't see any benefit whatsoever to defining a single mesh to handle multiple tiles. Let's say you want to draw an area of 8 tiles across and 8 tiles down. You draw these tiles to a single texture. Then you create 1 quad and fill it with that texture (like what GreyAlien is trying to do). The results you'll get by doing this as one quad are exactly the same as how it's going to look if you split the quad into thousands of small pieces. No matter how you define the geometry, you can still only map one texture to it, so all you're now going to have to do is recalculate texture coordinates across the various vertices in the mesh so that you don't get distortion.
And the end result will be that you're looking at your texture as if it were just a regular rectangle, just like a single quad. There is no benefit to using a mesh except to unnecessarily increase the number of triangles, with absolutely no usefulness other than that you can now warp the texture image by moving the vertices to unaligned positions. What it sounds like you're saying is that somehow you can create a grid of quads and apply individually different tile textures to each quad in the grid. But you can't do that. If Blitz3D is giving you the impression that you're doing this, then behind the scenes it surely must be just drawing lots of separate quads with texture changes in between them. And in so doing, the GPU has no awareness of the other quads, so it can't consider them or their textures when calculating the filtering or edges. It would act like just drawing separate individual quads. When you use a `mesh` like this in Blitz3D, is it 100% free of the artefact problems that BlitzMax has? i.e. when you draw to float coordinates, do the edges sometimes pop to fill another whole pixel? And when you put two separate tile textures next to each other randomly, does it properly antialias the edges together with no artefacts or shifting or background gaps? If it does, I'd like to know how it's doing it. Is Blitz3D a DirectX-only system? In terms of a flat terrain (as if viewed from above), if I want to pull individual tile images from a pool of images and have them show up in random patterns/arrangements to produce the overall screen, that is impossible without defining each tile's geometry separately in OpenGL, even if the tiles are like a spritesheet on one texture/surface. Otherwise it would be attempting to interpolate from one tile's corner way over here to another tile's corner way over there, with strange results. You'd need separate texture coordinates at the corner of each tile for every individual tile, not shared coordinates.
So I'm still to be convinced that meshes will help in any way. But I'm still open minded to hear if it is possible in some way. |
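A simplified model of why non-integer scales make edges "pop": if every source-pixel edge is snapped down to a whole screen pixel (ignoring OpenGL's exact pixel-centre rasterization rules), a 1.5x scale gives alternating 1- and 2-pixel-wide texels, and moving the origin by half a pixel swaps which ones are wide. `pixel_widths` is a hypothetical helper written in Python for illustration. In this simplified model any whole-number factor comes out uniform, not only powers of two.

```python
import math


def pixel_widths(num_pixels, scale, origin=0.0):
    """Screen-pixel width of each source pixel when a row of num_pixels is drawn
    at `origin` with `scale` and every edge is snapped down to a whole pixel."""
    edges = [math.floor(origin + i * scale) for i in range(num_pixels + 1)]
    return [edges[i + 1] - edges[i] for i in range(num_pixels)]


print(pixel_widths(4, 2.0))        # [2, 2, 2, 2]
print(pixel_widths(4, 1.5))        # [1, 2, 1, 2]
print(pixel_widths(4, 1.5, 0.5))   # [2, 1, 2, 1]
```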

| ||

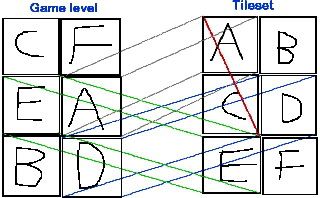

This little example shows what happens to the texture coordinates. On the left is the game level we're trying to assemble, using the tileset on the right. The tileset on the right is a set of 6 tiles which are actually drawn in this arrangement on a single image/texture. We want to pull from the tileset the appropriate tiles to assemble the image on the left. You can see, as indicated by the red line, that it would be necessary for the top left corner of tile A to be exactly next to the top right corner of tile E. However, tiles A and E are nowhere near each other in the set of tiles. So if they share a vertex in the game level `mesh`, that vertex only has one texture coordinate. It can't be the coordinate of BOTH tiles' corners at once, because they're not next to each other. Bigtime problems will ensue. Thus every tile needs its own set of four corner coordinates to anchor it to the appropriate part of the tileset texture. That means you have to create individual quads, one per tile, which means they have no awareness of each other, which means they can't possibly blend properly without extra workarounds. So a mesh is not going to help here. Even if each tile in the tileset were on its own texture, you'd still need not only separate quads but also texture changes between quads, which won't help with any kind of `joining together` or proper edge blending at float coords. Now, it's true that if you draw the game level by drawing individual separate quads at integer coordinates into the temporary assembly texture, THEN if you were to map that texture onto either 1 quad or 1 mesh of quads, it would appear to render one tile per quad, but why bother when one quad will do it? Although yes, tile edges would blend perfectly across the mesh, because it shares and has knowledge of the surrounding tile pixels, it will do that anyway without the mesh.
My point is, you can't mangle the texture coords to get a single mesh to draw multiple random tiles. So what case is there left for using a mesh? I think probably the only idea behind using a mesh to solve tilemap problems is that multiple tiles will be on the same single texture which is used to render the entire mesh. One texture, one mesh. One texture with multiple small tiles on it. And yes it would blend the internal edges properly. But there's no benefit over using a single quad. Also the mesh's size is limited by the size of the texture. That means any sizeable game level will require multiple textures, which means they're going to have separate edges, which means they're going to have issues with borders not matching up. |
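Here is a minimal sketch of the argument above, in Python rather than BlitzMax, purely to show the bookkeeping. It treats a tile level as a grid mesh with shared corner vertices and records which tileset UV each tile would need at each corner. Any grid vertex that needs more than one UV cannot be shared. The 3x2 tileset layout (tiles A to F as indices 0 to 5, row by row) and the helper names are assumptions for illustration.

```python
from collections import defaultdict

def tile_corner_uvs(tile_index, cols, rows):
    """UVs of a tile's four corners in a cols x rows tileset (assumed layout),
    keyed by the corner's (dx, dy) offset within the tile."""
    tx, ty = tile_index % cols, tile_index // cols
    u0, v0 = tx / cols, ty / rows
    u1, v1 = (tx + 1) / cols, (ty + 1) / rows
    return {(0, 0): (u0, v0), (1, 0): (u1, v0), (0, 1): (u0, v1), (1, 1): (u1, v1)}

def conflicting_vertices(tile_map, cols, rows):
    """Collect the UVs every tile wants at each shared grid vertex and
    return only the vertices that would need more than one UV."""
    wanted = defaultdict(set)
    for y, row in enumerate(tile_map):
        for x, tile in enumerate(row):
            for (dx, dy), uv in tile_corner_uvs(tile, cols, rows).items():
                wanted[(x + dx, y + dy)].add(uv)
    return {v: uvs for v, uvs in wanted.items() if len(uvs) > 1}
```

If E is placed directly left of A (`[[4, 0]]`), both vertices on their shared edge need two different UVs. Only a level that copies the tileset's own layout (`[[0, 1, 2], [3, 4, 5]]`) comes out conflict-free.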

| ||

| Well, the problem is clear in your diagram above... you need to align the vertices better.. you have large gaps between tiles and some are squished together, and the source tileset also has an alignment problem for the individual tiles (they should all be a power of 2) LOL JK, I couldn't resist. Back on focus: I'm sure this is how I got my Terrain system to work when I added roads... albeit a few years ago. I think my best bet here is to just test it... I recall that as long as all the tiles aligned side by side were from the same surface, they didn't suffer from any seams or anything. I'll test this out and see what happens. I get what you are saying... but something tells me it will work... or is that ringing in my ear? ;) I'll admit that my 3D experience is entirely in Blitz3D and yes, it's DX7 |

| ||

| So a surface is a texture? And a mesh is like a single `heightmap`, ie one texture covering a square of terrain geometry? |

| ||

| No, the mesh is like the root object that surfaces get attached to. Those surfaces contain vertices, triangles (created from vertices), and the brush (texture + processing). So each tileset (texture) gets attached to a surface, and all instances of tiles within that tileset would be defined within that surface. |
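To picture the hierarchy described above, here is a rough Python model. The class names (`Mesh`, `Surface`, `Brush`) echo the Blitz3D terms, but this is a hypothetical sketch and not the Blitz3D API. In this model, each tile quad added to a surface gets its own four vertices so that it can carry its own UVs.

```python
from dataclasses import dataclass, field

@dataclass
class Brush:
    texture: str  # hypothetical: name of the tileset image

@dataclass
class Surface:
    brush: Brush
    vertices: list = field(default_factory=list)   # (x, y, u, v) tuples
    triangles: list = field(default_factory=list)  # (i0, i1, i2) indices into vertices

    def add_tile_quad(self, x, y, size, uv_rect):
        """Append one tile as its own 4 vertices and 2 triangles."""
        u0, v0, u1, v1 = uv_rect
        base = len(self.vertices)
        self.vertices += [(x, y, u0, v0), (x + size, y, u1, v0),
                          (x + size, y + size, u1, v1), (x, y + size, u0, v1)]
        self.triangles += [(base, base + 1, base + 2), (base, base + 2, base + 3)]

@dataclass
class Mesh:
    surfaces: list = field(default_factory=list)  # one surface per tileset texture
```

Two tiles placed side by side end up with 8 vertices, not 6. Their touching corners share positions but not vertices.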

| ||

| Grey... in your code, you first need to change the line DrawImage MyImage,x,0 to DrawImage MyImage,0,0, because as it stands it draws the grabbed texture at scrolling coordinates, on top of the already scrolled coordinates of the original tiles. You should be drawing tiles to int coords if you plan to draw the grab at an offset, and if you do that then the grab should presumably only be drawn to float coords between 0 and 1. Or you could draw tiles to multiples of the tile width and then let the grabbed texture draw to floats up to the tile width. But right now you have the grab output scrolling at the same time as the tile drawing. Also, using the original images you'll get the edge artefacts; you need to use the ones with the 1 pixel border, ie 1b and 2b. I also noticed that the confusion comes from using the projection matrix to scale *everything* while also trying to use setscale to scale the model. Because of your projection matrix, the tiles still draw at a scaled size, regardless of the fact that setscale is at an integer amount, causing the tiles to overlap partial pixels. Then you also draw the grabbed texture at a projection-matrix-scaled position and size. The latter is okay, but drawing the initial tiles with projection scaling at non-powers of two will cause them to have edge problems. If you insist on using the projection matrix to scale the screen, then you'll need to set it to a non-zoomed matrix while drawing your integer-positioned tiles, and then switch it back to zoom mode to draw the grabbed texture. Or don't use projection zoom; use setscale instead. Setscale uses the modelview matrix. If you want to zoom with the projection matrix, you'll have to expect that your tile edges may end up at float positions, and deal with the edge jumping problem by other means. |
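This Python sketch shows the "draw tiles to multiples of the tile width, let the grab take the fraction" idea. It splits a float scroll position into the first whole tile column to draw and the leftover sub-tile offset. The grabbed image would then be drawn at x = -offset. The function names, and the convention that scrolling right increases `scroll_x`, are assumptions.

```python
import math

def split_scroll(scroll_x, tile_w):
    """Split a float scroll position into (first_tile, grab_offset).

    first_tile  - index of the leftmost tile column to draw (integer positions)
    grab_offset - leftover offset in [0, tile_w); draw the grabbed image at -grab_offset
    """
    first_tile = math.floor(scroll_x / tile_w)
    grab_offset = scroll_x - first_tile * tile_w
    return first_tile, grab_offset

def tile_draw_x(column, first_tile, tile_w):
    """Integer x at which a tile column is drawn before the grab."""
    return (column - first_tile) * tile_w
```

For example, a scroll of 37.25 with 16-pixel tiles draws from column 2 at whole-pixel positions, then draws the grab 5.25 pixels to the left.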

| ||

| Try this version, Grey.... I have disabled the projection matrix zoom and instead use setscale to zoom the grabbed texture. Tiles are drawn to integer coordinates, unaffected by zooming. Polygon smoothing is not needed. One thing that springs to mind though, is that if you want to draw your tiles to whole integer coordinates, and if those coordinates get modified by a projection matrix (which they would do), this prevents you from using the projection matrix to deal with aspect ratio correction. e.g. if you were to shrink or stretch the tiles to compensate for a different ratio, it's going to cause the tiles to draw to non-integer coordinates, producing problems. I think the only way to do aspect correction at the same time as perfect tiles is the method I suggested whereby you draw tiles to float coordinates and use the border matching plus the polygon smoothing in order to stitch things together properly. You wouldn't need the grab to texture, but maybe you can use it to draw changed/new tiles to the backbuffer then grab their rectangles into the cache texture. |
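To see why aspect correction clashes with integer tile positions, this Python sketch lists the tile edges that land on fractional pixels for a given horizontal scale. It uses `Fraction` to avoid floating-point noise. The 16-pixel tiles and the 6/5 stretch are arbitrary example values.

```python
from fractions import Fraction

def tile_left_edges(num_tiles, tile_w, scale):
    """Screen x of every tile edge after scaling (exact arithmetic)."""
    return [i * tile_w * scale for i in range(num_tiles + 1)]

def fractional_edges(num_tiles, tile_w, scale):
    """Edges that no longer fall on a whole pixel."""
    return [x for x in tile_left_edges(num_tiles, tile_w, scale) if x.denominator != 1]
```

With a 6/5 stretch, four of the six edges of a five-tile run fall between pixels. An integer zoom such as 2 leaves every edge on a whole pixel.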

| ||

| Skully, I looked at the B3D manual a bit to understand what you were saying. So a mesh is a container object that holds various surfaces, and a surface contains vertices, triangles made by connecting those vertices, and up to 4 layers of texture per surface. It sounds fine up to the part where you have more than one surface per mesh. I'd like to know how B3D renders the different textures to make them join together properly without problems, and whether it is able to do that. I guess we'd need to see a B3D example. I also thought it'd be fairly easy to do an OpenGL mesh demo, you just have to set up an index array, a vertex array and a texture coord array, and then use glDrawElements() to draw it. It would use 1 texture and spread it across the mesh. Anything inside the mesh bounds would have smooth edges between subtiles, but the edges of the mesh will still have issues. So I still don't see any reason to even try it. |
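For reference, this Python sketch builds the three arrays such a demo would need. They are a shared-vertex grid of positions, one UV per vertex stretching a single texture over the grid, and an index list with two triangles per cell. In OpenGL these would typically be handed to glVertexPointer/glTexCoordPointer and drawn with glDrawElements(GL_TRIANGLES, ...). That wiring is not shown, and the function name is hypothetical.

```python
def grid_mesh(cols, rows, tile_size):
    """Flat vertex, texcoord and index lists for a cols x rows grid of quads
    that share vertices and spread ONE texture across the whole grid."""
    vertices, texcoords, indices = [], [], []
    for y in range(rows + 1):
        for x in range(cols + 1):
            vertices += [x * tile_size, y * tile_size]
            texcoords += [x / cols, y / rows]  # one UV per shared vertex
    stride = cols + 1
    for y in range(rows):
        for x in range(cols):
            tl = y * stride + x
            tr, bl, br = tl + 1, tl + stride, tl + stride + 1
            indices += [tl, bl, br, tl, br, tr]  # two triangles per cell
    return vertices, texcoords, indices
```

Every UV is just the vertex position divided by the grid size. That is exactly what a single quad spanning the same area would interpolate, so the extra triangles add nothing visually.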

| ||

| @ImaginaryHuman: I had already altered DrawImages() so that it was ignoring X, therefore it was drawing the source tiles at integer coords - so I know that wasn't the problem. I was experiencing bad integer jumping at the edges, but scaled up; it was weird. However, it does seem that there was a problem in my code with SetScale and the projection matrix being used at once (I've used them both before in Unwell Mel and it was fine), so thanks for bringing that up. Here's the final code: I'm deliberately drawing the source tiles scaled (whilst the projection matrix is off) just to get larger tiles on screen for the test; of course I wouldn't do that in a final tile engine. The source tiles are drawn at integer coords. Then I grab them, set the scale back to 1, but use the projection matrix to zoom in (to simulate a full screen zoom special effect), and the end result is very nice. Very smooth, no integer jumping, perfect for a great looking game. Now I just need a DX version to make it commercially viable for me ;-) |

| ||

| ImaginaryHuman, I'm currently making an example using matrix code found in the samples folder of Blitz3D... I don't have a lot of time today so it won't be done today unfortunately. Big family... not much comp time. But it shouldn't take long once I can sit and concentrate on it. |

| ||

| Looks like some similar conclusions were drawn in this old thread: http://www.blitzmax.com/Community/posts.php?topic=66157 - ie to draw to integer coords as a grid of tiles (maybe call it a mesh if you like) and then grab that into a texture (or render to texture) and then draw the grabbed texture. |

| ||

| Grey - yes, looks fine :-) Notice that you don't need the polygon smoothing or the 1-pixel border around tiles, using the approach you're taking. The only downside though is that when you're zooming in you don't see extra texture detail. That's because you're taking predrawn tiles and scaling them up with the projection matrix. However, you could use tile images that are, say, double the resolution, and draw them at twice the size - you'd need to fill 4 screens. Grab each screen into 4 textures or one big one, then draw it scaled. It would look better when scaled up. But it looks pretty decent as is if you're only using it for occasional zooms. So just to recap, so far we have 2 solutions. Solution 1 is to duplicate tile borders into adjacent tiles so that when you draw them they filter properly, maybe with a 1-pixel border and polygon smoothing activated, and maybe drawing to a larger cache texture first. Solution 2 is to draw to integer coords, either by rendering to texture at int coords or by drawing to the screen and grabbing it, then draw the grabbed texture at float positions. Seems like both methods will work depending on your needs. Method 1 is probably more efficient since it doesn't need a fullscreen grab. I was reading through some old B3D threads about this topic and it seems like much the same issue exists there when using float coords. Grey, all you need now is some DX render-to/grab-to texture code - I'm pretty sure there is already such a module around here somewhere. Looking forward to whatever you're demoing, Skully. :-) [edit] Note, so long as you open a 32-bit screen/display/window, it should grab the alpha channel from the backbuffer. However I'm not sure if drawing to the screen's alpha buffer is enabled by default. It's easy to do. Then it'll let you draw alpha values into the screen's alpha and grab them, so that you can draw multiple layers of tiles with alpha blending per tile/pixel.[/edit] |
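The "double resolution" idea above is easy to size up. This Python sketch counts the screen-sized draw-and-grab passes, and the grab memory, needed when tiles are drawn at `detail` times their normal size. The 32-bit (4 bytes per pixel) assumption and the function names are illustrative.

```python
import math

def screens_needed(view_w, view_h, screen_w, screen_h, detail=2):
    """Screen-sized draw+grab passes to assemble a view drawn at `detail`x size."""
    return math.ceil(view_w * detail / screen_w) * math.ceil(view_h * detail / screen_h)

def grab_bytes(view_w, view_h, detail=2, bytes_per_pixel=4):
    """Memory for the assembled high-detail grab, assuming 32-bit pixels."""
    return view_w * detail * view_h * detail * bytes_per_pixel
```

At double detail an 800x600 view needs the 4 screens mentioned above, and about 7.7 MB for the grab.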

| ||

| Funny that we discussed most of this 2 years ago: http://www.blitzmax.com/Community/posts.php?topic=71484 I might try a test with glDrawElements() - it does let you specify shared vertices even for triangle strips in separate `rows` in the mesh. However, I think all it's going to do is let you wrap one texture across the geometry, so wouldn't really be any different than drawing it as a quad. Everything within the texture would look right, but not if the edges are visible. We could use one larger-than-screen texture and refresh it every frame with tiles. I don't see that making it a mesh will help tho. |
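Here is one way to size the larger-than-screen texture refreshed every frame. This Python sketch covers the screen plus one spare tile column and row, so the view can scroll by up to a tile within it, then rounds up to powers of two for older hardware. The spare-tile margin and the names are assumptions.

```python
import math

def next_pow2(n):
    """Smallest power of two >= n."""
    p = 1
    while p < n:
        p *= 2
    return p

def cache_texture_size(screen_w, screen_h, tile_w, tile_h, pow2=True):
    """Texture size holding a screenful of tiles plus one spare column and row."""
    w = (math.ceil(screen_w / tile_w) + 1) * tile_w
    h = (math.ceil(screen_h / tile_h) + 1) * tile_h
    return (next_pow2(w), next_pow2(h)) if pow2 else (w, h)
```

For a 320x240 view of 16-pixel tiles that is 336x256 of tiles, which becomes a 512x256 power-of-two texture.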

| ||

| The whole map is bound to one point that can be moved if it's a mesh... or am I missing something? |

| ||

| ImaginaryHuman: Yeah haha, we have discussed it a few times. Must be a hot topic for us. Similar conclusions, but this time I have working OGL code, which is a start. If I have time to get it working with DX too, that would rock. Also I need to speed test it. Good point about drawing using alpha. I don't need that right now but I could see it being useful for sure. I'll be interested to see your next test. |

| ||

| Skully, I'm not sure what you're asking. Are you saying that you can scroll through the tilemap level by moving the mesh? Yes you can. But so can you if it's not a mesh. So.... ? Or am I missing something too? :-D Grey - DX should be easy since all you need is a DX-based render-to-texture or copy-to-texture, and I am sure several other people have already been playing around with various versions of that code on these forums. Good luck. |

| ||

| OK, now I think I'm just not understanding something about the low level objects... I thought all 3D objects started with a mesh? be that a quad (which is 4 vertexes and 2 triangles?) |

| ||

| Grey - I don't think I'm going to bother with a mesh test. I just can't see any reason to. I know already that it's going to require more geometry than is necessary, that the tiles (on a single texture) within it will have perfect edges, and that the outside edge of the mesh will not have perfect edges. Someone did a test already in that older thread that showed this would happen. Your idea of being able to give the GPU a bunch of geometry and a bunch of textures and have it render them `afterwards` with proper cross-references is just not possible. You get one texture per piece of geometry, that's it. If your texture is big enough to hold a screenful of tiles, then good for you. But you only need to draw it as a quad. A mesh is a waste of time. Skully - A mesh is a group of more than 1 primitive object sharing some vertices, e.g. a strip of triangles, or a strip of quads, or multiple rows of a triangle strip with shared vertices along the edges. *A quad is already a mesh, composed of 2 triangles.* As I mentioned in that older thread, with a two-triangle mesh the interior shared edge of the two triangles blends perfectly together because it's one texture across the two triangles. It's just that you can't change to another texture or tile image without ending the geometry, and anything not sharing an edge gets the ugly edge problem at float coords. So making the mesh out of more than 2 triangles, say 4 rows of 8 triangles to represent 16 tiles, isn't going to change anything. The interior of the mesh will look fine but the outside edges will not. As I keep saying over and over, you don't need anything more than 1 quad to draw a texture if the texture already contains multiple tile images. Adding more triangles changes nothing at all. Make it a mesh, make it have millions of triangles, it will still behave the same. Just look at how a quad renders/performs and you'll see how a mesh performs. I consider that case closed. |
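Counting vertices makes the "a quad is already a mesh" point concrete. This Python sketch compares a tile grid built as one shared-vertex mesh with the same grid built from separate quads. The 1x1 case is a single quad, and the 4x4 case is the 16-tile example above. Only counts are modelled, nothing about rendering, and the function name is hypothetical.

```python
def mesh_stats(cols, rows):
    """Vertex and triangle counts for a cols x rows grid of tiles."""
    return {
        "shared_vertices": (cols + 1) * (rows + 1),  # one mesh, corners shared
        "separate_vertices": 4 * cols * rows,        # one independent quad per tile
        "triangles": 2 * cols * rows,                # the same either way
    }
```

Only the separate-quad version gives each tile its own four UVs. The shared version has exactly one UV per grid point.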

| ||

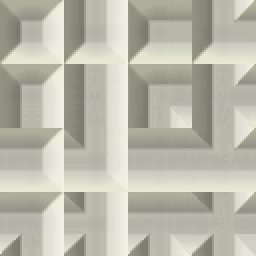

If your texture is a tileset such as the one above, but with larger individual tiles, can you not set the UV coordinates of the vertices (assuming 4 per quad) to display only a portion of the tileset? Since they would all belong to the same surface, they would get processed together at render time. The left/right/bottom/top vertices on adjacent tiles would have exactly the same coordinates but different UV mappings. I can't wait to test this.. I'm only getting a minute here, a minute there today (bathroom reno and BBQing at a school function)... so all I get to do is speculate right now :( |
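With one quad per tile, each quad's four corners take the UV rectangle of its tile. Here is a Python sketch of that lookup. It assumes a tileset with no margins or spacing, laid out row by row. The function name is hypothetical.

```python
def tile_uv_rect(index, tile_w, tile_h, sheet_w, sheet_h):
    """(u0, v0, u1, v1) of tile `index` in a tileset laid out row by row."""
    cols = sheet_w // tile_w
    tx, ty = index % cols, index // cols
    u0 = tx * tile_w / sheet_w
    v0 = ty * tile_h / sheet_h
    u1 = (tx + 1) * tile_w / sheet_w
    v1 = (ty + 1) * tile_h / sheet_h
    return u0, v0, u1, v1
```

In a 256x256 sheet of 32-pixel tiles, tile 5 maps to (0.625, 0.0, 0.75, 0.125) and tile 9 to (0.125, 0.125, 0.25, 0.25). The corner positions of neighbouring tiles coincide, but these UVs generally do not.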

| ||

| That is a nice tileset |

| ||

| That is a nice tileset. |

| ||

| Yeah, a pretty extensive set of tiles there! But basically I'm going to say no. If you want each quad to have unique texture coordinates at each corner of each quad, you HAVE to have separate quads. Otherwise, if vertices are truly shared, there is only one texture coordinate per vertex, not 4. You certainly can draw lots of individual quads next to each other, and you can position the vertices of those quads to be exactly on top of each other, but to the hardware every quad is still separate. It's because they're separate that the hardware does not have knowledge of other quads. While it's working on one quad, all it knows about is that one quad, and it can't take neighboring quads or their textures into account. The second issue is that if each quad has four unique texture coordinates, representing the corners of tiles which are in *entirely separate random locations* in the tileset texture, there is absolutely no interpolation between the edges of the quads. The only way for there to be interpolation at the edges (which is needed in order for two edges to share a pixel correctly) is for the source texels/pixels to be adjacent to each other in the tileset texture, which they're not. What that means is that in order to share texture coordinates at each vertex, the source tiles must *already* be next to each other in their correct tilemap positions prior to drawing the geometry. They won't be. The whole point of a tileset is that you can use any random tile at any time in any pattern. A mesh does not allow you to do that. What you CAN do is define lots of *separate quads*, one at a time, and map texture coordinates to each of their four corners, so that an individual tile can be drawn. But by doing so, there is no taking into account any neighboring tiles or their pixels. There IS a benefit to having a tileset on one texture, because you then don't have to switch textures in order to draw lots of tiles. 
While this will significantly speed up the drawing of *individual* tiles, you still can't use this to output a mesh of random tiles. That is not because the tileset is on one texture, but because *a vertex has to share texture coordinates if you want the edges of each tile to render properly*. Think about what is happening to the texture coordinates at each vertex. If you have one pair of coordinates X,Y at a vertex, there is then interpolation between those coordinates and the coordinates at the next vertex. That's what produces a stepping through the tile's texels. Interpolation occurs *between* vertices, not AT vertices. As soon as you introduce four texture coordinates at a given location, the only way to define that is to `end` the previous quad and start a new one. Ending a quad does let you change to a different texture/coords, but it does not allow the bilinear filtering to continue. It is the bilinear filtering that allows two adjacent texels to share a pixel. Without it there is a `hard edge`, blended between the texel from the tile and the background pixel. That's what produces a temporary transitional color which is 50% the texel and 50% the background. Then along comes the next quad, separately, and it blends 50% new texel with 50% of the previous merge result. That results in 25% the previous tile's texel, 25% the original background, and 50% the new tile's texel color. This is what produces the murky cross-blend color in between tiles. The cause of the occasional `jumping` of a whole new row or column of pixels at the leading edge of a moving tile comes down to the way bilinear filtering works. That is itself based on the way that `fragments` are generated by the GPU, which is based on the geometry. I don't know if you're familiar with the OpenGL pipeline and how geometry gets turned into images. You define some vertices, whether it be one triangle, lots of triangles separate from each other, or lots of triangles joined together. 
You don't really define triangles, you define vertices. As you specify each vertex, you can provide a few pieces of information: 1) an RGBA color associated with the vertex, 2) an x,y coordinate within the currently selected texture, 3) a surface `normal` defining which direction the `surface` surrounding the vertex is pointing (for lighting etc), 4) a position within a field of fog, and finally 5) the position of the vertex in 3D space. Specifying the position concludes the vertex, and you move on to the next one. A basic example of a mesh made of two textured quads joined side by side could look something like the code below. I didn't bother with changing vertex colors, and internally this becomes four triangles in a strip. With GL_QUAD_STRIP the vertices are given in pairs, one top/bottom pair per vertical edge, and each new pair adds one quad:

    glBegin(GL_QUAD_STRIP)
    ' left edge of the left quad
    glTexCoord2f(0.0,0.0) ' which texture coordinate is anchored at this vertex
    glVertex2f(50,50)     ' top left corner
    glTexCoord2f(0.0,0.5)
    glVertex2f(50,100)    ' bottom left corner
    ' middle edge, shared by both quads
    glTexCoord2f(0.5,0.0)
    glVertex2f(100,50)    ' top middle
    glTexCoord2f(0.5,0.5)
    glVertex2f(100,100)   ' bottom middle
    ' right edge of the right quad
    glTexCoord2f(1.0,0.0)
    glVertex2f(150,50)    ' top right corner
    glTexCoord2f(1.0,0.5)
    glVertex2f(150,100)   ' bottom right corner
    glEnd()

Calling this code (after setting up a viewport and binding a texture) will generate 2 textured quads on the screen. They will share the two middle vertices. The texture will be spread across the two quads, ie across all four triangles in the mesh. Since both quads use the same middle vertices and texture coordinates, the pixels along the join show exactly the texels in the middle (u = 0.5) of the texture image. Pixels to the left have their coordinates interpolated across the left quad, and likewise with the right quad to the right. 
Since they share vertices in the middle, bilinear texture interpolation will ensure that where the two `quads` join there will be an exact calculation of pixel coverage based on contributions from the 2 texels that straddle either side of the center pixels. The bilinear filter will just do the same thing it does in the middle of a quad, continuing to calculate what color the center pixels should be based on coverage from the texel to the left and the texel to the right. This is great. But, there is a limit. At the far outside edges of the two quads, where there is just empty space and no more quads, the bilinear filter doesn't have any pixels to blend between. It instead looks to the background pixels (or actually it might just ignore the background completely and give 100% contribution from the texel color, or compared to black). Since it has to get and modify at least part of the background pixel, this makes a 32x32 tile affect a 33x33 area. It can't write a partially-covered pixel value to `part of a pixel`, it has to write the whole pixel even if the pixel is barely covered by the quad's edge. That means the edge pixel, outside of the 32x32 area, has to be operated on as a *whole pixel*. What does whole pixel mean? The same thing as 1.0 extra pixels around the edge. What is 1.0? The same thing as 1 - an integer. That's why the edge seems to jump 1 whole pixel at a time. As soon as the quad is moved even slightly in a given direction, it now partly covers another pixel, so the whole of that other pixel now becomes involved in the bilinear filtering calculation. This is actually made even worse by the fact that the tile is actually only 32x32 pixels. Where is it going to get a 33rd pixel from? When the left edge of a quad still covers part of a pixel, and the right edge of the quad is starting to overlap another pixel, there are now portions of the overall 33x33 area that are outside of the bounds of the texture. 
When the bilinear filter tries to combine the edge pixel with the background pixel, it *should* (if it were correct) calculate how much of the pixel is covered by the quad, then scale the texel color appropriately, and then alphablend it with the background. But it doesn't do that. Instead it clamps the texture coordinate to within the tile, considers the edge pixel to be covering 100% of the background pixel, and then draws a whole pixel to the background. The edge pixel appears duplicated, or streaked, or stretched, in order to make it possible to cover `part of` a background pixel. The bilinear filter can't cross-fade between the background pixel and `part of` an edge pixel. It can only crossfade between the values of two whole pixels. It would take something like a bilinear filter on top of a bilinear filter. In OpenGL, enabling polygon smoothing is, in effect, exactly that. It takes the whole of the background pixel, calculates *how much of* it the edge texel covers, scales the texel color and alphablends it with the background. This gives it the appearance that it is properly filtered at the edge (ie antialiased). Polygon smoothing HAS to be used if a) the edge of a piece of geometry is visible onscreen, and b) you are drawing to float coordinates. Without it the edges just keep doing their ugly thing (unless you draw to int coords). So yes, a mesh (triangle strip/quad strip) will show images with proper edges everywhere except at the unshared edge pixels. The 1-pixel band just inside a tile's unshared edge is going to be included in the incomplete bilinear filtering of the existing background. This is especially evident at the leading edge of a moving tile. The leading edge is a combination of part of a tile texel and the background pixel. Since the leading tile texel is partly overlapping and moving into/over the background pixel, that edge texel is going to be between 1 and 99% overwritten by the result of the mis-blending. 
That means the leading 1-pixel border around the moving tile gets overwritten with the wrong color onscreen. This is why GreyAlien's idea of manually adding a 1-pixel border with 0 alpha around the edge of a tile avoids this problem. However, you shouldn't need to do this... you should instead use proper texture borders, which are a feature of OpenGL. You put the pixels of the background at the edge into the hidden border of the texture, so that bilinear filtering still operates on it and produces the correct cross-fade. But Max2D is not set up to use these borders, so faking it with a manual border does the same thing. So if you have one very large bigger-than-screen texture with predrawn, prepositioned tiles on it, you can draw it across the screen either with a quad or with a whole mesh of little rectangles. Either way it will look the same inside the texture, and bad at the edges if they are visible. Let's say they won't be visible because your texture is so big. Well ok. But that still doesn't explain how random tiles can end up in their final positions alongside each other just by changing texture coordinates. Certainly that won't happen. You get one texture coordinate per vertex, not four. If you want four, the vertices cannot be shared. If they're not shared, there will be edge problems. If they are shared, you have to have the tiles next to each other in the texture already. You cannot pull random tiles from a tileset texture and have them show up next to each other with perfect bilinear filtering by using only one texture coordinate per vertex. The texels that need to go next to each other are at different locations in the texture. It just doesn't work. It's impossible and it doesn't make sense. I think what you're hoping for is that you can put separate quads next to each other, each with 4 texture coordinates of their own, and do so in a way that the bilinear filter will just automatically work across their edges. It won't. 
The filter only works on 1 texture at a time, on one piece of geometry at a time. To make a quad you have to end the geometry and start a new piece. They are not shared or connected. Being shared is mutually exclusive to each tile having its own set of texture coordinates. It's either shared or not shared, you can't have it both ways. There is simply no way to define separate quads with shared vertices. |
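The two edge effects described above can be put into numbers with a small Python model. It answers two questions. How many whole pixel columns does a 32-pixel quad touch at integer vs fractional positions? What colour results when two separate quads each blend 50% of an edge texel over the same pixel? This is a simplified model of the explanation given here, not of any particular driver, and the sample values are made up.

```python
import math

def pixels_touched(x, width):
    """Whole pixel columns touched by a span [x, x + width)."""
    return math.ceil(x + width) - math.floor(x)

def blend(src, dst, coverage):
    """Plain alpha blend of a source value over a destination value."""
    return coverage * src + (1 - coverage) * dst

def seam_pixel(tex_a, tex_b, background, coverage=0.5):
    """Quad A blends its edge texel over the background, then quad B
    separately blends its edge texel over that result."""
    after_a = blend(tex_a, background, coverage)
    return blend(tex_b, after_a, coverage)
```

A 32-pixel tile at x = 10.3 touches 33 columns. With edge texels of 240 and 80 over a black background, the seam comes out at 100. An even mix of the two texels would be 160, and that gap is the murky seam.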

| ||

| That is a freaking nice tileset. |

| ||

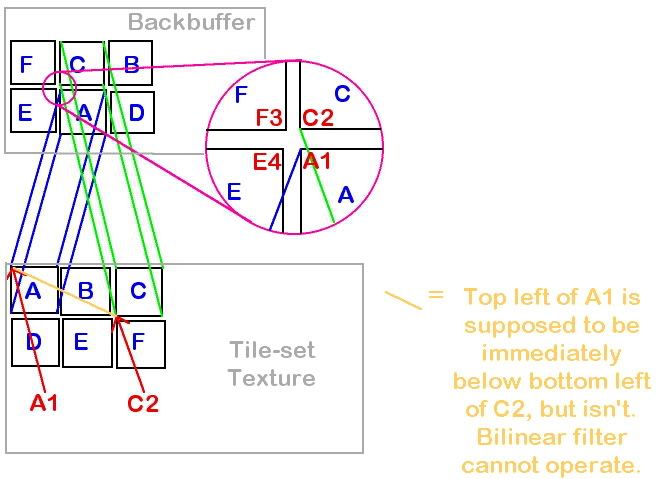

A diagram may be in order (above). To show tiles properly, you have to define the coordinates of all four corners of the tile. The only way to do that is to have four texture coordinates at each corner position where four tiles meet in the geometry, one for each tile. The only way to get four per position is to have *separate vertices*. Separate vertices mean they are not shared. This rules out the use of any kind of mesh. You have to draw separate quads, which means the texture filtering will stop at the edges of each tile instead of blending with its neighbors. If tiles A and C were to share a vertex, there would be no way to define the fact that the two tiles are located in different parts of the tileset texture. *IF* you were to try to share the vertex by giving it the coordinate of Tile A's corner, then you would see a very skewed image interpolated between the top corner of A and the bottom corner of C, as shown by the light orange line. This would display distorted and squished parts of various other tiles. It doesn't work. The only way to get perfect tile edges is either to draw the tiles to integer coordinates, then grab to a texture and redraw the texture at float coords, OR to use proper texture borders with polygon smoothing and draw the tiles to float coords, one quad at a time. |
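The skewed "light orange line" can be imitated in Python. The sketch interpolates UVs between two corners that belong to different tiles and records which tiles get sampled on the way. A 4x4 tileset stands in for the diagram, with tile 0's top-left corner and tile 10's bottom-right corner playing A and C. Both choices are assumptions, since the diagram's exact layout isn't given.

```python
def tile_at_uv(u, v, cols, rows):
    """Index of the tileset tile containing (u, v), row by row."""
    tx = min(int(u * cols), cols - 1)
    ty = min(int(v * rows), rows - 1)
    return ty * cols + tx

def tiles_crossed(uv_start, uv_end, cols, rows, steps=16):
    """Tiles sampled when UVs are interpolated linearly between two corners
    that belong to different tiles (samples taken at step midpoints)."""
    seen = []
    for i in range(steps):
        t = (i + 0.5) / steps
        u = uv_start[0] + (uv_end[0] - uv_start[0]) * t
        v = uv_start[1] + (uv_end[1] - uv_start[1]) * t
        idx = tile_at_uv(u, v, cols, rows)
        if idx not in seen:
            seen.append(idx)
    return seen
```

Going from tile 0 to tile 10 samples tile 5 on the way: a piece of an unrelated tile squeezed into the gap.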

| ||

| So what happens when you do something like... Pfffrrrt... had to do some reading on those 2f's.... now I see, I think.. 2f means no z axis vs 3f for adding a z axis... what happens when you do...

    ' first quad
    glTexCoord2f(0.0,0.0)
    glVertex2f(50,50)   'top left corner
    glTexCoord2f(0.0,0.5)
    glVertex2f(50,100)  'bottom left corner
    glTexCoord2f(0.5,0.5)
    glVertex2f(100,100) 'bottom right corner
    glTexCoord2f(0.5,0.0)
    glVertex2f(100,50)  'top right corner
    ' second quad
    glTexCoord2f(0.0,0.0)
    glVertex2f(100,50)  'top left corner
    glTexCoord2f(0.0,0.5)
    glVertex2f(100,100) 'bottom left corner
    glTexCoord2f(0.5,0.5)
    glVertex2f(150,100) 'bottom right corner
    glTexCoord2f(0.5,0.0)
    glVertex2f(150,50)  'top right corner
    glEnd()

|

| ||

To do that, you must start with glBegin(GL_QUADS). With GL_QUADS, each set of 4 vertices defines the four corners of an individual, separate quad, which can be anywhere on the screen. You're just defining several of them one after the other. They are not connected together in any way. Even if the corner coordinates are exactly the same, so that the quads *appear* to be next to each other, they are still processed and drawn entirely separately. There is no sharing of vertices and no combined filtering at the edges. They would both have to use the same source texture, which could contain tiles, and those tiles could then appear anywhere onscreen, but they won't interact in any way. |
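Here is a Python sketch of the difference. It builds (x, y, u, v) lists in the order you'd feed them to GL_QUADS and to GL_QUAD_STRIP for a row of tiles. In the GL_QUADS list, the right corners of one tile and the left corners of the next sit at the same position but can carry different UVs. In the strip, each vertical edge is a single top/bottom pair, so there is one UV per edge and one texture stretched along the row. The function names are hypothetical.

```python
def quads_vertices(tile_uvs, y0, y1, tile_w):
    """GL_QUADS order: 4 vertices per tile (TL, BL, BR, TR), each tile with its own UVs."""
    out = []
    for i, (u0, v0, u1, v1) in enumerate(tile_uvs):
        x0, x1 = i * tile_w, (i + 1) * tile_w
        out += [(x0, y0, u0, v0), (x0, y1, u0, v1), (x1, y1, u1, v1), (x1, y0, u1, v0)]
    return out

def quad_strip_vertices(num_tiles, y0, y1, tile_w):
    """GL_QUAD_STRIP order: one (top, bottom) pair per vertical edge; inner edges
    are shared, so the texture is spread across the whole strip."""
    out = []
    for e in range(num_tiles + 1):
        u = e / num_tiles
        out += [(e * tile_w, y0, u, 0.0), (e * tile_w, y1, u, 1.0)]
    return out
```

Three tiles cost 12 vertices as separate quads but 8 as a strip. Only the separate quads can point each tile at a different part of the tileset.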

| ||

| Ya oops.. forgot it in the copy/paste lol. I came across GL_CLAMP_TO_EDGE on my travels... someone was talking about adjacent tiles spilling over at non-integer coords, and switching to GL_CLAMP_TO_EDGE worked for them...? There was also another discussion that seems relevant: "Do you use nearest or linear sampling? In nearest, there shouldn't be a problem; your code looks fine. In linear, however, you end up sampling at (texel_x, texel_y), (texel_x + 1, texel_y), (texel_x, texel_y + 1) and (texel_x + 1, texel_y + 1), and do a weighted average of those 4 samples. If texel_x or texel_y is right on the edge, you can actually end up sampling from the other edge if your wrap mode is GL_REPEAT, since texel_x + 1 (for example) crosses the edge and ends up on the other side." |
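The quoted explanation can be checked with a one-dimensional Python model of linear filtering. It uses the usual OpenGL convention that texel centres sit at (i + 0.5) / n. The mode strings stand in for GL_REPEAT and GL_CLAMP_TO_EDGE, which in OpenGL are set with glTexParameteri on GL_TEXTURE_WRAP_S/T. Everything else is an assumption of this sketch.

```python
import math

def wrap_index(i, n, mode):
    """Map a possibly out-of-range texel index back into the texture."""
    if mode == "repeat":
        return i % n                    # wraps to the opposite edge
    if mode == "clamp_to_edge":
        return max(0, min(i, n - 1))    # reuses the edge texel
    raise ValueError(mode)

def sample_linear_1d(texels, u, mode):
    """Linear filter at normalised coordinate u (one axis of bilinear filtering)."""
    n = len(texels)
    x = u * n - 0.5
    i0 = math.floor(x)
    frac = x - i0
    a = texels[wrap_index(i0, n, mode)]
    b = texels[wrap_index(i0 + 1, n, mode)]
    return (1 - frac) * a + frac * b
```

With texels `[10, 20, 30, 200]`, sampling at u = 1.0 gives 105.0 under repeat, half of it pulled from the opposite edge. Under clamp-to-edge it gives 200.0. Well inside the texture (u = 0.5) both modes agree on 25.0.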

| ||

| Look here: http://glprogramming.com/red/chapter09.html#name6 and then scroll down a couple of pages past the donut to "Repeating and Clamping Textures". It explains that when you specify a texture, you can decide whether texture coordinates outside of the normal 0-to-1.0 range cause a repeating/wraparound of texture coords, or whether they clamp to the edge of the texture, ie nothing above 1.0 is allowed. Max2D automatically uses clamping, which is why it does not support `repetition` as a feature. Suppose you switched repetition on, drew a quad with a texture map smaller than the quad, and told it to draw from 0,0 to 5,5 in texture coordinates. The texture would then repeat across the quad 5 times in each direction automatically. If you don't want that, then you clamp the edge, and the rightmost/bottommost texels will get streaked across the rest of the quad because all coords above 1 get clamped at 1. OpenGL has `extensions` which expand functionality. One of the extensions is the rectangular textures extension. It lets you have non-power-of-2 widths and heights for textures and comes with some new clamping modes: "Texture coordinates are clamped differently for rectangular textures. The r texture coordinate is wrapped as described above. When the texture target is TEXTURE_RECTANGLE_EXT, the s and t coordinates are wrapped as follows: CLAMP causes the s coordinate to be clamped to the range [0, ws]. CLAMP causes the t coordinate to be clamped to the range [0, hs]. CLAMP_TO_EDGE causes the s coordinate to be clamped to the range [0.5, ws-0.5]. CLAMP_TO_EDGE causes the t coordinate to be clamped to the range [0.5, hs - 0.5]." So basically, clamping to the edge keeps the coordinates half a texel inside the outer edge of the texture, so that bilinear filtering never blends with the texture border. The quoted text spells this out for rectangular (non-power-of-2) textures, which also do not have borders. 
I think the reason a person would see `spilling over of tiles` is that they are using a tileset texture containing multiple tiles. They are probably trying to draw one tile from the tileset, and it's either doing a `repeat` of texels from the leftmost tile on the texture, or picking up part of the texels from an adjacent tile. The reason it might do that is that, at the tile's edge, the bilinear filter takes part of its result from the texel half a pixel beyond the edge, which belongs to the adjacent tile. You need to back the tile's coordinates off by half a texel so that it stops doing that. But either way, if you're thinking that this could be used to make the bilinear filter blend tiles together, you're still running into the same issue. The tiles have to be next to each other on the texture already, not at random positions. And it all still has to be on one texture. There is not EVER going to be any bleeding or antialiasing or blending or merging or filtering between two separate textures. |
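Here are two small Python helpers for the half-texel business above, as a sketch. The first gives the range CLAMP_TO_EDGE clamps to: normalised for ordinary 2D textures, and in texels for the rectangle extension quoted above. The second pulls a tile's UV rectangle in by half a texel so the filter stops reaching into the neighbouring tile. The inset function assumes a tileset with no margins or spacing, and its name is hypothetical. As the post says, this only stops bleeding; it does not make separate tiles blend with each other.

```python
def clamp_to_edge_range(size, rectangle=False):
    """Coordinate range CLAMP_TO_EDGE clamps to along one axis of `size` texels."""
    if rectangle:
        return 0.5, size - 0.5                    # rectangle textures: texel units
    return 1 / (2 * size), 1 - 1 / (2 * size)     # normalised 2D textures

def inset_tile_uv(index, tile_w, tile_h, sheet_w, sheet_h):
    """UV rect of a tile pulled in by half a texel on every side."""
    cols = sheet_w // tile_w
    tx, ty = index % cols, index // cols
    u0 = (tx * tile_w + 0.5) / sheet_w
    v0 = (ty * tile_h + 0.5) / sheet_h
    u1 = ((tx + 1) * tile_w - 0.5) / sheet_w
    v1 = ((ty + 1) * tile_h - 0.5) / sheet_h
    return u0, v0, u1, v1
```

For a 64-texel texture the clamp range is [1/128, 127/128]. The second 16-pixel tile in a 64x64 sheet samples from u = 16.5/64 to 31.5/64 instead of 16/64 to 32/64.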

| ||

| ok ok then LOL, but all (?) post-process effect shaders are applied by first rendering your scene to a texture*, and then rendering that texture as a fullscreen quad using the post-processing shader (either to another texture if you intend to apply more post-process effects**, or to the backbuffer). * Framebuffer Object [FBO] on newer hardware, pbuffer (pixel buffer) on older hardware, or render to the backbuffer and copy to a texture on even older hardware. I didn't realize you could render directly to a texture... would that save the screen grab in your methods? |

| ||

| Yes you can, but you've got to use some more custom code to make it work. Frame Buffer Objects are pretty nice. You bind a texture to the FBO and then it's as if you are drawing directly onto the texture instead of the backbuffer. The only side effect is that you can't use the texture AS the backbuffer, so before you Flip the screen you first have to draw the texture to the backbuffer. So it does remove the need to grab to a texture, but it replaces it with the need to redraw the texture before flipping, i.e. no real speed gain, unless you're drawing to more than one texture and using them in your backbuffer scene. If you were going to grab the texture and redraw it anyway (like what Grey is doing, i.e. draw tiles at integer coords, grab them and redraw at float coords), then it would be faster, because you skip the grab and you were going to do a full redraw anyway. I just had a wild thought... something fairly easy to try... What if you first draw a screen full of tiles at integer coordinates, and then redraw the whole screen on top of it at float coordinates, just using normal separate quads? Would the edges now blend with the proper values from adjacent tiles that are already in the backbuffer? Or maybe if the tiles have a 1-pixel transparent border? |
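The thread's original goal was to show a roughly 320x240 scene at 200% with no filtering. With an FBO (or a grab), the last step is drawing that texture scaled up to the backbuffer; with filtering off (nearest sampling), each source pixel becomes a solid scale-by-scale block. Below is a minimal CPU-side sketch of that result, assuming a row-major list of pixel values and a whole-number scale. It only models what nearest sampling produces; it is not Max2D or OpenGL code.

```python
def upscale_nearest(src, src_w, src_h, scale):
    """Nearest-neighbour integer upscale of a row-major list of pixels.

    Every destination pixel copies the source pixel it falls inside, so no
    colors are blended and the chunky "retro" look is preserved.
    """
    dst_w, dst_h = src_w * scale, src_h * scale
    dst = []
    for y in range(dst_h):
        sy = y // scale                       # source row for this output row
        for x in range(dst_w):
            dst.append(src[sy * src_w + x // scale])
    return dst, dst_w, dst_h


# A 2x2 "scene" shown at 200% becomes a 4x4 image of 2x2 blocks.
pixels, w, h = upscale_nearest([1, 2, 3, 4], 2, 2, 2)
```

HUD text can then be drawn at 1:1 on top of the scaled image, as the original question asked.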

| ||

| I've heard that having a 1-pixel translucent tile will cause a blend; that wouldn't be hard to add to the tiles. I already have a tileset transforming function: it takes tilesets with an x,y margin, spacing, and grid lines and removes them... I could just as easily add a 1-pixel translucent border... TIME... I just need time *sniff* |
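A minimal sketch of the first step of such a transform: finding each tile's source rectangle in a sheet that has a margin around its outside and spacing between tiles. It assumes the same margin on opposite sides and equal horizontal and vertical spacing; `tile_rects` and its parameters are hypothetical names, not part of TileMax or any existing tool.

```python
def tile_rects(image_w, image_h, tile_w, tile_h, margin_x=0, margin_y=0, spacing=0):
    """Source rectangles (x, y, w, h) of every tile in a tileset, row by row.

    Assumes margin_x/margin_y apply to both opposite sides of the sheet and
    that `spacing` separates tiles both horizontally and vertically.
    """
    rects = []
    y = margin_y
    while y + tile_h <= image_h - margin_y:
        x = margin_x
        while x + tile_w <= image_w - margin_x:
            rects.append((x, y, tile_w, tile_h))
            x += tile_w + spacing          # skip the gap to the next column
        y += tile_h + spacing              # skip the gap to the next row
    return rects


# Two 16x16 tiles with a 1-pixel margin and 1-pixel spacing: a 35x18 sheet.
rects = tile_rects(35, 18, 16, 16, margin_x=1, margin_y=1, spacing=1)
```

Each rectangle can then be copied into a packed sheet, optionally with the extra 1-pixel border around it.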

| ||

| "I've heard that having a 1 pixel translucent tile will cause a blend" Doesn't that depend on the anti-aliasing method used by the video card? If you have higher anti-aliasing, you might need additional border pixels. And do keep in mind that adding a small border may have a big impact on the amount of video memory needed. For example, if you have images that were 64x64 pixels before, adding an extra border pixel around the image would make it 66x66. Internally BlitzMax stores all images in video memory at the next power-of-two dimensions up, which means that the image will take up 128x128 pixels in video memory: 4 times as much memory. Not that big a deal, but do keep in mind the effect this might have on low-end systems: if you are using a lot of graphics in your game, you might push your memory requirements too high for it to work properly on those machines. It does add up. |
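The memory arithmetic above, sketched under the post's assumption that every image is padded up to power-of-two dimensions (hardware with non-power-of-two texture support may not do this). `max_content_size` covers the workaround suggested in the thread: keep the image plus its 1-pixel border inside the original power of two.

```python
def next_pow2(n):
    """Smallest power of two >= n (for n >= 1)."""
    p = 1
    while p < n:
        p *= 2
    return p


def padded_texture_cost(w, h, border=1):
    """Padded texture size after adding `border` pixels on every side,
    plus the memory factor relative to the unbordered image's padded size."""
    tw = next_pow2(w + 2 * border)
    th = next_pow2(h + 2 * border)
    base = next_pow2(w) * next_pow2(h)
    return tw, th, (tw * th) / base


def max_content_size(pow2_size, border=1):
    """Largest tile edge that still fits in pow2_size texels with its border."""
    return pow2_size - 2 * border


cost_64 = padded_texture_cost(64, 64)   # 66x66 rounds up to 128x128: 4x the memory
cost_62 = padded_texture_cost(62, 62)   # 64x64 with the border: no extra cost
```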

| ||

| I didn't really like the idea either lol... short term excitement that I had the code to do it lol |

| ||

| What if you first draw a screen full of tiles at integer coordinates, and then redraw the whole screen on top of it at float coordinates? |

| ||

| "For example, if you have images that were 64x64 pixels before, adding an extra border pixel around the image would then make it 66x66" I just make sure my sprites come in under power-of-2 dimensions INCLUDING the 1-pixel border. |

| ||

| Here is proof that my wild idea works... Basically it draws all tiles at integer coords first in SOLIDBLEND with a slight offset of 2 pixels, then draws the same tiles at float coords right on top of them, without the offset, in ALPHABLEND. Nothing else needed: no polygon smoothing, no texture grabbing. No artefacts. :-) The KEY is that you MUST have a 1-pixel border around each tile image, and that border MUST contain duplicates of the pixels from the *inside* of the tile, but with an alpha value of 0. You can't just have black as the RGB value and 0 as the alpha value. When I first tried this I added a simple empty canvas extension around the tile, which I presumed meant the alpha was 0 and that the color of those pixels didn't matter. But it DOES matter. What I discovered is that the hardware does a bilinear filter *first* between the tile pixel and the raw color value of the edge pixel (i.e. 0 if it's black, regardless of the alpha). This produces a mid gray if the tile is white. Then it blends the result (gray) with the background based on coverage. I was not expecting that! So to overcome it, each border pixel has to be a copy of the adjacent edge pixel of the tile (taken from the same tile). For the four corner pixels of the border, I just copied the corner pixels of the tile image. The alpha still has to be 0, but the RGB must be a valid color. (Actually, in the above demo I didn't use images, I just generated pixels, so the values of the border pixels are close but not exact copies, but it looks okay for an easy demo.) This makes it first bilinear filter between the real tile's edge pixels and copies of themselves (which cancels out the color shift from the filter), and only then blend with the background. Produces a perfect antialiased edge! I think "filter first, blend with background second" explains, Grey, why you were getting gray pixels in between tiles.
The gray comes from the fact that your tile is surrounded by black pixels, i.e. $000000, with $00 as alpha. The bilinear filter takes into account the RGB values of the border and NOT what the RGB would be after alphablending! So if you put realistic pixel color values into the border and set the border's alpha values to 0, it blends right! A couple of downsides to this technique: a) it requires drawing all tiles twice; b) you have to set up the tiles with the borders yourself, which may reduce your tile size to something like 62x62 if you don't want the 4x memory usage; c) it's not too easy to do multiple layers in your tilemap, because you'd need to draw the integer set of tiles in ALPHABLEND and then you'd be alphablending over alphablending, which maybe requires some kind of weird workaround? Or who knows, maybe layers work okay :-D Note that when scrolling relatively slowly, no matter what kind of antialiasing/float-coord technique you use, tiles that partially cover pixels can only show their amount of overlap as a fade of the total pixel color. This fade makes edges look slightly blurred. But that's totally normal: it's a side effect of not having enough pixel resolution, and there's nothing you can do about it other than run at a higher screen resolution. |
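A sketch of the border preparation described above, assuming tiles are row-major lists of straight (non-premultiplied) RGBA tuples: each border pixel copies the nearest edge pixel of the tile (so the corners copy the tile's corners) and gets alpha 0. `lerp_rgba` models one step of the bilinear filter on straight RGBA and reproduces the gray fringe the post describes when the border is black. Both function names are hypothetical.

```python
def add_alpha0_border(pixels, w, h):
    """Surround a w*h RGBA tile with a 1-pixel ring copied from its edge pixels, alpha 0.

    pixels is a row-major list of (r, g, b, a) tuples.
    Returns (new_pixels, w + 2, h + 2).
    """
    out = []
    for y in range(-1, h + 1):
        sy = min(max(y, 0), h - 1)          # nearest real row
        for x in range(-1, w + 1):
            sx = min(max(x, 0), w - 1)      # nearest real column
            r, g, b, a = pixels[sy * w + sx]
            inside = 0 <= x < w and 0 <= y < h
            out.append((r, g, b, a if inside else 0))
    return out, w + 2, h + 2


def lerp_rgba(p, q, t):
    """Straight (non-premultiplied) linear blend between two texels, as a bilinear filter does."""
    return tuple(pc * (1 - t) + qc * t for pc, qc in zip(p, q))


white = (255, 255, 255, 255)
print(lerp_rgba(white, (0, 0, 0, 0), 0.5))        # (127.5, 127.5, 127.5, 127.5): gray at half alpha
print(lerp_rgba(white, (255, 255, 255, 0), 0.5))  # (255.0, 255.0, 255.0, 127.5): white at half alpha
```

Only the second result blends with the background the way you'd expect, which is why the border's RGB has to match the tile.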

| ||

| Fascinating, it does appear to work! Good stuff. What about when you zoom in? |

| ||

| Mmm... good point. The first set of tiles has to be drawn at integer coords and the edges must lie on integer coords, soooo.. um.... if you wanted to zoom, you could either: a) draw at integer coords, draw the float-coord ones on top, grab it to a texture, and redraw that texture zoomed; or b) draw at integer coords at 2x resolution, grab into 4 textures or 4 parts of a large texture, then redraw that texture zoomed. You can't use the projection matrix for drawing the tiles except at integer zoom factors, but you could use it if you grab and redraw. You could zoom x2, x3, x4, x5 etc. so long as it's a whole amount, so that the tile edges land on integer boundaries. You couldn't draw the tiles at, say, a zoom of 1.4. But after a grab, or a render to texture, you could. Notice in your previous example, Grey, that if you were to draw at integer coords, grab to a texture and then draw it at a fractional coord, you might have a gap at the trailing edges of the screen because there are no pixels in the texture to put there... a slight problem. With this new technique you don't have that problem, because the edge is already filled with pixels from the first tile pass. I suppose you could draw some extra tiles or something to fill the gap in the old method? Or grab to a larger texture, or draw a 1-pixel black border around the edge of the screen after drawing the grabbed image. |
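Both the integer-zoom rule and the trailing-edge gap can be checked numerically. This sketch assumes horizontal scrolling only, a scroll position measured in unzoomed world pixels, and hypothetical function names. `tiles_needed` gives the number of tile columns to draw so that a partially scrolled view is always covered (the "draw some extra tiles" fix).

```python
import math


def edges_on_pixel_grid(tile_size, zoom, scroll_x, tiles=8):
    """True if every tile edge lands on a whole screen pixel for this zoom and scroll."""
    for i in range(tiles + 1):
        edge = i * tile_size * zoom - scroll_x * zoom
        if not math.isclose(edge, round(edge), abs_tol=1e-9):
            return False
    return True


def tiles_needed(screen_w, tile_size, zoom):
    """Columns to draw so a scrolled view never leaves a gap at the trailing edge.

    The +1 covers the partly visible tile that appears once the scroll offset
    is not a whole multiple of the tile size.
    """
    return math.ceil(screen_w / (tile_size * zoom)) + 1


ok_x2 = edges_on_pixel_grid(16, 2, 3)      # whole zoom, whole scroll: edges stay on pixels
ok_x14 = edges_on_pixel_grid(16, 1.4, 0)   # 16 * 1.4 = 22.4: edges fall between pixels
```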

| ||

| Yeah old skool is having a black border round the game that is the same width as the max scroll speed :-) |

| ||

| Cripes, lol. Well you gotta do what you gotta do. Now we just gotta find some use for all this magic. |

| ||

| Wow, nice workaround! I'm currently working on a simple, basic tile engine to run SNES Zelda-like games... and I had given up hope on that artifact problem... but at least now you have a partial solution to it.. :) |

| ||

| I'm definitely going to be able to utilize these findings in TileMax... I'm actually considering releasing the code... it's just that there is NO documentation, it's NOT finished, and it's NOT well commented (although I use explanatory variable/type names). Honestly, I think I need to go back to 3D... I'm not an artist but I can build a half-decent model and animate it... not so good with 2D graphics, other than pushing pre-drawn stuff around ;) |

| ||

| Do what you love. :-) |

| ||

| Publicly? lol |

| ||

| Do what you love. :-) The inside of my "life is good" cap says "Do what you like, like what you do". |

| ||

| You're so positive, Grey ;-) |

| ||

| Thanks. Well I look at it as that whilst we are alive, we have a choice: enjoy it or feel crap. I'd prefer to enjoy it personally, although clearly not everyone thinks the same as me (or doesn't yet know that it *is* possible to enjoy it). |

| ||

| 100% agree... I'd rather have smile lines than grumpy ones lol All too often people focus on the negatives... the problem with that is that opportunity passes them by because they are not looking for the positives... which is where opportunity comes from (usually). |

| ||

| Did you ever notice that if you choose to be grateful first, even in the seeming absence of things to be grateful for, that things come along to match your gratitude? |

| ||

| No not really TBH... but being positive will generally cause those around you to be more positive through transference... problem is that negativity is more powerful... so one grumpy old sod can ruin the fun |

| ||

| BAH HUMBUG AND FIDDLESTICKS. |

| ||

| LOL... do you have to use such foul language ;) |

| ||

| Did you ever notice that if you choose to be grateful first, even in the seeming absence of things to be grateful for, that things come along to match your gratitude? Yep I sure did, and I use it all the time. |

| ||

| I always try to be gracious in spirit, bestowing on those I don't know a positive energy, so to speak. But, sadly, not always. I've been all over the world, including Spain, the Gulf, China, Japan, and even places like Port Said and Ethiopia, during my 14 years in the Canadian military. So I most definitely am grateful for being born in Canada and living the life that I do. But my perspectives creep closer to home over time, and those distant visions get clouded by immediate problems. It's easy to slide into this zone when you are fatigued, feeling a little beaten down, etc... The important thing is to pull your socks up when you get the time to appreciate what you have, and who you have around you. Last note: wow, has this thread slipped from its topic LOL |

| ||

| :-) Hey, at least it's one of very few threads where people's egos aren't battling with each other. See how cooperative we can be :-) |

| ||

| Wise words _Skully. Yes I feel the mind is like a garden. Weeds grow naturally and you have to pull them out and replace them with flowers. It's an ongoing process that you have to keep working at, but the rewards are fantastic. |

| ||

| That's a great analogy, Grey Alien. I'm picturing fractal weeds and flowers wrapped in the neurons of the mind... weeds growing from negative thoughts and flowers from positive ones... the weeds wrapping around the positive thoughts like vines, choking them off... Uh oh... game idea... must write down or suppress ;) |

| ||

| In OpenGL 1.2 (or via extensions) there is the edge texture clamping thing, which supposedly *might* stop a black border from being blended into the tile's edge pixels... but I'm not sure if it would fix the edge jumping thing.. "Texture Coordinate Edge Clamping addresses a problem with filtering at the edges of textures. When you select GL_CLAMP as your texture wrap mode and use a linear filtering mode, the border will get sampled along with edge texels. Texture coordinate edge clamping causes only the texels which are actually part of the texture to be sampled. This corresponds to the SGIS_texture_edge_clamp extension (which normally shows up as EXT_texture_edge_clamp in the GL_EXTENSIONS string). " |
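To make the quoted wrap modes concrete, here is a sketch of where a normalized texture coordinate ends up under each mode for an ordinary texture N texels wide, modelled on GL_REPEAT, GL_CLAMP and GL_CLAMP_TO_EDGE as the OpenGL specification describes them. It only computes coordinates and does not call OpenGL. The rectangle-texture rule quoted earlier in the thread, [0.5, ws - 0.5], is the same clamp-to-edge limit expressed in texel units.

```python
import math


def wrap_coord(s, n, mode):
    """Effective normalized coordinate after a wrap mode, for a texture n texels wide.

    mode is "repeat", "clamp" or "clamp_to_edge" (modelled on GL_REPEAT,
    GL_CLAMP and GL_CLAMP_TO_EDGE for ordinary normalized textures).
    """
    if mode == "repeat":
        return s - math.floor(s)                  # keep only the fractional part
    if mode == "clamp":
        # Reaches 0.0/1.0 exactly, so a linear filter can still mix in border texels.
        return min(max(s, 0.0), 1.0)
    if mode == "clamp_to_edge":
        half = 1.0 / (2 * n)                      # half a texel in normalized units
        # Never closer to the edge than the outermost texel centre.
        return min(max(s, half), 1.0 - half)
    raise ValueError(f"unknown wrap mode: {mode}")


repeated = wrap_coord(2.25, 4, "repeat")          # 0.25
clamped = wrap_coord(1.5, 4, "clamp")             # 1.0
edge_clamped = wrap_coord(1.5, 4, "clamp_to_edge")  # 0.875, the centre of the last texel
```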

| ||

| I think therein lies the problem... the OpenGL implementation in Max2D doesn't use extensions (yet?) |

| ||

| Lol - make a mesh with shared vertices - honest guv! STOP thinking 2D when you are dealing with a 3D rendering engine. |

| ||

| Lol - make a mesh with shared vertices - honest guv! STOP thinking 2D rendering when you are dealing with a 3D rendering engine. DON'T waste vertices or texture space. |
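A sketch of what a shared-vertex tile grid looks like: one vertex per grid corner and six indices (two triangles) per cell, instead of four separate vertices per quad. `grid_mesh` is a hypothetical helper that builds positions and indices only. One caveat: a shared vertex can carry only one texture coordinate, so this layout fits when neighbouring cells sample a continuous texture. With tiles picked from arbitrary spots in a tileset, neighbouring cells need different UVs at the same corner, which is the situation this thread is about.

```python
def grid_mesh(cols, rows, tile_size):
    """Shared-vertex grid: (cols+1)*(rows+1) vertices, two triangles per cell."""
    vertices = [(x * tile_size, y * tile_size)
                for y in range(rows + 1) for x in range(cols + 1)]
    stride = cols + 1                           # vertices per row
    indices = []
    for y in range(rows):
        for x in range(cols):
            tl = y * stride + x                 # top-left corner of this cell
            tr, bl, br = tl + 1, tl + stride, tl + stride + 1
            indices += [tl, bl, tr, tr, bl, br]
    return vertices, indices


# A 20x15 screen of 16-pixel tiles.
verts, idx = grid_mesh(20, 15, 16)
```

That is 336 shared vertices, compared with 20 * 15 * 4 = 1200 vertices for separately drawn quads.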

| ||

| Did you read the thread? lol |

| ||

| I did for a bit - my bad. I read from the bottom up and the same questions came up. I'll go hide again - sorry. |

| ||

| I didn't read this whole thread, but I found a little trick that's not perfect but looks pretty good... what you do is pull in the edges of the texture just a bit. .002 pixels seems to work well. Use the cursor keys to move, highlighting the issue. Press c to fix. |
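A sketch of applying that inset when computing a tile's texture coordinates in a tileset. The post doesn't say whether .002 is in pixels (texels) or normalized units. This sketch assumes texels and converts them to normalized coordinates, and `tile_uvs` is a hypothetical helper. An inset this small mainly keeps coordinates from landing exactly on a tile boundary. Under a linear filter, a sample near the edge still mixes in the neighbouring texel unless the inset approaches half a texel.

```python
def tile_uvs(col, row, tile_w, tile_h, tex_w, tex_h, inset_texels=0.002):
    """Normalized (u0, v0, u1, v1) for one tile in a tileset texture.

    Each edge is pulled inward by inset_texels (assumed to be in texels).
    """
    u0 = (col * tile_w + inset_texels) / tex_w
    v0 = (row * tile_h + inset_texels) / tex_h
    u1 = ((col + 1) * tile_w - inset_texels) / tex_w
    v1 = ((row + 1) * tile_h - inset_texels) / tex_h
    return u0, v0, u1, v1


# Second tile in the first row of a 64x64 sheet of 16x16 tiles.
plain = tile_uvs(1, 0, 16, 16, 64, 64, inset_texels=0)
nudged = tile_uvs(1, 0, 16, 16, 64, 64)              # the .002 trick
half_texel = tile_uvs(1, 0, 16, 16, 64, 64, 0.5)     # the half-texel inset
```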

| ||

| very interesting hotfix :) |

| ||

| dmaz: Interesting "fix". It certainly stops the weird gaps appearing between tiles when moving and scaling but when the fix is on the tile joins wiggle a lot. We managed to eliminate that wiggling with some of the code higher up but it required drawing everything onto a texture in VRAM first. |

| ||