Please test my Jitter Correction timing


| ||

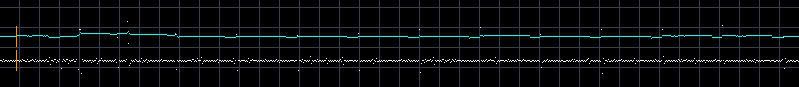

| ***[EDIT]*** New version uploaded. It lets you switch between DirectX and OpenGL, displays the current FPS and slowest FPS, and full-screen is now fixed to 60Hz.

Hi, inspired by recent threads by Amon and HrdNutz (and a recent post by sswift), I've been working on my framework timing code. I've added some jitter correction code which takes an average of the last 50 frames to smooth out minor jitters (and it works). By jitter, I mean the occasional minor shaking of moving sprites caused by the "moire" effect of the integer-based Millisecs() counter. As a bonus, if your system has background tasks that interfere, you'll still see a jerk, but it won't be as bad because the timing code no longer "catches up" all in one go. It catches up over the next 50 frames, resulting in a smaller jerk.

Please test it for me (PC only) and list system specs if you feel like being helpful ;-)

http://www.greyaliengames.com/misc/jitter.zip (631k)

*** If anyone wants to show me a graph and doesn't have webspace, then just email it to me. Click my profile and use that email address... ***

A few things to note:

- The top set of sprites do not have any timing code. They move by exactly 2 pixels per frame, and thus will appear extremely smooth ("arcade smooth") BUT if you turn VSync off with V they'll go mega fast. Also, if you go into windowed mode and your desktop runs at a faster refresh rate than full-screen mode (my windowed mode is 85Hz), the sprites will move faster. Smooth as it may appear, this sort of "no timing" method is not suitable for commercial games.
- The second and third sets of sprites, and the UFO, move using my special timing code which is running at 120Hz. My code now also has jitter correction.
- The 2nd/3rd set of sprites move at 1 pixel per logic frame (120Hz), although you can change the speed with + and -. Because 120Hz is double full-screen 60Hz (and windowed mode 60Hz on many laptops/TFTs) it should look pretty smooth, but with the occasional consistent glitch due to millisecs rounding every second or so.
- If you change the speed to something other than 1, 0.5, 1.5 or 2, you'll see the sprites jittering a bit. This is because they are no longer moving by a whole number of pixels per frame. This is unavoidable if you want to have things moving at say 1.3 pixels per frame (which you will do at some point!).
- When the speed is not 1.0, note how the ovals moving at integer coords appear more jerky than the ovals moving at floating point coords. This is because Bmax is able to draw ovals (and sprites) at sub-pixel positions. However, the vertical line drawn at floating point coords is still jerky because DrawLine obviously doesn't have sub-pixel support.
- If you switch Jitter Correction off with J (and the speed is 1.0), you may see some weird minor "jitters" occasionally.
- V will switch off vsync. Aside from a bit of vertical tearing occasionally, the timed sprites will move at the same speed.
- F will toggle full-screen on/off. What looks smooth in full-screen may not in windowed mode if your desktop Hz is not 60Hz (e.g. if you have a CRT not a TFT).

***graphs***

- Note how my graph is totally smooth. Is yours? If not, you have background tasks interfering with BMax and will get jerks. However, it is NORMAL for the graph to be a bit unsteady just after the app loads (or when you change from full-screen to windowed mode), but hopefully it should be steady after one pass if your system is well optimised.
- Finally, if you are not totally "techied" out by the above, please check out the graphs at the bottom. The white line is the time in millisecs between each frame. Most of the time it should move up or down by one pixel (e.g. 16/17 in full-screen mode at 60Hz). If you see this move by more than one pixel then a background task has interfered! You may see ALL the sprites jerk at the same time.
- The blue line is the averaged, jitter corrected, number of logic ticks per frame (floating point value). If you turn off jitter correction with J, the blue line becomes rough and any jerks caused by the system will appear worse for the sprites with timing than for the no-timing sprites at the top.
- You can simulate a background task interfering by a) pressing D for a 50ms Delay, or by b) clicking the title bar in windowed mode.

Oh, as well as my main PC, I've tested this on a Macbook Pro and a laptop with a crappy video card. One of the important things about the test was to see if any big jerks were caused by my timing code (as was implied by some other people, but which I was sure wasn't the case) or if the jerks affected ALL sprites including those with no timing, which proves to be the case! It is true that jerks were magnified by my previous timing code, but they are now smoothed out by the new jitter correction code.

Hope you find this app and thread useful in explaining some things about timing, no timing, integer/floating point coords, vsync, jerks, jittering etc :-) Because I'm practically jittering from sitting at my PC all day sorting this out... |
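The averaging idea described in the post can be sketched roughly as below. This is not the framework's actual code, which isn't shown in the thread: `TDeltaBuffer`, its fields and `LOGIC_HZ` are hypothetical names, and the 16/17 ms input simply imitates integer MilliSecs() deltas at 60Hz.

```blitzmax
SuperStrict

' Hypothetical ring buffer holding the most recent frame deltas (in ms).
Type TDeltaBuffer
	Field samples:Float[]
	Field count:Int   ' how many slots are filled so far
	Field index:Int   ' next slot to overwrite

	Function Create:TDeltaBuffer(size:Int)
		Local b:TDeltaBuffer = New TDeltaBuffer
		b.samples = New Float[size]
		Return b
	End Function

	Method Add(ms:Float)
		samples[index] = ms
		index = (index + 1) Mod samples.Length
		If count < samples.Length Then count :+ 1
	End Method

	Method Average:Float()
		If count = 0 Then Return 0
		Local total:Float = 0
		For Local i:Int = 0 Until count
			total :+ samples[i]
		Next
		Return total / count
	End Method
End Type

Const LOGIC_HZ:Float = 120.0   ' logic rate quoted in the post
Local buf:TDeltaBuffer = TDeltaBuffer.Create(50)

' Feed in integer deltas that flip between 16 and 17 ms, as at 60Hz.
For Local frame:Int = 0 Until 50
	If frame Mod 3 = 0 Then buf.Add(16) Else buf.Add(17)
Next

' Averaged logic ticks per rendered frame (the "blue line" value).
Local ticks:Float = buf.Average() * LOGIC_HZ / 1000.0
Print "average delta ms: " + buf.Average()
Print "logic ticks per frame: " + ticks
```

Because a spike only contributes one fiftieth of the average while it sits in the buffer, its extra time is handed out over the following 50 frames instead of in one jump.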

| ||

| - I have an AMD Athlon 64 X2 Dual-Core 4600+ processor, two gigs of RAM and an NVIDIA GeForce 7900GS.
- The non-timed sprite was smooth at all times, as far as I could tell.
- The Timed+Integer sprite had minor jiggling problems which seemed to be cured by having the jitter correction on.
- The Timed+Float sprites never seemed to have any visual jitter at all, no matter what I did with jitter correction.
- My white line was never smooth, but my blue line was, as long as jitter correction was on. The white line was up-and-down one or two pixels at a time on every single update.

I did have approximately 6,000 things open in the background while I was running the tests. The above all held true even when I cranked the speed way up.

While I'm thinking about it, please consider separating the KeyHit() and KeyDown() arrays in the next version of your framework. I have run into frustration several times when it would have been useful to be able to check if a key has been freshly hit without clearing its state. I can't even work around this by setting Keys[WHATEVER_KEY] back to 1 after using KeyHit(), because then it triggers KeyHit() again the next frame.

SpaceAce |

| ||

| SpaceAce: Glad to hear it worked well on your PC. Yeah, the white line will jiggle by 1-2 pixels like in my screenshot, but the blue line should be smooth with JC on. Thanks for testing. Regarding adding a KeyState array, thanks for the suggestion. I've put it on the to-do list. When I first coded this I wondered if anyone would want that, guess I shoulda done it then ;-) I have to think carefully about whether it will break anyone's existing code, but hopefully it won't. Meanwhile you can always query the array directly with Keys[WHATEVER_KEY] instead of using KeyHit and then decide if you want to clear it yourself or not. |

| ||

| SpaceAce: Just added a KeyStates[] array for you. Tested existing code and nothing seems broke. |

| ||

| This works fine on my system. Do we get it as an update for the framework or as an update to the current exampleTiming? Is it for sale? Or will you make the code freely available? |

| ||

| You'll get it with the next framework upgrade :-) I'll send you it early if you like. |

| ||

| Yeah, I'd like to mess with it now. Send away :) |

| ||

| Jitter thing works on my system Grey, jittered a bit without it on especially in windowed mode. Can't wait for the framework update. specs - athlon xp2500, 1gb ram, geforce 6600gt. Mike |

| ||

| "Meanwhile you can always query the array directly with Keys[WHATEVER_KEY] instead of using KeyHit and then decide if you want to clear it yourself or not."

The problems I am having wouldn't be solved by doing that. I want to be able to tell the difference between a hit and a hold. In short, I just want to get the same behavior I do from the built-in BRL KeyHit() and KeyDown() functions, where the click is registered once then KeyHit() is false and KeyDown() is true as long as the key is held. I guess I could track this manually by doing something like this:

If (KeyDown(KEY_G) And Not KeyDownLastFrame[KEY_G])

' The key has been clicked since the last frame, so do something

End If

KeyDownLastFrame[KEY_G] = Keys[KEY_G]

However, I think tracking them in separate arrays should have the same effect.

"SpaceAce: Just added a KeyStates[] array for you. Tested existing code and nothing seems broke"

Cool, is this linked to KeyHit() now or is it just an array for direct access?

SpaceAce |

| ||

| No problems here. Non-timed sprite and the UFO do not appear to be jittery at all. Timed integer sprite showed a very minute amount of jitter only at 1.0 and up to 1.3 speed. After that I was not able to see any jitter. Intel Core2Duo at 2.4 GHz, 2 GB RAM, ATI X1950XTX (512 MB) Barney |

| ||

| Jitter only happened when I turned both jitter correction and vertical sync off. Intel Celeron M 1.4 GHz, Intel 915 GM/GMS, 910GML graphics card |

| ||

| Great thanks for testing everyone! Glad to see it working on a variety of gfx cards and CPUs too! SpaceAce: replied to your question via email. Basically yeah it's done. |

| ||

| Sorry grey, it's jittering a LOT on my pc :( Just kidding :) |

| ||

| Grey: about every 2 seconds there is a noticeable jump or hiccup on both my computers. my nvidia 8800 machine has both lines smooth with no deviation (even when there is the hiccup), while my ati 850 has a lot running in the background so the blue line has about 4 bumps on it every time through. it doesn't seem to happen as often in windowed mode. also, when the jump occurs there is no noticeable effect on the shape of the lines, but everything, including the lines, does the jump. the anti-jitter looks great though. |

| ||

| Works good for me :P even though i notice little difference, the line is definitely smoother and very consistent. Grats man :D |

| ||

| Lowcs: haha nearly got me.

Dmaz: Thanks for testing! When you see the jump, does it occur to the top set of sprites too, which have no timing? If it occurs to ALL sprites then it's something on the PC interfering with Bmax, but it would be weird for that to be on both PCs. If it only occurs to the timed sprites and UFO then it's my timing code (I don't mean a bug, I mean a natural rounding error sort of thing). If it glitches by a single pixel every second or so and it's regular then it's this:

"Because 120Hz is double full-screen 60Hz (and windowed mode 60Hz on many laptops/TFTs) it should look pretty smooth, but with the occasional consistent glitch due to millisecs rounding every second or so."

If the glitch is bigger than 1 pixel then I'm not sure what it is. The graphs at the bottom look smooth, right? Regarding windowed mode, are you running a TFT or CRT? This will affect the desktop Hz. Any further info?

HrdNutz: Great! Thanks for your inspiration. |

| ||

| I'm getting a jitter about every second. Just like the tests you had before. I just ran the DxDiag program. I get jitters on it when testing DirectX 7, but none with DX 8 or DX 9, so this must be a DirectX issue. I'm thinking I did upgrade my video drivers when I bought my new monitor, possibly the source of my problems. In case you don't remember, my system specs: AMD Athlon XP 2800+ 2.1GHz, NVidia GeForce FX 5200, Windows XP Pro SP2 |

| ||

| Everyone: new version uploaded, please check the "Edit" notes at the top of the original post. I've also made sure full-screen is 60Hz whereas it may not have been before. So please try it again and see if it's different.

TomToad: thanks for testing. Well, I uploaded a new version for you to test (if you would be so kind). You can change to OpenGL with G - are the jitters gone? Also it displays the current FPS on the screen. In full-screen, what is your Hz please? It may not have been running at 60Hz before but I've locked it to that.

When you say you are getting a jitter every second, is this on ALL sprites, even the no timing ones, or just the timing ones? How does the UFO look, does it jitter? What about in windowed mode (worse/better)? Do you notice the jitter if you alter the speed up or down a notch (so it's not 1)? How did the graph look? When you got a jitter, did the white graph have a pixel quite far out of place (indicating a background task interfering) or not? The blue one was probably smooth. Thanks. Sorry to ask so many questions, but I like detail :-)

When you say you ran DXDiag and ran with DX7, 8, and 9, how are you doing that exactly? Perhaps the jitter you are seeing is the DX Lag fix in action which is needed for DX7 to prevent render lag...? |

| ||

| Just tweaked HrdNutz's render tweening code to make a line and oval move at 1 pixel per frame (60 per second) and the screen refresh at 60Hz to see what it does. It has a jitter every second or so on my PC. When I say jitter, the line wobbles for a couple of frames then is OK. This is just rounding errors that you can't avoid. My code smooths out those jitters so there should only be a single jitter (one frame only) about every second or so. Is that what people are seeing and calling jitters or is it something bigger/worse? Thanx |

| ||

| I still get the jitter, oddly I've got the same GFX card as TomToad. My breakdown:

- FullScreen: Silky smooth, no jitter in all modes.
- Windowed: Jitter in all modes even when the graph shows smooth. After a couple of minutes the jitter correction looks like it makes things worse.

When I load the CPU (see below) the whole thing falls to bits and begins to react like fixed step timing. You should implement some error checking code that caps the current delta at +/- 10% of the average delta, thus preventing the cache filling up with 50ms frametimes :) |
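The suggested cap could look something like the sketch below. `ClampDelta` and its parameter names are hypothetical, and the 16.7 ms average is just an example value for 60Hz.

```blitzmax
SuperStrict

' Clamp a raw frame delta to within +/- tolerance of the running average.
' tolerance = 0.1 means 10%, as suggested in the post above.
Function ClampDelta:Float(rawMs:Float, averageMs:Float, tolerance:Float = 0.1)
	Local lowest:Float = averageMs * (1.0 - tolerance)
	Local highest:Float = averageMs * (1.0 + tolerance)
	Return Max(lowest, Min(rawMs, highest))
End Function

Print ClampDelta(50.0, 16.7)   ' a 50 ms spike is pulled down to about 18.4 ms
Print ClampDelta(16.0, 16.7)   ' a normal delta passes through unchanged
```

Whatever is clamped away is simply dropped, so game time falls behind wall-clock time by that amount each time a spike is capped.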

| ||

| It jitters on all sprites with both OpenGL and DirectX. However, I did notice that the jitters are diminished a bit with the jitter correction on as opposed to off, but it still jitters somewhat. Notice how the white line jumps at regular intervals? But then it drops way low for a frame after that. Also the blue line is a pixel high for quite some time after each jump. Fullscreen is running 59-60 fps.

As for DXDiag, when you go to the Display tab and run Test Direct3D, it draws a spinning cube three times, once each for DX7, DX8, and DX9. I see jitter at the same intervals on the DX7 test, but it's gone on the DX8 and DX9 tests. Also the Direct Draw test jitters; there's only a DX 7 test for that since DD was removed in DirectX 8.

I know it can't be a DX only issue because BMax used to work just fine. It changed somewhere within the last 3-6 months. The only changes I made were new monitor, new CPU, new video drivers, and updated BMax. I can't test the monitor because the old monitor is gone. My old CPU is lost so I can't test that. I already tried an older version of BMax and ruled that out. Now I just need to try downgrading my video drivers to see if I can rule that out. |

| ||

| I'll run the new test on my systems when I get home tonight... my hiccups do not look like Tom's as they are smooth even when the hiccup happens. I do use DVI LCD. |

| ||

| Just tried downgrading my drivers. Had no effect. My monitor is an LCD, but am using analog input. |

| ||

| I don't think it's your timing code Grey. With the DirectX driver everything was jerking quite noticeably, but when I switched to OpenGL I saw the smoothest movement I've ever seen in any game I've played. I mean I was really stunned by how smoothly the UFO was moving. All this time I thought background tasks were screwing up my games and it was the DX driver all along. Even the white line settled down under OpenGL. Under DirectX my lines look just like the ones TomToad posted above; under OpenGL they look like the ones in your screenshot. Actually my white line is even smoother than yours. I recently had to downgrade my graphics card to my old Radeon 7000 when my 9550 failed, but I have the latest Catalyst drivers installed. WinXP Home SP2: Athlon XP 1600, Radeon 7000 64MB, DirectX 9c |

| ||

| Wow, thanks all, some very interesting test results.

@Indiepath: yeah I already clamp to 100ms (you can see it on your white line in some places) and I ignore the first few values after the screen mode is changed, but I may need to clamp to values much closer to the average as you suggest. The thing is, I can't start using an average right at the beginning because the system has to settle, so I'll have to wait until the buffer is full first - but that's OK, I can sort that. Actually, at the moment, with the blue line going up and back down, it means that the sprite will move exactly the same distance over 1 second, even with all the CPU activity going on; this is intentional - it compensates over time rather than in one big jerk or not at all. But if I clamp it to within 10% of the average, then time will be lost and the sprite won't move as far over 1 second. Also any game timers (like 60 secs to complete a level) will run slow. swings and proverbials... Another thing, if someone overloads their CPU like you have then what are they doing anyway? They are obviously not properly playing the game, plus they wouldn't do it in full-screen mode.

@Tom Toad: Thanks for posting the graph. That makes it very clear. So you get regular jerks in OpenGL and DX! Yes, I've seen that one high frame and one low frame straight after before. It must be certain video cards or background tasks but I don't know what yet. I may try DX9 and see if that improves things. The blue line is supposed to go up a little bit for a while after the peak so that it maintains proper elapsed time in the game world. Without jitter correction, the blue line would shoot up then down and your jitters would look worse! (in fact you said that was the case). Hmm, I'd love to get to the bottom of this, but I'm not sure I can work magic with my code if something on the system is doing it. Gah!

@dmaz: Thanks, look forward to the results. Interesting that you are using DVI, as another forum member, Quicksilva, reported jerks since using that but said they weren't there when using an analogue cable. Do you have an analogue cable you could compare with?

@CGV: Thanks! Glad you thought it was smooth. So even though other people get the same jerking in DX and OpenGL, for you it was DX only, weird. Another interesting test result and glad you found out something useful. You see, on my PC it's smooth in BOTH drivers and full-screen/windowed mode, but sadly all PCs are not equal in their performance. |

| ||

| *** If anyone wants to show me a graph and doesn't have webspace, then just email it to me. Click my profile and use that email address... *** |

| ||

| You just need to keep those deltas as constant as possible; the driver implementation is not really in your hands. Given bad enough spikes, even averaged deltas will jump around. Your timing code needs to be smart (not just clamp numbers) - you need to treat possible spiky deltas before you feed them to the buffer - need to identify a DT as a spike or not :P

You can also possibly implement double buffering - one buffer that stores and averages deltas every frame, and one that adds the average value from the first buffer every time it fills up, then use the average from the second buffer as your DT. Or you could just simply give some time at the beginning (after things are loaded and started drawing, like a 'Get Ready' screen for a few seconds) to fill up the delta buffer, and store that average value to use for the rest of the 'level' execution (you would need to use the real delta for other screens). Just make sure you treat those spiky deltas with some extra logic and averaging should be sufficient. If you want to look into equalization and noise reduction algorithms you might achieve better results :P |
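One possible reading of the double buffering idea is sketched below. The post gives no sizes or names, so `STAGE_SIZE`, `raw`, `coarse`, `Mean` and `SmoothedDelta` are all assumptions made for illustration.

```blitzmax
SuperStrict

Const STAGE_SIZE:Int = 10   ' assumed size; the post does not give one

Global raw:Float[] = New Float[STAGE_SIZE]      ' first stage: raw deltas, one per frame
Global rawCount:Int = 0
Global coarse:Float[] = New Float[STAGE_SIZE]   ' second stage: averages of full first stages
Global coarseCount:Int = 0
Global coarseIndex:Int = 0

Function Mean:Float(values:Float[], count:Int)
	If count = 0 Then Return 0
	Local total:Float = 0
	For Local i:Int = 0 Until count
		total :+ values[i]
	Next
	Return total / count
End Function

' Feed one raw delta; returns the delta time to use for movement.
Function SmoothedDelta:Float(rawMs:Float)
	raw[rawCount] = rawMs
	rawCount :+ 1
	If rawCount = STAGE_SIZE
		' First stage is full: push its average into the second stage.
		coarse[coarseIndex] = Mean(raw, STAGE_SIZE)
		coarseIndex = (coarseIndex + 1) Mod STAGE_SIZE
		If coarseCount < STAGE_SIZE Then coarseCount :+ 1
		rawCount = 0
	EndIf
	' Until the second stage has data, fall back to the raw delta.
	If coarseCount = 0 Then Return rawMs
	Return Mean(coarse, coarseCount)
End Function

For Local i:Int = 1 To STAGE_SIZE
	SmoothedDelta(16.0)
Next
Print SmoothedDelta(50.0)   ' the spike is not used directly for this frame
```

In this arrangement a spike only reaches the returned delta after the first stage next fills up, and then only as part of an average of averages.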

| ||

| yeah I was thinking about a warm up cycle earlier today. Basically run a black screen for 10-20 frames to allow startup spikes (and troughs) to settle, then record for say 50 frames but not apply clamping, just use averaging. Then after 50 frames I would have some good data to use to see if a delta MS was out of the ordinary and then I could bring it back into range (or just use an average value instead). The double buffer idea is interesting. At the moment I add the ms delta to the buffer and then read the whole buffer for an average, but perhaps I should only add the *average* back to the buffer. This would mean that spikes wouldn't affect the average so much... I was looking into different drivers in case DX 9 helps to remove spikes. If so it may be worth me auto-switching to it to get stuff looking smoother on a wider range of PCs. It's a bit sad when someone has a great modern setup but they get bad jitters due to something outside the control of my code. Sometimes those people think the code/game is to blame, not their drivers/system setup, which is a shame. So HrdNutz, you've appeared on this forum very recently but seem to know a good amount about all this stuff, it's been very interesting communicating with you. What's your background? |
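A rough sketch of that warm-up cycle follows. The frame counts come from the post (10-20 frames to settle, 50 to sample); `SPIKE_FACTOR` and every identifier are assumptions, since the post doesn't say how far out of range a delta has to be.

```blitzmax
SuperStrict

Const SETTLE_FRAMES:Int = 20    ' frames ignored while the display settles
Const SAMPLE_FRAMES:Int = 50    ' frames averaged without any clamping
Const SPIKE_FACTOR:Float = 1.5  ' assumed threshold for "out of the ordinary"

Global framesSeen:Int = 0
Global total:Float = 0
Global baseline:Float = 0       ' stays 0 until the warm-up has finished

' Returns the delta the game should use for this frame.
Function FilterDelta:Float(rawMs:Float)
	framesSeen :+ 1
	If framesSeen <= SETTLE_FRAMES Then Return rawMs
	If framesSeen <= SETTLE_FRAMES + SAMPLE_FRAMES
		total :+ rawMs
		If framesSeen = SETTLE_FRAMES + SAMPLE_FRAMES Then baseline = total / SAMPLE_FRAMES
		Return rawMs
	EndIf
	' Warm-up done: swap an out-of-range delta for the baseline average.
	If rawMs > baseline * SPIKE_FACTOR Or rawMs < baseline / SPIKE_FACTOR Then Return baseline
	Return rawMs
End Function

For Local i:Int = 1 To 70
	FilterDelta(16.0)
Next
Print FilterDelta(50.0)   ' spike replaced by the 16 ms baseline
Print FilterDelta(17.0)   ' ordinary delta is kept
```

This version keeps the baseline fixed after the warm-up; it would need resetting after a screen mode change, when the refresh rate can differ.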

| ||

| Yes, double or triple buffering will help with smoothing out the delta. The only thing you want to keep in mind is that buffer size should be relative to time - a 50 frame buffer at 1000fps = .05 seconds, and at 60 fps is almost a whole second. You should also try equalizing the spikes within buffers: add your raw deltas to a buffer, find the average, loop through the same buffer again clamping anything that's above a certain % of that average, then recalculate the average of that buffer again - basically try to reject any big spiky deltas. Oh, and I have a computer science background, and hobby game development since I was little :P |
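To make the "buffer size relative to time" point concrete: 50 frames last 50 ms at 1000 fps but about 833 ms at 60 fps. One way to size the buffer by time instead is sketched below; `SamplesForWindow` and the 500 ms window are hypothetical choices, not something from the thread.

```blitzmax
SuperStrict

' Number of samples needed to cover windowMs of wall-clock time,
' given the current average frame delta in milliseconds.
Function SamplesForWindow:Int(windowMs:Float, averageDeltaMs:Float, maxSamples:Int)
	If averageDeltaMs <= 0 Then Return 1
	Local n:Int = Int(windowMs / averageDeltaMs)
	Return Max(1, Min(n, maxSamples))
End Function

Print SamplesForWindow(500, 1000.0 / 60.0, 1000)   ' at 60 fps: roughly 30 samples
Print SamplesForWindow(500, 1.0, 1000)             ' at 1000 fps: one sample per millisecond
```

The averaging code would then read only that many of the newest samples, so the smoothing covers about the same stretch of time whatever the frame rate.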

| ||

| I have found the source of my problems! It is with System Suite 7. I had upgraded it a few months ago and forgot all about it. Part of system suite is to monitor applications when they run and try to prevent crashes from happening. It seems to interfere with graphics programs by creating a short (~30ms) delay every second. By going to task manager and ending MXTask.exe process, the graphics now run smooth. So you might want to ask others who experience a glitch at regular intervals if they have System Suite on their system, or some other VCOM software such as Fix-it Utilities. Seems that System Suite is causing problems with a lot of programs lately. Might be time to find something else. |

| ||

| Another thing, if someone overloads their CPU like you have then what are they doing anyway? They are obviously not properly playing the game, plus they wouldn't do it in full-screen mode. unless it's an automated process like backup or virus checking. |

| ||

| TomToad: Hey, that's great you found out what the problem was! Well done.

Indiepath: good point. So the question is, should I clamp spikes for more consistent visual game speed and "lose time", or do what I'm currently doing, which is add the spikes into the buffer and let them be averaged out over the next batch of frames, thus not losing any time?

Still could be worth trying DX9. But I've already spent too long on this, need to get back on with my game! :-) |

| ||

| Probably a bit of both for best results. Something along these lines... not working code, just an idea:

' buff = array of ints to store deltas
' buffsize = size of the array
' code assumes that buff[] is already populated with deltas

' calculate average first
average = 0
For i = 0 To buffsize - 1
	average:+ buff[i]
Next
average:/ buffsize ' buffsize cannot be 0 (zero)

' equalize the spike a bit,
' probably should be done only when the buffer is full
For i = 0 To buffsize - 1
	' move anything above or below average closer by 1/3 distance from it
	vec = buff[i]+(average - buff[i]) / 3
	buff[i] = vec
Next

' recalculate the average again (spikes should be a bit better)
average = 0
For i = 0 To buffsize - 1
	average:+ buff[i]
Next
average:/ buffsize

Cheers, Dima |

| ||

| ok, the hiccup is still there... it happens in dx and opengl. it occurs no matter how smooth both lines are. (I stopped most processes on 2 different computers so hardly anything would be running.) I tried using a VGA cable instead of the DVI... still there. yes, the straight sprite without the timing code does it. I now tried it on more windows computers (3 diff video cards)... it does it even using this code:

Graphics 800,600,32,60
Local x:Int
While Not KeyDown(KEY_ESCAPE)
	Cls
	x :+ 4
	If x > 800 Then x = 0
	DrawOval x,50,32,32
	Flip
Wend

all of them so far have the problem. we'll see how this goes on my mac tonight.

the "hiccup" seems to be a displacement ahead of the motion and it seems to be the same distance as the motion. almost like you have a triple buffer and at one point (when flip is called) it accidentally shows the "last drawn buffer" instead of the second to last drawn buffer. but since it happens in opengl too I think we can rule that out. I was thinking it could be the LCD itself but now I've tried it on a VGA monitor with the same results (I think, that computer was underpowered so I might try that again).

ok, Blitz3D does it too... I swear when we went through this a year ago B3D didn't have this issue. aaaa! |

| ||

| hrdnutz: Cool. I could probably implement the spike smoothing pretty quickly but I don't really have time right now as I've got 200+ hours of coding to complete in 4.5 weeks. So I'll bookmark this thread and come back to it.

Dmaz: Thanks for testing again. I think the problem must be in your brain or eyes ;) kidding. It's good to know it happens to the normal sprite with no timing and in DX/GL as that proves it's not my timing code (as does your test app). What's crazy is you've tried it on 3+ PCs and it's still there, wow! Is there a common piece of software? Do they have a common brand of video card (Nvidia) or common CPU (dual core AMD) I wonder? I really doubt the mac will have the problem, it's totally smooth on my Macbook Pro although it does seem to have vertical tearing even with flip 1. I'll be asking BRL if this is a bug in due course when I totally convert the framework to Mac. Is the jitter you get regular? Is it worse with jitter correction off? TomToad found out what it was on his PC, maybe we'll be able to work out what it is on your PCs. You've been very thorough already though with VGA/DVI and TFT/CRT etc and stopping processes AND testing in B3D! |

| ||

| well, perfectly smooth on the mac. tested on the 2 pcs listed in my sig and 1 at work. I tested on both an LCD and a CRT. yes, the jitter is very regular.... once every 3 sec on my new computer and once every 4 on my old one. the jitter is there whether your jitter correction is on or off... both my machines show smooth lines in your test. no common hardware: amd/ati, intel/nvidia, intel/intel (onboard) |

| ||

| update... I have now confirmed that on both my computers, it's entirely dependent on what screen resolution the test is running at.

my new computer: (intel/nvidia)

'Graphics 640,480,32,60 'every 14s
'Graphics 720,480,32,60 'every 8s
'Graphics 720,576,32,60 'every 3s
'Graphics 800,600,32,60 'every 3s
'Graphics 848,480,32,60 'not sure
'Graphics 960,600,32,60 'no jitter
'Graphics 1024,768,32,60 'no jitter
'Graphics 1152,864,32,60 'no jitter
'Graphics 1280,960,32,60 'no jitter
'Graphics 1280,1024,32,60 'no jitter
'Graphics 1360,768,32,60 'no jitter
'Graphics 1600,1200,32,60 'no jitter

my old computer: (amd/ati)

'Graphics 400,300,32,60 'no jitter
'Graphics 512,384,32,60 'no jitter
'Graphics 640,480,32,60 'every 7s
'Graphics 720,480,32,60 'no jitter
'Graphics 720,576,32,60 'no jitter
'Graphics 800,600,32,60 'every 5s
'Graphics 848,480,32,60 'no jitter
'Graphics 960,720,32,60 'no jitter
'Graphics 1024,480,32,60 'no jitter
'Graphics 1024,640,32,60 'no jitter
'Graphics 1024,768,32,60 'every 12s
'Graphics 1152,864,32,60 'no jitter
'Graphics 1280,720,32,60 'no jitter
'Graphics 1280,768,32,60 'no jitter
'Graphics 1280,800,32,60 'no jitter
'Graphics 1280,960,32,60 'no jitter
'Graphics 1280,1024,32,60 'no jitter
'Graphics 1360,768,32,60 'no jitter
'Graphics 1600,1200,32,60 'no jitter

could it be my Dell 2001 LCD monitor? well, I also saw the problem at work on a newer samsung and a CRT...? |

| ||

| wow, very thorough, thanks Dmaz! :-) Hmm, the plot thickens. Must be down to how the cards draw and DirectX I guess, or did you also get the jitter in OpenGL? I can't remember. I see your PC stats are completely different. (nice PCs btw) Is the jitter a big jerk (like time was lost) or a tiny shift, say a pixel or so (rounding error)? |

| ||

| yes, I did get the jitter in opengl too. the jitter looks like the "hiccup" seems to be a displacement ahead of the motion and it seems to be the same distance as the motion. almost like you have a triple buffer and at one point it (when flip is called) it accidentally shows the "last drawn buffer" instead of the second to last drawn buffer. but since it happens in opengl too I think we can rule that out. so I don't think it's a rounding error... for some reason I feel like it's the lcd??? |

| ||

| Ah OK, thanks for clarification on the jitter. Yeah that sounds like what happens when a spike is caused by another process (although you are not seeing it on the graph). It can't be the TFT as you tested on CRT. Anyway, I'll just have to accept that totally smooth graphics are not possible on all PCs :-( |

| ||

| wow, I had similar debates and discussions about this years ago. The answer? There is no answer. Jitter is a fact of life on the PC, today's PCs more than ever. Years ago, you could pretty much guarantee your game would be the only app running, so it was actually easier to get consistent results across a variety of PCs. Today's PCs are bloated with background tasks like virus checkers, security alerts, auto feeds, internet connections, etc. This all competes with your app for cpu cycles. On the PC, DX8-9 do a much better job of giving your app cycles compared to DX7. The bottom line is a little jitter or hiccup here and there is really nothing to worry about. Typical end users, especially the "casual game" type, are not even remotely fazed by this. But get a few dozen tech heads examining a ball going back and forth and we start zooming in on the screen, looking for the slightest jitter and think "ah ha!! jitter..jitter!! I see jitter!!!" :) lol.. Grey's demo works great on all 3 of my test machines. Sure there's the occasional jitter, skip, hiccup, etc. But this is MY computer's fault, not BlitzMax or GA's code. :) |

| ||

| thanks MGE Developer. Yeah, I've done the best I can to smooth it all out, and if your PC is non-bloated it works great, otherwise it's the best that can be done with DX7. I was considering auto-switching to DX9 if it's available though... |

| ||

| Hi GA, if you use DX9 for graphics, wouldn't input still be handled via DX7? Mixing DX versions is never a good idea. But overall I wouldn't even worry about it. You sell a lot of games from your website; are you getting feedback from users of your BlitzMax games saying "it's too jittery"? I'd be shocked if you said it was a high number??? |

| ||

| I only get jitter feedback from forum members, never people who buy my games but then it may be that a certain % don't buy because they are jittery and never let me know. Although to be honest, jitter isn't an issue in casual games due to the nature of the graphics etc, it's more of an issue with arcade games that *should* look smooth like scrolling games or with moving enemies. |

| ||

| If you had a stable problem with your jitters you would hear about it from your game downloaders. ;) For me personally, I consider typical games like Pacman and Space Invaders to be casual type games as well. ;) One thing I have found in the past few days is that a lot of BlitzMax coders seem to develop with very high spec machines. And when they run their game on other end users' computers.... yep... you guessed it. Bullet Candy for instance is very popular with the forum and with end users, but runs very slowly on 2 of my machines. I'd say no faster than 5-10 fps. So if your game has hundreds (1000's??) of sprites, etc, running it on a bloated machine will only make the jitter factor worse. "I only get jitter feedback from forum members.." Yep, at first I was examining all the pixels in your demo, hunting for jitters and then I was like, "wtf am I doing? Any typical end user wouldn't even notice or care about this!!" :) lol... |

| ||

| Actually I've noticed a lot of casual games run at a low frame rate, say 30FPS. You can see this even in big name games like Hidden Expedition Everest. At such a low framerate jitters are disguised better. My aim you see was to run at a high FPS for lovely smooth effects, and on the right machine it's great, but on the wrong machine any jitter is more noticeable. Basically I wanted my framework to feel more "arcadey" so that my games have that extra level of smoothness and polish. Many people on Indiegamer commented on how smooth my last games were. |

| ||

| Yep, 30fps is pretty much the norm for the casual coders. Arcade quality movement isn't always about fast frame rates. A STABLE 20 or 30fps is usually preferred over faster frame rates that suffer from slow down or jitters. Achieving that "smooth as butter" look to your animation is really only stable on the better graphics cards. CPU isn't as important as GPU for most games. Note: The smaller the sprite, the more noticeable the jitter. Using bigger sprites you can get away with slower frame rates. Small sprites look amazing when they render at 1 vsync. ;) Classic games like Metal Slug for instance only run at 30fps on the SNK arcade hardware. A lot of PS3, Xbox games still suffer from slow down and run at 20-30fps occasionally. "My aim you see was to run at a high FPS for lovely smooth effects, and on the right machine it's great.." You've already achieved that on your end. The rest is up to the end user's machine specs. It all boils down to the hardware on the box. And we as devs can't control that. ;) |

| ||

| Yep, so I may consider putting a render limiter into my framework to lock it to 30 FPS at some point. I may also run the logic for my future games a lot slower than 200FPS, maybe 60FPS, and render at 30FPS. |

| ||

| I know a lot of coders swear by "logic at a fixed rate, render speed fluctuates" but I've never jumped on that bandwagon. Running logic faster than the frame rate just doesn't compute for me personally. I'm too old school I guess. lol. If my game is running at 30fps, then my logic is as well. ;) |

| ||

| Physics and collision can have problems at that rate: the distance moved per step gets large, so you start to see problems with penetration depths and other issues. |

| ||

| ^^ Linear interpolation can take care of that problem. Logic can run at 30Hz, but it will look 'butter smooth' on a super fast PC. Some things to keep in mind: Jitters don't only come from background tasks - the application itself can be very much responsible for them. Any variation in frame delta will cause this - meaning one frame may take longer to render/process than another. This almost always happens because you rarely render/process the exact same information every frame. This is inevitable, because delta time is INACCURATE! (you use the previous frame's delta, instead of the current one, thus your time is never actually right) An example of this would be a simple program rendering a random amount of stuff every frame, from 10 to 10,000 - see if you can smooth this out :P BTW, MAX2D does not use any sort of geometry batching, so 'thousands of sprites' will actually create bus bottlenecks and jitters. Implement a 'vertex cache' of some sort to be able to push max geometry through the system with few hardware calls. Cheers, Dima |
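
As a rough illustration of the interpolation idea in the post above, here is a minimal BlitzMax sketch. It is not anyone's actual framework code: the 30Hz step matches the figure in the post, but the frame times, the speed, and the names `LOGIC_STEP`, `prevX`, `currX` and `alpha` are all made up for the example. Logic advances in fixed ticks, and each rendered frame draws a position blended between the last two logic states.

```blitzmax
SuperStrict

Const LOGIC_STEP:Float = 1000.0 / 30.0   ' ms per logic tick (30Hz, as in the post)

Local prevX:Float = 0, currX:Float = 0   ' positions at the last two logic ticks
Local speed:Float = 4.0                  ' pixels per logic tick (illustrative)
Local accumulator:Float = 0              ' unprocessed time in ms

' Pretend frame times in ms; a real loop would measure these with MilliSecs()
Local frameTimes:Int[] = [16, 17, 17, 16, 17, 17]

For Local dt:Int = EachIn frameTimes
	accumulator :+ dt

	' Run as many fixed logic ticks as the elapsed time allows
	While accumulator >= LOGIC_STEP
		prevX = currX
		currX :+ speed
		accumulator :- LOGIC_STEP
	Wend

	' alpha is how far we are (0..1) between the last tick and the next one
	Local alpha:Float = accumulator / LOGIC_STEP
	Local drawX:Float = prevX + (currX - prevX) * alpha
	Print "drawX = " + drawX
Next
```

Note that this only smooths what gets drawn. Collision checks still happen once per logic tick, so the drawn position trails the newest logic state by up to one tick.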

| ||

| "BTW, MAX2D does not use any sort of geometry batching, so 'thousands of sprites' will actually create bus bottlenecks and jitters. Implement a 'vertex cache' of some sort to be able to push max geometry through the system with few hardware calls." In case it helps anybody: Max2D also creates a separate surface for every texture (whether a cell of an anim or a normal image). If you have thousands of sprites, stick them in the same pow2 texture and use SetUV to draw them (or search these forums for TAnim). |
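
To make the "one pow2 texture, many sprites" suggestion concrete, here is a hedged sketch of the arithmetic involved. `FrameUV` is a hypothetical helper, not part of Max2D, and it assumes the frames are packed in a simple grid, numbered left to right and top to bottom. It only computes the texture coordinates of one frame; how you pass them to your drawing code depends on the SetUV or TAnim approach you end up using.

```blitzmax
SuperStrict

' Hypothetical helper (not part of Max2D): returns [u0, v0, u1, v1] for one
' frame of a grid-packed atlas. Frames are numbered left to right, top to bottom.
Function FrameUV:Float[](index:Int, cellW:Int, cellH:Int, atlasW:Int, atlasH:Int)
	Local cols:Int = atlasW / cellW          ' whole frames per atlas row
	Local col:Int = index Mod cols
	Local row:Int = index / cols
	Local u0:Float = Float(col * cellW) / atlasW
	Local v0:Float = Float(row * cellH) / atlasH
	Local u1:Float = Float((col + 1) * cellW) / atlasW
	Local v1:Float = Float((row + 1) * cellH) / atlasH
	Return [u0, v0, u1, v1]
End Function

' Example: 32x32 frames packed into a 256x256 (pow2) atlas
Local uv:Float[] = FrameUV(9, 32, 32, 256, 256)
Print "u0=" + uv[0] + " v0=" + uv[1] + " u1=" + uv[2] + " v1=" + uv[3]
```

With 8 frames per row, frame 9 lands in column 1, row 1 of the atlas, so every sprite drawn from it shares the same texture and avoids a texture state change.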

| ||

| "In case it helps anybody: Max2D also creates a separate surface for every texture (whether a cell of an anim or a normal image). If you have thousands of sprites, stick them in the same pow2 texture and use SetUV to draw them (or search these forums for TAnim)." This is not good....at all. I at least assumed the "LoadAnimImage" frames would be referenced from a single surface to eliminate texture state changes. errrr.. oh well, it will affect older GPUs, not that big of an issue for newer GPUs. But at the least, make your images pow2 so they don't get scaled up to the next size and waste videomem. |

| ||

| Yeah all mine are reduced to nearest pow2 where possible. LoadAnimImage does waste a huge fat chunk of VRAM. It's been a bit of an issue on my current game. It's pretty easy to waste 10MB of VRAM with a simple anim if you are not careful. |
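
A quick back-of-the-envelope sketch shows how the waste adds up. It assumes, as the posts above describe, that every anim frame gets its own texture rounded up to the next power of two, and it assumes 32-bit pixels. The frame size and count are invented for illustration, and `NextPow2` is a helper written for this example.

```blitzmax
SuperStrict

' Smallest power of two that is >= n
Function NextPow2:Int(n:Int)
	Local p:Int = 1
	While p < n
		p :* 2
	Wend
	Return p
End Function

' Illustrative numbers, not measurements from any real game
Local frameW:Int = 130, frameH:Int = 130, frames:Int = 40
Local bytesPerPixel:Int = 4              ' assumes 32-bit RGBA textures

Local texW:Int = NextPow2(frameW)
Local texH:Int = NextPow2(frameH)
Local usedBytes:Int = frameW * frameH * bytesPerPixel * frames
Local allocBytes:Int = texW * texH * bytesPerPixel * frames

Print "Texture per frame: " + texW + "x" + texH
Print "Pixels actually used: " + usedBytes + " bytes"
Print "VRAM allocated: " + allocBytes + " bytes"
Print "Wasted: " + (allocBytes - usedBytes) + " bytes"
```

With these numbers each 130x130 frame occupies a 256x256 texture, so 40 frames allocate 10MB of which only about 2.7MB is image data. Trimming the frames to 128x128 would cut the allocation to a quarter.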

| ||

| I've been trying to follow this whole thing about jitter. The jitter example with the vertical lines does seem to show that every now and then the line jiggles left and right a little bit off from what would be smooth. Would I be understanding it right to say: Because you use a Millisecs() timer to tell what the current time is, and the timer is only accurate to 1 millisecond, the time it gives you could be off by as much as almost 1 whole millisecond, which means that you think you're further ahead or behind the real time than you are - and this translates to object positions being up to 1 pixel ahead of or behind where they should be, and this especially shows when you draw the object at its new position versus where it was in the previous frame - it could seem to move too little like a slowdown, or too fast like a speedup? Am I right? So then the real issue is the inaccuracy of reading the exact time due to not enough timer resolution, and the fact that it keeps jumping around by up to 1 whole millisecond? And I presume that while for much of the time you might be fairly close to what the real time is, every now and then for integer positions the time difference causes an overflow to the next millisecond, resulting in the object suddenly jumping a bit? Would it help to do a little loop:

t=Millisecs()

Repeat

t2=Millisecs()

Until t2>t

'We are now at the very beginning of the t2 millisecond

so that you are waiting to capture as closely as possible the `switch over` from the last millisecond to the new one, so that in terms of `real time` you are then starting your logic update at the very `start of a millisecond` rather than `somewhere in the millisecond`? You would have to waste up to a millisecond of CPU time to detect this, but it would let you lock onto the change in timer values much more accurately. You could do some kind of very small-grained `useful` processing within the wait loop so it's not totally wasted. Surely this would almost entirely correct the whole jitter issue - other than when you're catching up from some other process eating CPU cycles? Or have I totally misunderstood what is causing jitter and what jitter is? |

| ||

| Jitters or slightly jumpy animation is simply because the pc is not a dedicated game machine. (PC tasks like virus checkers, internet connections, etc, steal valuable resources from your apps.) Ironically, on a PC the faster the frame rate the more you will notice the jitters when/if they occur. If you lock your game at a slower frame rate, say 30fps, you pretty much have compensated for the occasional jitter automatically and wouldn't even need jitter correction. I have a test machine not connected to the internet, no memory resident programs, no virus checkers, etc. That machine runs pc games smooth as butter, but even on that pc there's still occasional jitter. |

| ||

| Angel Daniel: I tried something like that before but didn't get the results I'd expected for some reason; I may have done it wrong. Basically there are two main things that affect smoothness: a) jerks caused by background tasks, which are normally quite large deviations in time elapsed, and b) jitters caused by rounding anomalies on millisecs/pixels (moire effect) and also sometimes tiny variations due to background tasks. The code I wrote sorts out both. MGE: wow your framework is coming on nicely. |

| ||

| Can you explain what the rounding anomaly is on millisecs/pixels and why that causes a jitter? And what is the moire effect? |

| ||

| AngelDaniel - Check out GA's excellent first post, all of your answers are pretty much right there. ;) Windowed apps will jitter more than full screen apps btw. GA - Thanks! |

| ||

| So in a way, just as network lag requires tweening, interpolation and smoothing, jitter is basically a lag which needs the same attention? |

| ||

| http://en.wikipedia.org/wiki/Moir%C3%A9_pattern Yeah, jerks are lag caused by external tasks, which needs to be smoothed as best as possible. When I used the word jitter I was referring to smaller variations caused by millisecs being an integer and giving values like 15,16,16,17,16,16,15,17 etc instead of 16.66 each frame. |
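
The averaging approach described in the first post can be sketched roughly like this. This is not GA's actual code, just a minimal rolling mean under stated assumptions: the 50-frame window comes from the first post, the raw deltas are the ones quoted above, and `SmoothDelta`, `history`, `count` and `nextSlot` are names invented for the example.

```blitzmax
SuperStrict

Const WINDOW:Int = 50                     ' window size taken from the first post

Global history:Float[] = New Float[WINDOW]
Global count:Int = 0                      ' how many slots are filled so far
Global nextSlot:Int = 0                   ' ring-buffer write position

' Hypothetical helper: store the newest raw delta and return the mean
' of the deltas seen so far (at most the last WINDOW of them).
Function SmoothDelta:Float(rawDelta:Int)
	history[nextSlot] = rawDelta
	nextSlot = (nextSlot + 1) Mod WINDOW
	If count < WINDOW Then count :+ 1

	Local total:Float = 0
	For Local i:Int = 0 Until count
		total :+ history[i]
	Next
	Return total / count
End Function

' Raw integer deltas as quoted in the post above for a 60Hz display
Local raw:Int[] = [15, 16, 16, 17, 16, 16, 15, 17]
For Local d:Int = EachIn raw
	Print "raw " + d + " -> smoothed " + SmoothDelta(d)
Next
```

Because each new delta is only one of up to 50 values in the mean, a single late frame shifts the smoothed delta slightly over the following frames instead of producing one large catch-up step.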

| ||

| How would you be getting these moire patterns in your game graphics? |

| ||

| Not in the actual graphics, I'm talking about laying Time (millisecs), which is one "grid", over the pixels on the screen (another grid). The two don't match up unless you move EXACTLY 1 pixel per second, so you get an "interference" effect in the movement which is a moire effect. |

|